About three weeks ago there was a big announcement updating the status of the Vulkan effort for the Raspberry Pi 4, and the source code is now public. Given the interest it got, and now that the driver is more usable, we will try to post status updates more regularly. Let’s talk about what has happened since then.

Input Attachments

Input attachments are one of the main sub-features of Vulkan multipass, and we’ve gained support for them since the announcement. In Vulkan, multipass rendering is built more tightly into the API. Renderpasses can have multiple subpasses. These can have dependencies between each other, and each subpass defines a subset of “attachments”. One attachment that is easy to understand is the color attachment: this is where a given subpass writes a given color. Another is the input attachment: an attachment that was updated in a previous subpass (for example, it was the color attachment of that previous subpass) and that you get as an input in later subpasses. From the shader POV, you interact with it as a texture, with some restrictions. One important restriction is that you can only read the input attachment at the current pixel location. The main reason for this restriction is that on tile-based GPUs (like the one in the rpi4) all primitives are batched into tiles and fragment processing is done one tile at a time. In general, if you can live with those restrictions, Vulkan multipass and input attachments will provide better performance than traditional multipass solutions.
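To make the "current pixel only" restriction more concrete, here is a minimal CPU-side sketch in C. It is only a simulation under stated assumptions, not how the driver or the hardware is implemented: the sizes, the two shading functions, and the `render` helper are all hypothetical. It shows two subpasses run back to back on one tile, where the second subpass reads the first subpass's color output at exactly the same pixel, so the intermediate attachment never has to leave the tile-local buffer.

```c
#include <stdint.h>

/* Hypothetical framebuffer and tile sizes, chosen only for illustration. */
enum { WIDTH = 8, HEIGHT = 8, TILE = 4 };

/* Subpass 0: hypothetical shading that writes a value to its color attachment. */
static uint8_t subpass0_shade(int x, int y)
{
    return (uint8_t)(x + y * WIDTH);
}

/* Subpass 1: receives the input attachment value for the current pixel only. */
static uint8_t subpass1_shade(uint8_t input)
{
    return (uint8_t)(255 - input);
}

/* Render both subpasses one tile at a time and store only the final result. */
void render(uint8_t out[HEIGHT][WIDTH])
{
    for (int ty = 0; ty < HEIGHT; ty += TILE) {
        for (int tx = 0; tx < WIDTH; tx += TILE) {
            /* Stand-ins for tile-local memory: one buffer per attachment. */
            uint8_t color0[TILE][TILE];
            uint8_t color1[TILE][TILE];

            /* Subpass 0 writes its color attachment for this tile. */
            for (int y = 0; y < TILE; y++)
                for (int x = 0; x < TILE; x++)
                    color0[y][x] = subpass0_shade(tx + x, ty + y);

            /* Subpass 1 uses color0 as its input attachment. It only reads
             * color0[y][x] for the pixel it is shading, never a neighbour,
             * so everything it needs is already inside this tile. */
            for (int y = 0; y < TILE; y++)
                for (int x = 0; x < TILE; x++)
                    color1[y][x] = subpass1_shade(color0[y][x]);

            /* Only the final attachment is written out to main memory. */
            for (int y = 0; y < TILE; y++)
                for (int x = 0; x < TILE; x++)
                    out[ty + y][tx + x] = color1[y][x];
        }
    }
}
```

If the second subpass were allowed to read arbitrary pixels, it could need data from a tile that has not been processed yet, and the intermediate result would have to be written out in full before the second pass could start.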

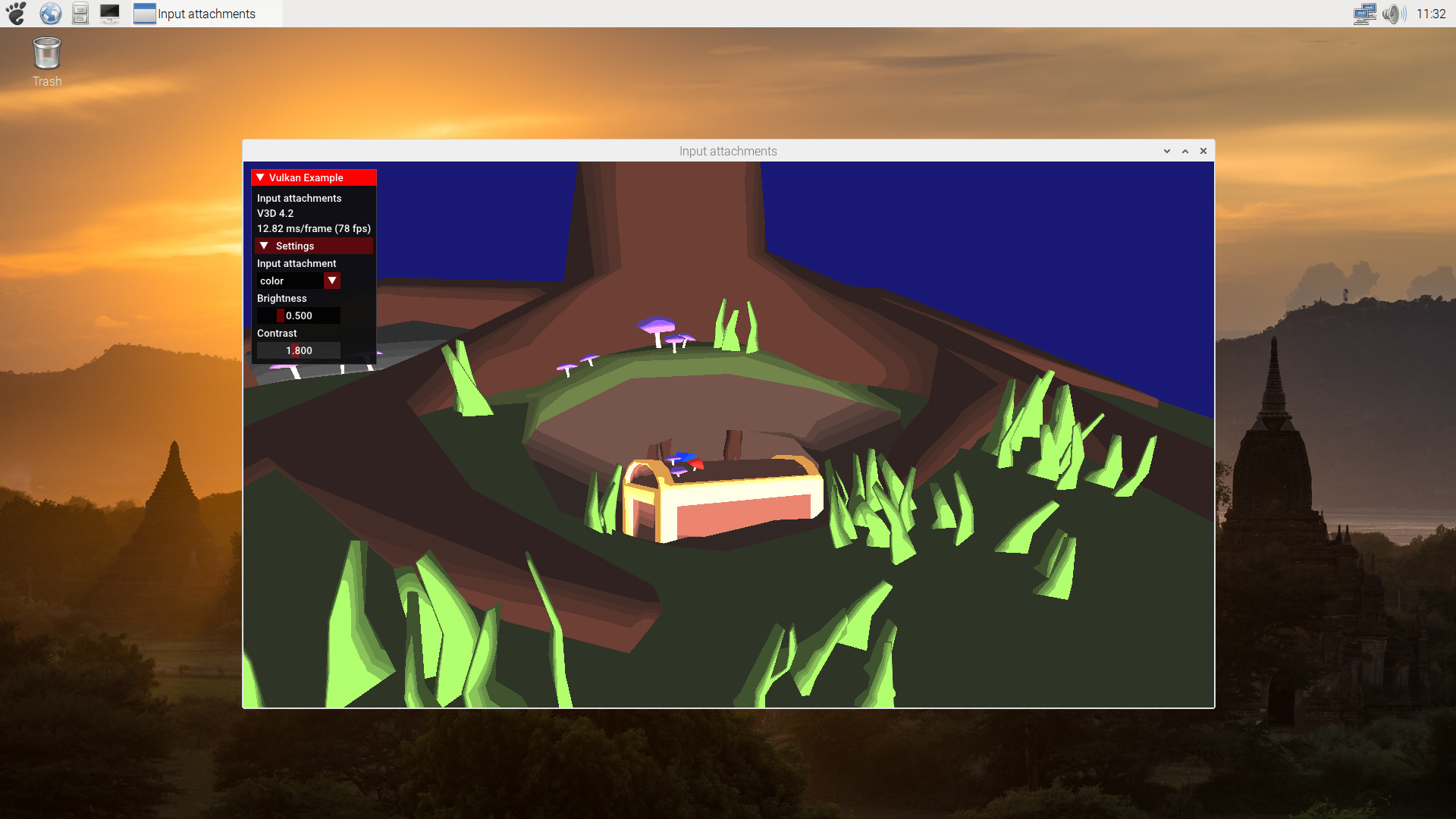

If you are interested in reading more details on this, you can check out ARM’s very nice presentation “Vulkan Multipass mobile deferred done right”, or Sascha Willems’ post “Vulkan input attachments and sub passes”. The latter also includes information about how to use them and code snippets from one of his demos. For reference, this is how the input attachment demo looks on the rpi4:

Compute Shader

Given that this was one of the most requested features after the last update, we expect that this will likely be the most popular news from this post: compute shaders are now supported.

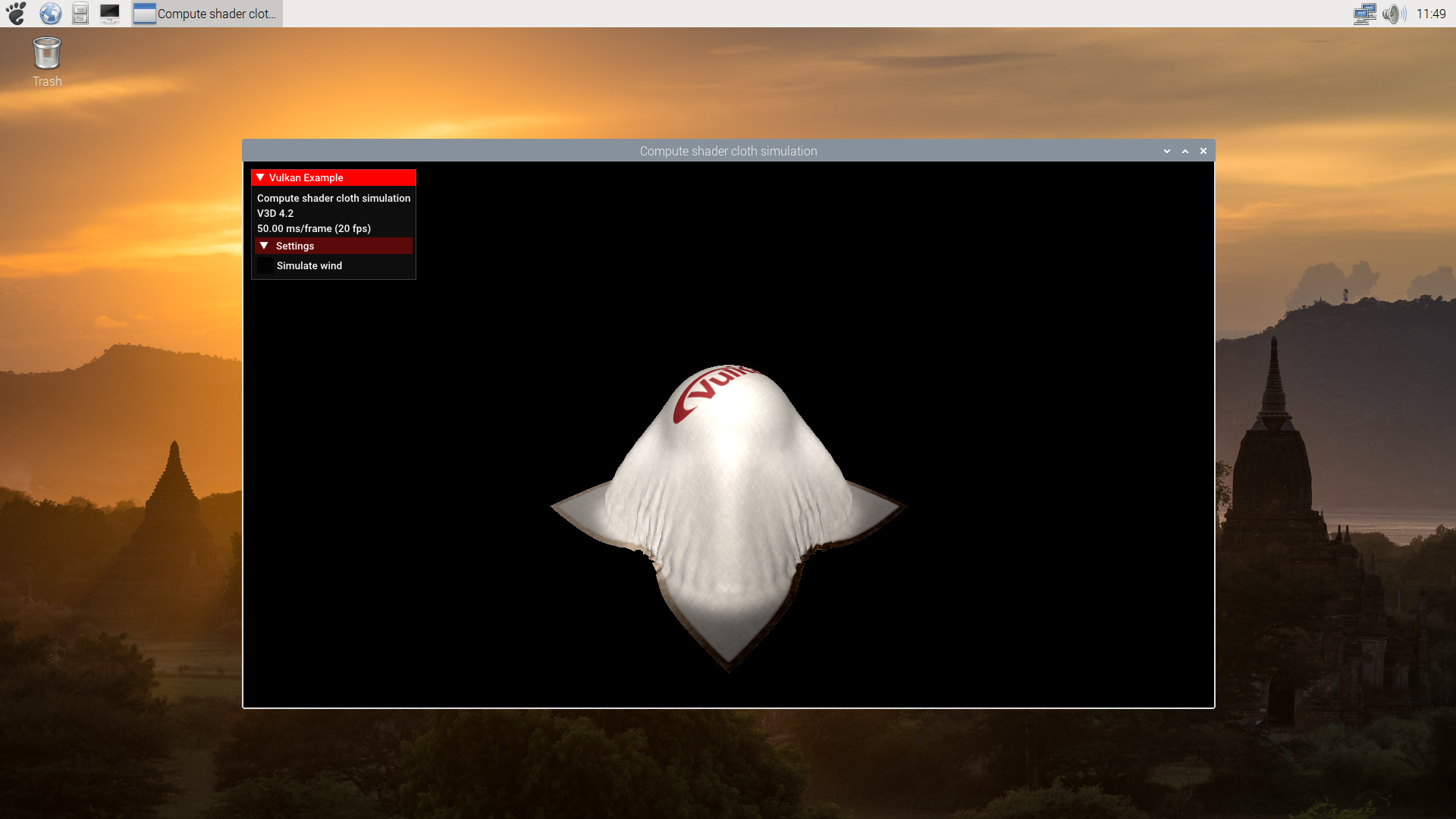

Compute shaders give applications the ability to perform non-graphics related tasks on the GPU, outside the normal rendering pipeline. For example, they don’t have vertices as input or fragments as output. They can still be used for massively parallel GPGPU algorithms. For example, this demo from Sascha Willems uses a compute shader to simulate cloth:

Storage Image

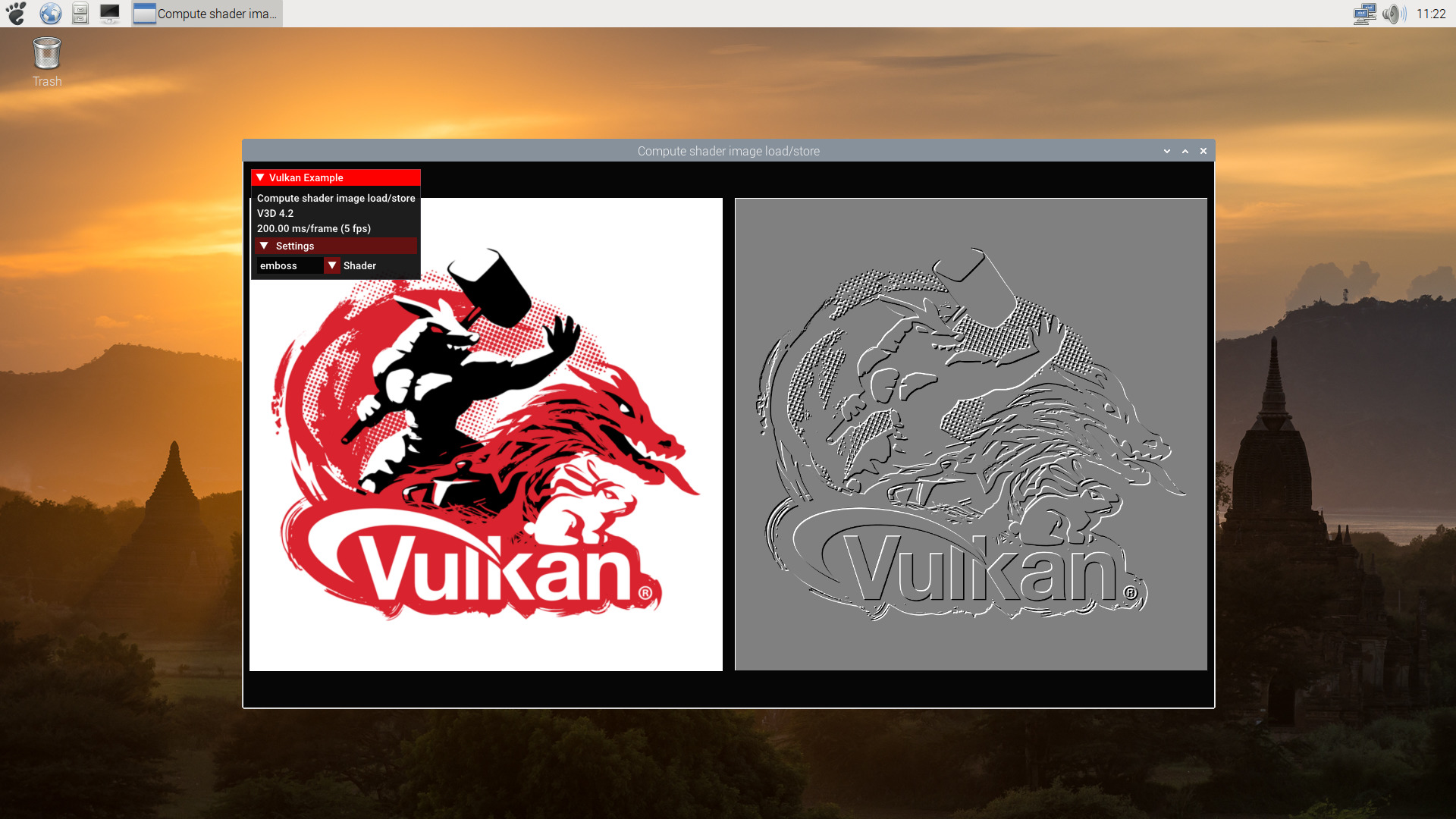

Storage Image is another recent addition. It is a descriptor type that represents an image view, and supports unfiltered loads, stores, and atomics in a shader. In most other ways it is very similar to the well-known OpenGL concept of a texture. Storage images are really common with compute shaders: a compute shader cannot render directly to an image, so if it needs an image, it will most likely update it. In fact, the two Sascha Willems demos using storage images also require compute shader support:

Performance

Right now our main focus for the driver is working on features, targeting a compliant Vulkan 1.0 driver. That said, now that we both support a good range of features and can run non-basic applications, we have devoted some time to analyzing whether there were clear points where we could improve performance. Among these we implemented:

1. A buffer object (BO) cache: internally we allocate and free buffer objects really often for basically the same tasks, so there is a constant need for buffers of the same size. Each such allocation/free requires a DRM call, so we implemented a BO cache (based on the existing one for the OpenGL driver): freed BOs are added to a cache, and reused when a new BO is allocated with the same size.

2. New code paths for buffer to image copies.
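The BO cache idea from item 1 can be sketched in a few lines of C. This is a simplified illustration under stated assumptions, not the driver's actual code: the `struct bo`, `bo_alloc`, `bo_free`, and `bo_cache_destroy` names are hypothetical, and `malloc` stands in for the DRM allocation call that the cache is meant to avoid.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical buffer object; a real driver would wrap a DRM handle here. */
struct bo {
    size_t size;
    void *map;        /* stand-in for the kernel-side allocation */
    struct bo *next;  /* link used only while the BO sits in the cache */
};

struct bo_cache {
    struct bo *free_list;    /* freed BOs waiting to be reused */
    unsigned kernel_allocs;  /* counts simulated DRM allocation calls */
};

/* Return a cached BO of exactly `size` bytes, or allocate a new one. */
struct bo *bo_alloc(struct bo_cache *cache, size_t size)
{
    for (struct bo **link = &cache->free_list; *link; link = &(*link)->next) {
        if ((*link)->size == size) {
            struct bo *hit = *link;
            *link = hit->next;  /* unlink the BO from the cache */
            hit->next = NULL;
            return hit;
        }
    }

    /* Cache miss: this is where the expensive DRM call would happen. */
    struct bo *bo = malloc(sizeof(*bo));
    if (!bo)
        return NULL;
    bo->map = malloc(size);
    if (!bo->map) {
        free(bo);
        return NULL;
    }
    bo->size = size;
    bo->next = NULL;
    cache->kernel_allocs++;
    return bo;
}

/* "Free" a BO by parking it in the cache instead of releasing it. */
void bo_free(struct bo_cache *cache, struct bo *bo)
{
    assert(bo != NULL && bo->next == NULL);
    bo->next = cache->free_list;
    cache->free_list = bo;
}

/* Release everything that is still cached. */
void bo_cache_destroy(struct bo_cache *cache)
{
    while (cache->free_list) {
        struct bo *bo = cache->free_list;
        cache->free_list = bo->next;
        free(bo->map);
        free(bo);
    }
}
```

With a zero-initialized `struct bo_cache`, allocating 4096 bytes, freeing the BO, and allocating 4096 bytes again returns the same BO and leaves `kernel_allocs` at 1. A real cache would also need some policy for evicting BOs that stay unused, which this sketch leaves out.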

Bugfixing!!

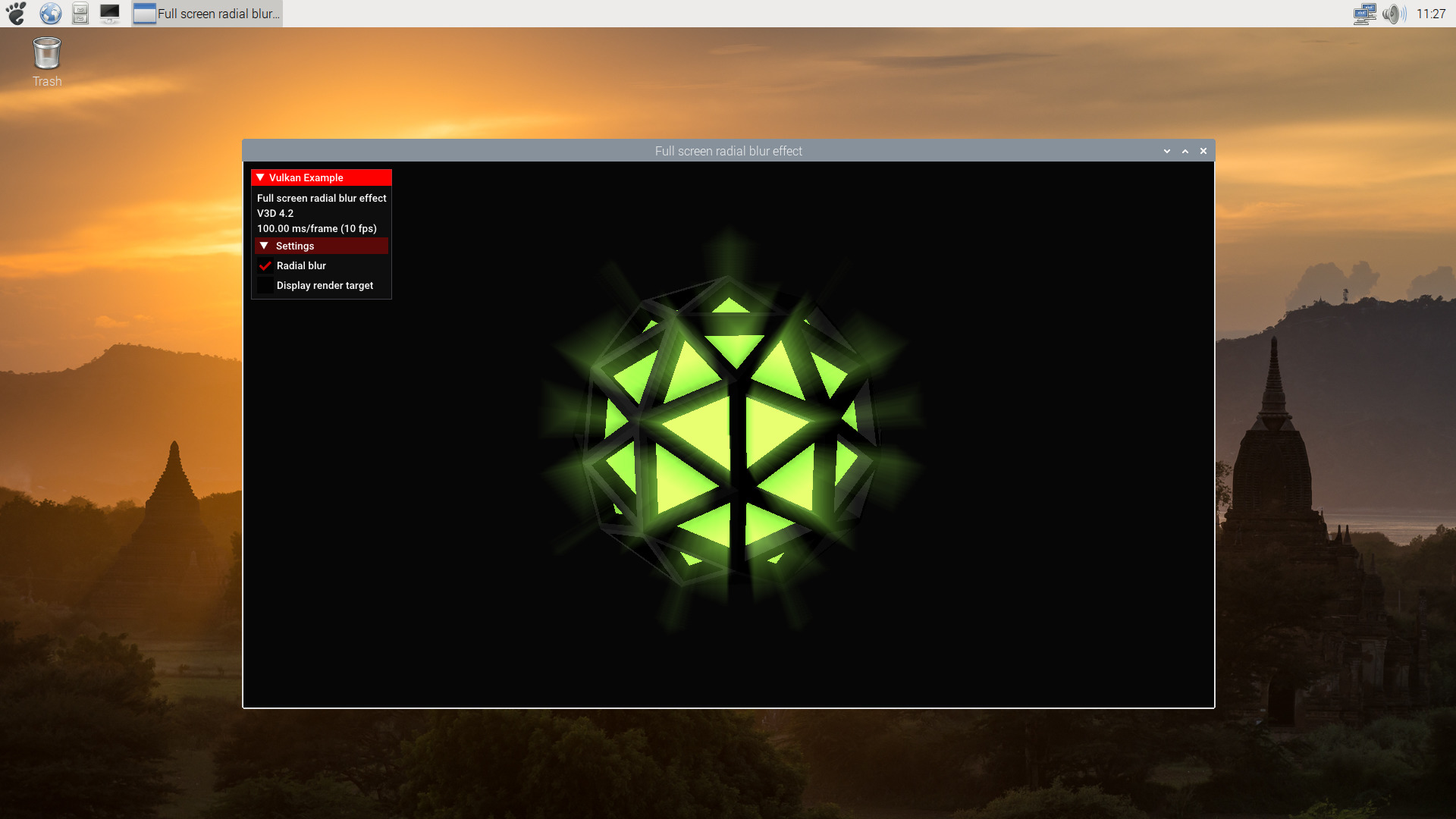

In addition to working on specific features, we also spent some time fixing specific driver bugs, using failing Vulkan CTS tests as a reference. Thanks to that work, Sascha Willems’ radial blur demo is now rendering properly, even though we didn’t focus specifically on that demo:

Next?

Now that the driver supports a good range of features and we are able to test more applications and run more Vulkan CTS Tests with all the needed features implemented, we plan to focus some efforts towards bugfixing for a while.

We also plan to start to work on implementing the support for Pipeline Cache, which allows the result of pipeline construction to be reused between pipelines and between runs of an application.

Congrats for achieving such big milestones! Good luck on fixing the remaining bugs and the upcoming performance optimization!

That’s great, I’m looking forward to raspberry-pi-4 running ncnn neural network library with vulkan compute.

https://github.com/Tencent/ncnn

Super progress!

Do you have an early idea of which `VkSubgroupFeatureFlagBits` will be supported?

Also, if Vulkan 1.2 compliance is the goal, then will `VkDeviceAddress` (and supporting extensions) be supported?

> Do you have an early idea of which `VkSubgroupFeatureFlagBits` will be supported?

VkSubgroupFeatureFlags is a Vulkan 1.1 feature. Right now our plan is getting Vulkan 1.0 core featureset/conformance. We still need to decide what the next step after that would be: moving to support 1.1 core features, or performance/code stabilization work. But it is likely that we would go for the latter first before moving to expand the features.

> Also, if Vulkan 1.2 compliance is the goal, then will `VkDeviceAddress` (and supporting extensions) be supported?

As mentioned, the first goal is 1.0 core compliance (although I thought that VkDeviceAddress was already defined on 1.0?). If we work on any extension, right now the priority would be extensions that become core in later Vulkan versions.