Encrypted Media Extensions (a.k.a. EME) is the W3C standard for encrypted media on the web. It lets media providers such as Hulu, Netflix, HBO, Disney+, Prime Video, etc. distribute their content with reasonable confidence that it will be very complicated for people to “save” their assets without permission. Why do I use the word “serious” in the title? WebKit already supports Clear Key, which is the W3C EME reference implementation, but EME supports more encryption systems, even proprietary ones (I have my opinion about this, you can ask me privately). No service provider (that I know of) supports Clear Key; they usually rely on Widevine, PlayReady or some other system.

Three years ago, my colleague Žan Doberšek finished the implementation of what was going to be the shell of WebKit’s modern EME implementation, following the latest W3C proposal. We implemented that downstream (at Web Platform for Embedded) as well, using Thunder, which includes as a plugin a fork of what was the Open Content Decryption Module (a.k.a. OpenCDM). The OpenCDM API changed quite a lot during this journey. It works well and there are millions of set-top boxes currently using it.

The delta between downstream and the upstream GStreamer-based WebKit ports was quite big, testing was difficult and syncing was not always easy, so we decided to reverse the situation.

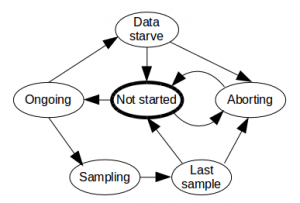

Our first step was done by my colleague Charlie Turner, who made Clear Key work upstream again while adapting some changes the Apple folks had made in the meantime. It was amazing to see the Clear Key tests passing again, and his work on the CDMProxy related classes was awesome. After having Clear Key working, I had to adapt those classes a bit to accommodate Thunder.

To explain a bit about the WebKit EME architecture, I must say that there are two layers. The first is the cross-platform one, which implements the W3C API (MediaKeys, MediaKeySession, CDM…). These classes rely on the platform ones (CDMPrivate, CDMInstance, CDMInstanceSession), which form the second layer, to handle the platform management, message exchange, etc. Apple’s playback system is fully integrated with their DRM system, so they don’t need anything else. We do, because we need to integrate our own decryptors and defer to Thunder for decryption, so in the GStreamer-based ports we also need the CDMProxy related classes: CDMProxy, CDMInstanceProxy, CDMInstanceSessionProxy… The last two extend CDMInstance and CDMInstanceSession respectively so that they can deal with key management, which is abstracted into KeyHandle and KeyStore.
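
To make the layering a bit more concrete, here is a minimal sketch of how the proxy layer sits between the platform interface and a concrete backend. Take it with a grain of salt: the class names come from the paragraph above, but every member, signature and type alias here (`mergeKeys`, `keyStore`, `KeyID`, `KeyValue`…) is a simplified assumption for illustration, not the actual WebKit code.

```cpp
#include <cstddef>
#include <map>
#include <optional>
#include <string>
#include <vector>

// Simplified, hypothetical stand-ins for the classes named in the post.
using KeyID = std::vector<unsigned char>;
using KeyValue = std::vector<unsigned char>;

// A key together with its identifier (the real KeyHandle holds more state).
struct KeyHandle {
    KeyID id;
    KeyValue value;
};

// Stores key handles indexed by key ID so that decryptors can look them up.
class KeyStore {
public:
    void add(const KeyHandle& handle) { m_keys[handle.id] = handle; }

    std::optional<KeyHandle> find(const KeyID& id) const
    {
        auto it = m_keys.find(id);
        if (it == m_keys.end())
            return std::nullopt;
        return it->second;
    }

    std::size_t size() const { return m_keys.size(); }

private:
    std::map<KeyID, KeyHandle> m_keys;
};

// Second layer: the platform interface the cross-platform classes talk to.
class CDMInstance {
public:
    virtual ~CDMInstance() = default;
    virtual std::string keySystem() const = 0;
};

// GStreamer ports only: a platform instance that also owns the key store.
class CDMInstanceProxy : public CDMInstance {
public:
    // Hypothetical helper: remember the keys a session has obtained.
    void mergeKeys(const std::vector<KeyHandle>& keys)
    {
        for (const auto& key : keys)
            m_keyStore.add(key);
    }

    const KeyStore& keyStore() const { return m_keyStore; }

private:
    KeyStore m_keyStore;
};

// A concrete backend only has to fill in the system-specific bits.
class CDMInstanceThunder final : public CDMInstanceProxy {
public:
    std::string keySystem() const override { return "com.widevine.alpha"; }
};
```

The point of the sketch is that key storage lives in the shared proxy layer, so a backend such as the Clear Key or the Thunder one inherits it instead of reimplementing it.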

Once the abstraction is there (let’s remember that the abstraction works both for Clear Key and Thunder), the Thunder implementation is quite simple: it just glues the CDMProxy, CDMInstanceProxy and CDMInstanceSessionProxy classes to the Thunder system and adds a GStreamer decryptor element for it. I might have made a mistake when selecting the files, but considering the Thunder classes plus the GStreamer common decryptor code, cloc says it is just 1198 lines of platform code. I think that is pretty low for what it does. Apart from that, obviously, there are 5760 lines of cross-platform code.

To build and run all this you need to do several things:

- Build the dependencies with `WEBKIT_JHBUILD=1 JHBUILD_ENABLE_THUNDER="yes"` to enable the old-fashioned JHBuild build and force it to build the Thunder dependencies. All dependencies are in JHBuild; even Widevine is referenced, but to download it you need the proper credentials as it is closed source.
- Pass `--thunder` when calling `build-webkit.sh`.
- Run MiniBrowser with `WEBKIT_GST_EME_RANK_PRIORITY="Thunder"` and pass the parameters `--enable-mediasource=TRUE --enable-encrypted-media=TRUE --autoplay-policy=allow`. The autoplay policy is usually optional, but in this case it is necessary for the YouTube TV tests. We need to give the Thunder decryptor a higher priority because of WebM, which does not specify a key system; without the higher priority, the Clear Key decryptor can be selected and fail. MP4 does not cause trouble because the protection system is specified and the caps negotiation does its magic.
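
The WebM versus MP4 difference can be illustrated with a toy model of rank-based selection. This is only a sketch under my own assumptions, not GStreamer’s actual autoplugging code: the `Decryptor` struct, the `pickDecryptor` function, the decryptor names and the rule that ties are broken by list order are all hypothetical.

```cpp
#include <optional>
#include <string>
#include <vector>

// Hypothetical description of a decryptor element.
struct Decryptor {
    std::string name;
    std::string protectionSystem; // the system this decryptor handles
    int rank;                     // higher rank is preferred
};

// Picks the highest-ranked decryptor compatible with the stream.
// An empty requestedSystem models WebM, where no key system is specified,
// so every decryptor is a candidate and only the rank decides.
// On equal ranks the earliest candidate in the list wins (toy assumption).
std::optional<Decryptor> pickDecryptor(const std::vector<Decryptor>& candidates,
                                       const std::string& requestedSystem)
{
    std::optional<Decryptor> best;
    for (const auto& candidate : candidates) {
        // MP4-like case: the protection system filters out mismatches.
        if (!requestedSystem.empty() && candidate.protectionSystem != requestedSystem)
            continue;
        if (!best || candidate.rank > best->rank)
            best = candidate;
    }
    return best;
}
```

In this model, with equal ranks and an empty `requestedSystem`, whichever decryptor comes first wins, which may well be the Clear Key one. Raising the rank of the Thunder decryptor makes the choice deterministic, while a stream that names its protection system never depends on rank to exclude the wrong decryptor.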

As you may have guessed, if you take a closer look at the GStreamer JHBuild moduleset you’ll see that only Widevine is supported. To support more key systems, you only have to make them build in the Thunder ecosystem and add them to CDMFactoryThunder::supportedKeySystems.
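
As a rough idea of what that last step amounts to, here is a sketch of a supported key systems list and a lookup over it. It is a stand-in written with the standard library, not the real CDMFactoryThunder code: the free functions and their signatures are assumptions, and the commented-out PlayReady entry is only an example of what an extra system could look like.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Hypothetical stand-in for the list behind CDMFactoryThunder::supportedKeySystems.
inline const std::vector<std::string>& supportedKeySystems()
{
    static const std::vector<std::string> keySystems = {
        "com.widevine.alpha",
        // "com.microsoft.playready", // example: add once its Thunder plugin builds
    };
    return keySystems;
}

// Returns true when the requested key system is in the list above.
inline bool supportsKeySystem(const std::string& keySystem)
{
    const auto& systems = supportedKeySystems();
    return std::find(systems.begin(), systems.end(), keySystem) != systems.end();
}
```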

When I coded this, all YouTube TV tests for Widevine were green on the desktop. At the time of writing this post they aren’t, because of some problem with the Widevine installation that will be sorted out quickly, I hope.