QEMU 2.6 was released a few days ago. One new feature that I have been working on is the new way to configure I/O limits in disk drives to allow bursts and increase the responsiveness of the virtual machine. In this post I’ll try to explain how it works.

The basic settings

First I will summarize the basic settings that were already available in earlier versions of QEMU.

Two aspects of the disk I/O can be limited: the number of bytes per second and the number of operations per second (IOPS). For each one of them the user can set a global limit or separate limits for read and write operations. This gives us a total of six different parameters.

I/O limits can be set using the throttling.* parameters of -drive, or using the QMP block_set_io_throttle command. These are the names of the parameters for both cases:

| -drive | block_set_io_throttle |
|---|---|
| throttling.iops-total | iops |
| throttling.iops-read | iops_rd |
| throttling.iops-write | iops_wr |
| throttling.bps-total | bps |
| throttling.bps-read | bps_rd |
| throttling.bps-write | bps_wr |

It is possible to set limits for both IOPS and bps at the same time, and for each case we can decide whether to have separate read and write limits or not, but if iops-total is set then neither iops-read nor iops-write can be set. The same applies to bps-total and bps-read/write.

The default value of these parameters is 0, and it means unlimited.
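The rule about combining limits can be sketched as a small check. This is only an illustration of the rule as stated above, not QEMU's own validation code; the function name `check_basic_limits` and its keyword arguments are hypothetical.

```python
def check_basic_limits(iops_total=0, iops_read=0, iops_write=0,
                       bps_total=0, bps_read=0, bps_write=0):
    """Return a list of problems found in a set of basic limits.

    Hypothetical helper: 0 means "unlimited", mirroring the default
    value described in the text.
    """
    problems = []
    values = {
        "iops-total": iops_total, "iops-read": iops_read,
        "iops-write": iops_write, "bps-total": bps_total,
        "bps-read": bps_read, "bps-write": bps_write,
    }
    for name, value in values.items():
        if value < 0:
            problems.append(f"{name} cannot be negative")
    # A total limit cannot be combined with separate read/write limits.
    if iops_total and (iops_read or iops_write):
        problems.append("iops-total cannot be combined with iops-read/iops-write")
    if bps_total and (bps_read or bps_write):
        problems.append("bps-total cannot be combined with bps-read/bps-write")
    return problems


# IOPS and bps limits can coexist, each with its own total/split choice.
print(check_basic_limits(iops_read=50, iops_write=20, bps_total=1048576))
# Mixing iops-total with iops-read is reported as a problem.
print(check_basic_limits(iops_total=100, iops_read=50))
```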

In its most basic usage, the user can add a drive to QEMU with a limit of, say, 100 IOPS with the following -drive line:

-drive file=hd0.qcow2,throttling.iops-total=100

We can do the same using QMP. In this case all these parameters are mandatory, so we must set to 0 the ones that we don’t want to limit:

{ "execute": "block_set_io_throttle",
  "arguments": {
    "device": "virtio0",
    "iops": 100,
    "iops_rd": 0,
    "iops_wr": 0,
    "bps": 0,
    "bps_rd": 0,
    "bps_wr": 0
  }
}
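Since all six parameters are mandatory in QMP, a small helper that fills in the zeros can avoid mistakes when building the command by hand. The following is a minimal sketch that only produces the JSON message; the helper name `throttle_command` is hypothetical, and sending the message over a QMP socket is left out.

```python
import json

# The six basic limits accepted by block_set_io_throttle.
BASIC_LIMITS = ("iops", "iops_rd", "iops_wr", "bps", "bps_rd", "bps_wr")


def throttle_command(device, **limits):
    """Build a block_set_io_throttle message (hypothetical helper).

    Any basic limit that is not given is set to 0, i.e. unlimited.
    """
    unknown = set(limits) - set(BASIC_LIMITS)
    if unknown:
        raise ValueError(f"unknown limits: {sorted(unknown)}")
    arguments = {"device": device}
    for name in BASIC_LIMITS:
        arguments[name] = limits.get(name, 0)
    return {"execute": "block_set_io_throttle", "arguments": arguments}


# Same command as the QMP example above: 100 IOPS, everything else unlimited.
print(json.dumps(throttle_command("virtio0", iops=100), indent=2))
```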

I/O bursts

While the settings that we have just seen are enough to prevent the virtual machine from performing too much I/O, it can be useful to allow the user to exceed those limits occasionally. This way we can have a more responsive VM that is able to cope better with peaks of activity while keeping the average limits lower the rest of the time.

Starting from QEMU 2.6, it is possible to allow the user to do bursts of I/O for a configurable amount of time. A burst is an amount of I/O that can exceed the basic limit, and there are two parameters that control them: their length and the maximum amount of I/O they allow. These two can be configured separately for each one of the six basic parameters described in the previous section, but here we’ll use ‘iops-total’ as an example.

The I/O limit during bursts is set using ‘iops-total-max’, and the maximum length (in seconds) is set with ‘iops-total-max-length’. So if we want to configure a drive with a basic limit of 100 IOPS and allow bursts of 2000 IOPS for 60 seconds, we would do it like this (the line is split for clarity):

-drive file=hd0.qcow2,
       throttling.iops-total=100,
       throttling.iops-total-max=2000,
       throttling.iops-total-max-length=60

Or with QMP:

{ "execute": "block_set_io_throttle",
  "arguments": {
    "device": "virtio0",
    "iops": 100,
    "iops_rd": 0,
    "iops_wr": 0,
    "bps": 0,
    "bps_rd": 0,
    "bps_wr": 0,
    "iops_max": 2000,
    "iops_max_length": 60
  }
}

With this, the user can perform I/O on hd0.qcow2 at a rate of 2000 IOPS for 1 minute before it’s throttled down to 100 IOPS.

The user will be able to do bursts again if there’s a sufficiently long period of time with unused I/O (see below for details).

The default value for ‘iops-total-max’ is 0 and it means that bursts are not allowed. ‘iops-total-max-length’ can only be set if ‘iops-total-max’ is set as well, and its default value is 1 second.

Controlling the size of I/O operations

When applying IOPS limits all I/O operations are treated equally regardless of their size. This means that the user can take advantage of this in order to circumvent the limits and submit one huge I/O request instead of several smaller ones.

QEMU provides a setting called throttling.iops-size to prevent this from happening. This setting specifies the size (in bytes) of an I/O request for accounting purposes. Larger requests will be counted proportionally to this size.

For example, if iops-size is set to 4096 then an 8KB request will be counted as two, and a 6KB request will be counted as one and a half. This only applies to requests larger than iops-size: smaller requests will always be counted as one, no matter their size.

The default value of iops-size is 0 and it means that the size of the requests is never taken into account when applying IOPS limits.
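The accounting rule can be written down in a few lines. This is a sketch of the rule as described in this section, not a copy of QEMU's code; the function name `request_cost` is made up for the example.

```python
def request_cost(request_bytes, iops_size=0):
    """Number of operations a request counts as for IOPS accounting.

    Hypothetical helper following the rule in the text: with iops_size
    set to 0 the request size is ignored, requests no larger than
    iops_size count as one, and larger ones count proportionally.
    """
    if iops_size == 0 or request_bytes <= iops_size:
        return 1.0
    return request_bytes / iops_size


print(request_cost(8192, iops_size=4096))   # 8KB request
print(request_cost(6144, iops_size=4096))   # 6KB request
print(request_cost(512, iops_size=4096))    # smaller than iops-size
print(request_cost(1 << 20))                # iops-size unset
```

With iops-size set to 4096, a single 1MB request would be charged as 256 operations instead of one, which is what closes the loophole described above.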

Applying I/O limits to groups of disks

In all the examples so far we have seen how to apply limits to the I/O performed on individual drives, but QEMU allows grouping drives so they all share the same limits.

This feature is available since QEMU 2.4. Please refer to the post I wrote when it was published for more details.

The Leaky Bucket algorithm

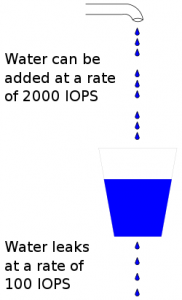

I/O limits in QEMU are implemented using the leaky bucket algorithm (specifically the “Leaky bucket as a meter” variant).

This algorithm uses the analogy of a bucket that leaks water constantly. The water that gets into the bucket represents the I/O that has been performed, and no more I/O is allowed once the bucket is full.

To see the way this corresponds to the throttling parameters in QEMU, consider the following values:

iops-total=100 iops-total-max=2000 iops-total-max-length=60

- Water leaks from the bucket at a rate of 100 IOPS.

- Water can be added to the bucket at a rate of 2000 IOPS.

- The size of the bucket is 2000 x 60 = 120000.

- If iops-total-max is unset then the bucket size is 100.

The bucket is initially empty, therefore water can be added until it’s full at a rate of 2000 IOPS (the burst rate). Once the bucket is full we can only add as much water as it leaks, therefore the I/O rate is reduced to 100 IOPS. If we add less water than it leaks then the bucket will start to empty, allowing for bursts again.

Note that since water is leaking from the bucket even during bursts, it will take a bit more than 60 seconds at 2000 IOPS to fill it up. After those 60 seconds the bucket will have leaked 60 x 100 = 6000, allowing for 3 more seconds of I/O at 2000 IOPS.

Also, due to the way the algorithm works, longer bursts can be done at a lower I/O rate, e.g. 1000 IOPS for 120 seconds.
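The arithmetic in this section can be reproduced with a simplified model of the bucket. This is a sketch of the analogy only (an initially empty bucket filled at a constant rate), not QEMU's actual implementation; `bucket_size` and `burst_duration` are hypothetical names.

```python
def bucket_size(limit, burst_limit=0, burst_length=1):
    """Bucket capacity, following the bullet points above."""
    if burst_limit == 0:
        # No burst configured: the bucket holds one second of I/O.
        return limit
    return burst_limit * burst_length


def burst_duration(limit, burst_limit, burst_length, rate=None):
    """Seconds needed to fill an initially empty bucket.

    Water is added at `rate` (the burst rate by default) while it
    leaks at `limit`, so the bucket fills at `rate - limit`.
    """
    rate = burst_limit if rate is None else rate
    if rate > burst_limit:
        raise ValueError("rate cannot exceed the burst limit")
    if rate <= limit:
        return float("inf")  # the bucket never fills up
    return bucket_size(limit, burst_limit, burst_length) / (rate - limit)


# iops-total=100 iops-total-max=2000 iops-total-max-length=60
print(bucket_size(100, 2000, 60))
print(round(burst_duration(100, 2000, 60), 1))
print(round(burst_duration(100, 2000, 60, rate=1000), 1))
```

In this model the full-rate burst lasts 120000 / 1900, or about 63 seconds, which matches the "3 more seconds" above. A lower rate fills the bucket more slowly, so the burst can last longer.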

Acknowledgments

As usual, my work in QEMU is sponsored by Outscale and has been made possible by Igalia and the help of the QEMU development team.

Enjoy QEMU 2.6!


Great article

Is there a way to monitor the IOP consumption for each individual VM disk using qemu 2.6?

With that, we could have an overview of what's happening and which VM is consuming the most, and then increase or decrease the IOPS limit for each disk.

Thanks!

Yes, you can use the ‘query-blockstats’ command for that.

Hi Berto,

First let me congratulate you about your blog, plenty of interesting information!

I understand the big difference from QEMU 2.4 is the iops-total-max-length parameter, which at the end controls the size of the bucket. What I don’t really understand is that in QEMU 2.4 we already had burst -but no bucket size configuration- do you know how it was behaving in 2.4? I mean, do you know for how long would it allow a burst?

Thanks!

Hi Xavier,

without iops-total-max-length bursts were only allowed for one second, so it’s effectively the same as it is now but with iops-total-max-length always set to 1.

Therefore iops-total=100 and iops-total-max=2000 means that you can perform 2000 I/O operations in one second before being throttled down to 100 IOPS. Due to the way the algorithm works you could also have 1000 operations for two seconds, or ~650 for three seconds, etc., but the hard limit per second is always 2000.

I hope that answers your question!

Hi Berto,

Thank you for this! I have just tried it with Qemu 2.8 (jessie-backports with Debian). I have an interesting situation where i can see it working at boot time (ie, if I throttle it down booting the init takes a long time), but once it is in the guest OS the throttling stops working. This is using xen, with full hypervisor mode.

Any suggestions/advice?

Thanks in advance for your time.

A

Hi Andrew,

when you say it stops working, do you mean that I/O runs at full speed, as if no throttling limits were set?

There was a bug some months ago that caused the opposite effect (i.e. no I/O at all, see commit 6bf77e1c2dc24da), but that has already been fixed and as far as I’m aware there are no known similar problems in the throttling code in QEMU 2.8.

I haven’t tried it with Xen, so I cannot help you with that, but if you manage to reproduce the problem using QEMU alone I would be interested to know.

Thanks!

Hi Berto!

Thanks for the quick reply.

By ‘stops working’ I do mean that no throttling happens. I try to throttle it to 1MB/sec eg:

throttling.bps-write=1048576,throttling.bps-read=1048576

and I can still write within the guest VM at many hundreds of MB/sec (sustained, not just in a burst, and many times larger than the RAM size, so it is not being cached locally within the guest).

Running this version from jessie-backports:

qemu-system 1:2.8+dfsg-3~bpo8+1 amd64 QEMU full system emulation binaries

qemu-block-extra:amd64 1:2.8+dfsg-3~bpo8+1 amd64 extra block backend modules for qemu-system and qemu-utils

Do you have any pointers to testing with QEMU alone? I am struggling to find examples and current documentation. Your site has been helpful!

I should also note that i’m using a ceph rbd device with qemu (I am hoping that this won’t bother the throttling)

Thanks,

Andrew.

Hi again Andrew,

you should be able to test it with QEMU alone with something like this:

qemu-system-x86_64 -M accel=kvm -drive if=virtio,file=hd.qcow2,throttling.bps-write=1048576,throttling.bps-read=1048576

I’m trying that very command line with the same QEMU version as you (not the backport, though) and it works as expected.

Hi Berto.

Setting IO throttle works fine for me, but I have great trouble unsetting IO throttling via QMP.

After studying docs/throttle.txt I thought it should be possible to unset IOThrottle by using these parameters with the block_set_io_throttle command:

{"iops": 0, "iops_rd": 0, "iops_wr": 0, "bps": 0, "bps_rd": 0, "bps_wr": 0, "iops_max": 0, "iops_max_length": 1, "iops_rd_max": 0, "iops_rd_max_length": 1, "iops_wr_max": 0, "iops_wr_max_length": 1, "bps_max": 0, "bps_max_length": 1, "bps_rd_max": 0, "bps_rd_max_length": 1, "bps_wr_max": 0, "bps_wr_max_length": 1}

But after using these parameters on already throttled VM, the QEMU crashes or freezes.

I have also tried to set _length parameters to 0 but such a value is not accepted.

When I omit the *_length parameters, QEMU crashes too. I have tried it on QEMU versions 2.8.1 and 3.1.0 (from the Debian distribution).

I can set the throttle values to very high numbers to “unthrottle” the disk, but I don’t want to “pay” the overhead of the throttling algorithm.

Is there any way how to safely unthrottle a disk throttled by the block_set_io_throttle command?

Hello, QEMU should not crash or freeze when you do that. Can you please file a bug report with all those details here?

https://gitlab.com/qemu-project/qemu/-/issues

Thanks!
