Chromium 109 (released January 10, 2023) has dropped support for xdg_shell_unstable_v6, one of the Wayland shell protocols.

For years, Chromium’s Wayland backend was aware of two versions of the XDG shell interface. There were two parallel implementations of the client part, as alike as twins. In places, the only difference was the names of the functions. Wayland has a naming convention that requires these names to start with the name of the interface they work with, so where one implementation would call xdg_toplevel_resize(), the other would call zxdg_toplevel_v6_resize().

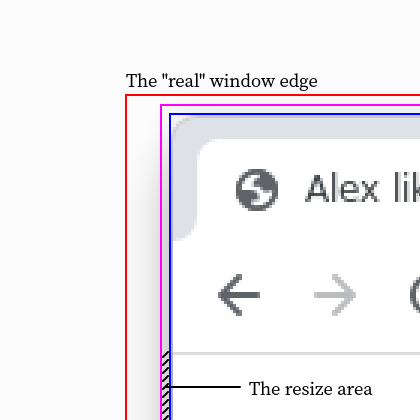
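To make the "twins" concrete, here is a minimal sketch of two wrappers whose bodies differ only in the prefix of the function they call. Everything in the `stub` namespace is a stand-in that I made up so the example compiles on its own: real code would include the headers generated from the protocol XML files, and the wrapper class names are hypothetical, not Chromium's.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Stand-ins for the generated Wayland client headers (assumption: real code
// would include those headers instead). The stubs only log which function
// was called; they do not talk to a compositor.
namespace stub {
struct wl_seat {};
struct xdg_toplevel {};
struct zxdg_toplevel_v6 {};

inline std::vector<std::string>& CallLog() {
  static std::vector<std::string> log;
  return log;
}

inline void xdg_toplevel_resize(xdg_toplevel*, wl_seat*, uint32_t, uint32_t) {
  CallLog().push_back("xdg_toplevel_resize");
}

inline void zxdg_toplevel_v6_resize(zxdg_toplevel_v6*, wl_seat*, uint32_t,
                                    uint32_t) {
  CallLog().push_back("zxdg_toplevel_v6_resize");
}
}  // namespace stub

// Hypothetical wrapper around the stable interface.
class StableToplevel {
 public:
  void Resize(stub::wl_seat* seat, uint32_t serial, uint32_t edges) {
    assert(seat != nullptr);
    stub::xdg_toplevel_resize(&toplevel_, seat, serial, edges);
  }

 private:
  stub::xdg_toplevel toplevel_;
};

// Hypothetical wrapper around unstable v6: same body, different prefix.
class UnstableV6Toplevel {
 public:
  void Resize(stub::wl_seat* seat, uint32_t serial, uint32_t edges) {
    assert(seat != nullptr);
    stub::zxdg_toplevel_v6_resize(&toplevel_, seat, serial, edges);
  }

 private:
  stub::zxdg_toplevel_v6 toplevel_;
};
```

Calling `Resize()` on one object of each class leaves two entries in the log, one per protocol, which is about all that distinguished the two implementations in such places.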

The shell is a now-standard Wayland protocol extension that describes the semantics of “windows”. It does so by assigning “roles” to Wayland surfaces and by providing the means to position these surfaces relative to one another. Together, that makes it possible to build tree-like hierarchies of windows, which is necessary to render, for example, a window that has a menu with submenus.

The XDG shell protocol was introduced in Wayland protocols a long time ago. The definition file mentions 2008, but I was unable to track its history back that far. The Git repository where these files are hosted these days was created in October 2015, and the earlier history is not preserved. (Maybe the older repository still exists somewhere?)

Back in 2015, the XDG shell interface was termed “unstable”. Wayland waives backwards compatibility for unstable protocols and requires the version to be stated explicitly in the names of the protocols and of their definition files. Following that rule, the XDG shell protocol was named xdg_shell_unstable_v5. Soon after the initial migration of the project, version 6 was introduced, named xdg_shell_unstable_v6. Since then, there have been two manifests in the unstable section, xdg-shell-unstable-v5.xml and xdg-shell-unstable-v6.xml. I assume versions prior to 5 were dropped at the migration.

This may be confusing, so let me clarify. Wayland uses several kinds of entities in its definitions. A protocol is a grouping entity: it is the root element of the XML file where it is defined. A protocol may contain a number of interfaces that describe the behaviour of objects. The real objects and calls are bound to interfaces, but the header files are generated for the protocol as a whole, so even if the client uses only one interface, it will import the whole protocol.

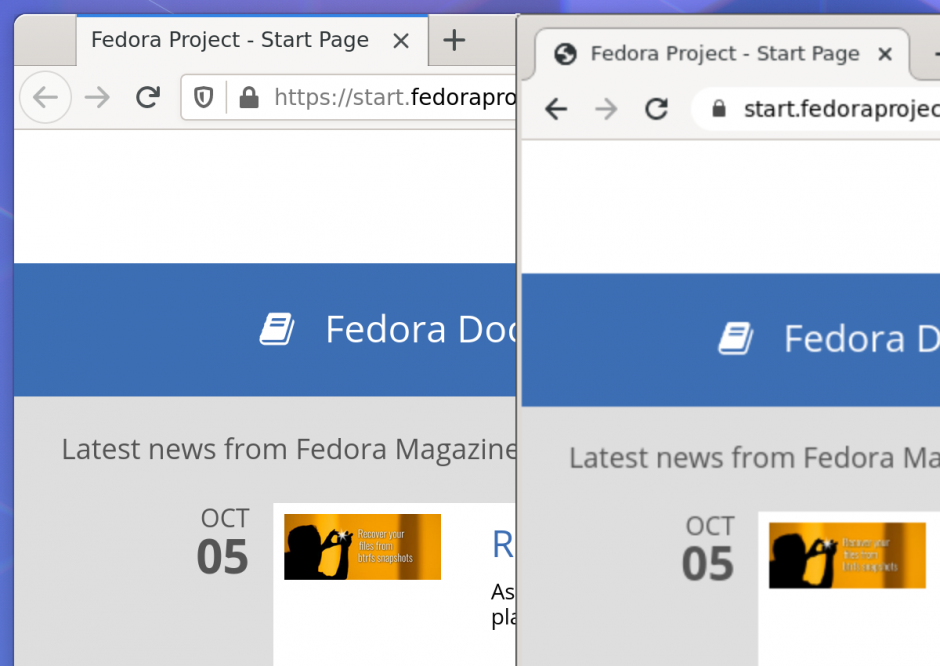
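The relationship between protocols and interfaces can be modelled in a few lines. This is only an illustration: the `Protocol` struct and both helper functions are hypothetical, and the interface lists are written out by hand from the stable and v6 definition files rather than parsed from the XML.

```cpp
#include <cassert>
#include <string>
#include <vector>

// A protocol groups interfaces; it corresponds to the root element of one
// XML definition file.
struct Protocol {
  std::string name;
  std::vector<std::string> interfaces;
};

// Hand-written listing of the two XDG shell protocols discussed here.
const std::vector<Protocol>& KnownProtocols() {
  static const std::vector<Protocol> protocols = {
      {"xdg_shell",
       {"xdg_wm_base", "xdg_positioner", "xdg_surface", "xdg_toplevel",
        "xdg_popup"}},
      {"xdg_shell_unstable_v6",
       {"zxdg_shell_v6", "zxdg_positioner_v6", "zxdg_surface_v6",
        "zxdg_toplevel_v6", "zxdg_popup_v6"}},
  };
  return protocols;
}

// Returns the protocol that defines the given interface, or nullptr if the
// interface is not listed. A client that wants just this one interface still
// pulls in the header generated for the whole protocol.
const Protocol* ProtocolDefining(const std::string& interface_name) {
  assert(!interface_name.empty());
  for (const Protocol& protocol : KnownProtocols()) {
    for (const std::string& candidate : protocol.interfaces) {
      if (candidate == interface_name) {
        return &protocol;
      }
    }
  }
  return nullptr;
}
```

Looking up zxdg_toplevel_v6 yields xdg_shell_unstable_v6, while xdg_toplevel yields xdg_shell: the version lives in the names on the unstable side and nowhere in the names on the stable side.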

The XDG shell allows the application to, quoting its description, “create desktop-style surfaces”. A number of desktop environments implemented support for the unstable protocol, providing feedback and proposing improvements. In 2017, the shell protocol was declared stable and got a new name: xdg_shell. The shell interface was renamed from zxdg_shell_v6 to xdg_wm_base. Since then, clients have had to choose between the two protocols or support both.

For stable protocols, the convention is simpler and easier to follow: changes are assumed to be backwards compatible, so the names of interfaces and protocols are fixed and no longer change. Version tracking is built into the protocol definition and uses the self-explanatory version and since attributes. The client can import one protocol and decide at run time which calls are possible and which are not, depending on the version exposed by the compositor.
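The run-time decision described above boils down to two comparisons. The sketch below is self-contained and does not use libwayland; in real code the bound version would come from the proxy object (for instance via `wl_proxy_get_version()`), and the threshold would come from the generated header. Both constants here are assumptions chosen for illustration, as are the function names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Highest interface version this hypothetical client was written against
// (assumed value).
constexpr uint32_t kMaxSupportedVersion = 5;

// Version in which a hypothetical request was introduced, i.e. the value of
// its `since` attribute in the protocol XML (assumed value).
constexpr uint32_t kRepositionSinceVersion = 3;

// A client binds to the lower of what the compositor advertises and what the
// client itself understands.
uint32_t VersionToBind(uint32_t advertised_by_compositor) {
  assert(advertised_by_compositor >= 1);
  return std::min(advertised_by_compositor, kMaxSupportedVersion);
}

// A request may be sent only if the bound version is at least the version
// named in its `since` attribute.
bool CanUseReposition(uint32_t bound_version) {
  return bound_version >= kRepositionSinceVersion;
}
```

With these assumed numbers, a compositor advertising version 2 rules the request out, while one advertising version 6 gets bound at 5 and allows it. No second protocol or second set of function names is needed.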

The stable version continued to evolve. Eventually, the unstable version became redundant, and in 2022 major Wayland compositors started to drop support for it. By November of last year we realised that the code implementing the unstable shell protocol in Chromium was no longer used by real clients. Of 30 thousand recorded uses of the Wayland backend, only 3 (yeah, just three) used the unstable interface. The time had come to get rid of the legacy code, more than one thousand lines of it.

Some bits of the support for alternative shell interfaces are preserved, though. A special factory class named ui::ShellObjectFactory is used to hide the implementation details of the shell objects. Before the unstable shell implementation was removed, the primary use of the factory was to select the XDG shell interface supported by the host. However, it also made it easy to integrate with alternative shells. For that purpose, mainly for the benefit of downstream projects, the factory will remain.
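As a rough idea of why such a factory helps downstream projects, here is a minimal sketch of the pattern. It is not the actual ui::ShellObjectFactory: every class and function name below is hypothetical, and "vendor_shell" stands for some alternative shell protocol that a downstream project might plug in.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical abstraction over a toplevel shell object.
class ToplevelWrapper {
 public:
  virtual ~ToplevelWrapper() = default;
  virtual std::string ProtocolName() const = 0;
};

// Hypothetical implementation backed by the stable XDG shell.
class XdgToplevel : public ToplevelWrapper {
 public:
  std::string ProtocolName() const override { return "xdg_shell"; }
};

// Hypothetical factory: callers ask for a toplevel and never learn which
// protocol backs it.
class SketchShellFactory {
 public:
  virtual ~SketchShellFactory() = default;
  virtual std::unique_ptr<ToplevelWrapper> CreateToplevel() {
    return std::make_unique<XdgToplevel>();
  }
};

// What a downstream project might add for its own shell (assumed names).
class VendorToplevel : public ToplevelWrapper {
 public:
  std::string ProtocolName() const override { return "vendor_shell"; }
};

class VendorShellFactory : public SketchShellFactory {
 public:
  std::unique_ptr<ToplevelWrapper> CreateToplevel() override {
    return std::make_unique<VendorToplevel>();
  }
};

// Window code depends only on the factory and the wrapper interface.
std::string DescribeWindow(SketchShellFactory& factory) {
  std::unique_ptr<ToplevelWrapper> toplevel = factory.CreateToplevel();
  assert(toplevel != nullptr);
  return "window backed by " + toplevel->ProtocolName();
}
```

The window code stays the same whichever factory it is handed, so replacing the shell means replacing the factory and nothing else.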