In this post I am going to talk about the implementation of the Media Source Extensions (known as MSE) in the WebKit ports that use GStreamer. These ports are WebKitGTK+, WebKitEFL and WebKitForWayland, though only the latter has the latest work-in-progress implementation. Of course we hope to upstream WebKitForWayland soon and with it, this backend for MSE and the one for EME.

My colleague Enrique at Igalia wrote a post about this about a week ago. I recommend you read it before continuing with mine to understand the general picture and the some of the issues that I managed to fix on that implementation. Come on, go and read it, I’ll wait.

One of the challenges here is something a bit unnatural in the GStreamer world. We have to process the stream information and then make some metadata available to the JavaScript app before playing instead of just pushing everything to a playing pipeline and being happy. For this we created the AppendPipeline, which processes the data and extracts that information and keeps it under control for the playback later.

The idea of the our AppendPipeline is to put a data stream into it and get it processed at the other side. It has an appsrc, a demuxer (qtdemux currently) and an appsink to pick up the processed data. Something tricky of the spec is that when you append data into the SourceBuffer, that operation has to block it and prevent with errors any other append operation while the current is ongoing, and when it finishes, signal it. Our main issue with this is that the the appends can contain any amount of data from headers and buffers to only headers or just partial headers. Basically, the information can be partial.

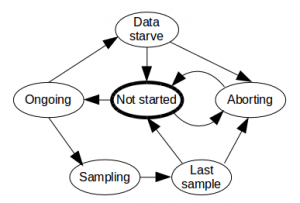

First I’ll present again Enrique’s AppendPipeline internal state diagram:

First let me explain the easiest case, which is headers and buffers being appended. As soon as the process is triggered, we move from Not started to Ongoing, then as the headers are processed we get the pads at the demuxer and begin to receive buffers, which makes us move to Sampling. Then we have to detect that the operation has ended and move to Last sample and then again to Not started. If we have received only headers we will not move to Sampling cause we will not receive any buffers but we still have to detect this situation and be able to move to Data starve and then again to Not started.

Our first approach was using two different timeouts, one to detect that we should move from Ongoing to Data starve if we did not receive any buffer and another to move from Sampling to Last sample if we stopped receiving buffers. This solution worked but it was a bit racy and we tried to find a less error prone solution.

We tried then to use custom downstream events injected from the source and at the moment they were received at the sink we could move from Sampling to Last sample or if only headers were injected, the pads were created and we could move from Ongoing to Data starve. It took some time and several iterations to fine tune this but we managed to solve almost all cases but one, which was receiving only partial headers and no buffers.

If the demuxer received partial headers and no buffers it stalled and we were not receiving any pads or any event at the output so we could not tell when the append operation had ended. Tim-Philipp gave me the idea of using the need-data signal on the source that would be fired when the demuxer ran out of useful data. I realized then that the events were not needed anymore and that we could handle all with that signal.

The need-signal is fired sometimes when the pipeline is linked and also when the the demuxer finishes processing data, regardless the stream contains partial headers, complete headers or headers and buffers. It works perfectly once we are able to disregard that first signal we receive sometimes. To solve that we just ensure that at least one buffer left the appsrc with a pad probe so if we receive the signal before any buffer was detected at the probe, it shall be disregarded to consider that the append has finished. Otherwise, if we have seen already a buffer at the probe we can consider already than any need-data signal means that the processing has ended and we can tell the JavaScript app that the append process has ended.

Both need-data signal and probe information come in GStreamer internal threads so we could use mutexes to overcome any race conditions. We thought though that deferring the operations to the main thread through the pipeline bus was a better idea that would create less issues with race conditions or deadlocks.

To finish I prefer to give some good news about performance. We use mainly the YouTube conformance tests to ensure our implementation works and I can proudly say that these changes reduced the time of execution in half!

That’s all folks!