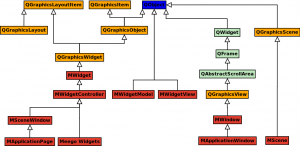

In case you’re not familiar with WebKit’s multiprocess model, allow me to explain some basics. In WebKitGTK and WPE we currently have three main processes that collaborate to render web pages (this is subject to change, probably with the addition of new processes):

- The UI process: this is the process of the application that instantiates the web view. For example, Cog when using WPE or Epiphany when using WebKitGTK. This process is usually in charge of interacting with the user/application and sending events and requests to the other processes.

- The network process: the goal of this process is to perform network requests for the different web processes and make the results available to them.

- The web process: this is the process that really performs the heavy lifting. It’s in charge of requesting the resources required by the page (through the network process), creating the DOM tree, executing the JS code, rendering the content, responding to user events, etc.

In a simple case, we would have a UI process, a web process and a network process working together to render a page. There are situations where (for reasons of security, performance, build options, etc.) the same UI process uses more than one web process at the same time, with all of them sharing the network process. I won’t go into details about all the possibilities here because that’s beyond the goal of this post (but it’s a good idea for a future post!). If you want more information about this, you can search for topics like process prewarm, process swap on navigation or service workers running in dedicated processes. In any case, at any given moment, one of those web processes is always the main one performing all the tasks mentioned before, and the others are helpers for specific tasks, so the situation is equivalent to the simpler case of a single UI process, a web process and a network process.

Developers know that processes have the bad habit of getting hung sometimes, and that can happen here as well to any of the processes. Unfortunately, in many cases that’s probably due to some kind of bug in the code executed by the processes and we can’t do much more about that than fixing the bug. But there’s a special kind of block that affects the web process only, and it’s related to the execution of JS code. Consider this simple JS script for example:

    setTimeout(function() {
        while (true) { }
    }, 1000);

This will start an infinite loop in the JS execution after one second, blocking the main thread of the web process and taking 100% of its execution time. The main thread, besides executing the JS code, is also in charge of processing incoming events or messages from the UI process, performing the layout, initiating redraws of the page, etc. But as it’s stuck in the JS loop, it won’t be able to perform any of those tasks, causing the web process to become unresponsive to user input or API calls that come through the UI process. There are other situations that could make the process unresponsive, but the execution of faulty JS is probably the most common one.
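To see the effect without actually hanging anything, here is a small sketch that uses a bounded version of the loop above and measures how late a pending timer fires. It is only an illustration: `measureTimerDelay` and its parameters are names made up for this example, and it runs in any JS engine with `setTimeout` and promises (Node.js, for instance), not specifically in a WebKit web process.

```javascript
// Measure how late a timer fires when the main thread is kept busy.
// `measureTimerDelay`, `timerMs` and `busyMs` are hypothetical names for this sketch.
function measureTimerDelay(timerMs, busyMs) {
  return new Promise(function (resolve) {
    const scheduledAt = Date.now();

    // Ask for a callback after `timerMs` milliseconds.
    setTimeout(function () {
      resolve(Date.now() - scheduledAt);
    }, timerMs);

    // Keep the thread busy, like the loop above but with an exit condition.
    const busyStart = Date.now();
    while (Date.now() - busyStart < busyMs) { }
  });
}

measureTimerDelay(10, 200).then(function (delay) {
  console.log('timer requested after 10 ms, fired after ' + delay + ' ms');
});
```

The timer callback cannot run until the busy loop returns, so the reported delay is never shorter than the busy period. With `while(true)` the loop never returns, and neither the timer nor anything else queued on that thread ever gets its turn.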

WebKit has some internal tools to detect these situations, which mainly consist of timeouts that mark the process as unresponsive when an expected reply from the web process is not received in time. But on WebKitGTK and WPE there wasn’t a way to notify the application using the web view that the web process had become unresponsive, or a way to recover from this situation (other than closing the application or the tab). This is fixed in trunk for both ports by adding two components to the WebKitWebView class:

- A new property named is-web-process-responsive: this property will signal responsiveness changes of the web process. The application using the web view can connect to the notifications of the object property as with any other GObject class, and can also get its value using the new webkit_web_view_get_is_web_process_responsive() API method.

- A new API method webkit_web_view_terminate_web_process() that can be used to kill the web process when it becomes unresponsive (but will also work with responsive ones). Any load performed after this call will spawn a new and shiny web process. When this method is used to kill a web process, the web view will emit the signal web-process-terminated with WEBKIT_WEB_PROCESS_TERMINATED_BY_API as the termination reason.
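To make the idea of a timeout-driven responsiveness flag more concrete, here is a toy model of it. This is not WebKit’s implementation: the class `ResponsivenessMonitor`, its methods and the 3000 ms timeout are all assumptions made for the sketch, and time is passed in explicitly so the behaviour is easy to follow.

```javascript
// Toy model of timeout-based hang detection. All names and values are
// hypothetical; WebKit's real internals are different.
class ResponsivenessMonitor {
  constructor(timeoutMs, onChange) {
    this.timeoutMs = timeoutMs;
    this.onChange = onChange;   // called with the new value on every change
    this.responsive = true;
    this.pendingSince = null;   // when the oldest unanswered message was sent
  }

  // A message that expects a reply was sent at time `now` (in ms).
  messageSent(now) {
    if (this.pendingSince === null) this.pendingSince = now;
  }

  // The expected reply arrived, so the process is alive.
  replyReceived() {
    this.pendingSince = null;
    this.setResponsive(true);
  }

  // Called periodically; flags the process if a reply is overdue.
  tick(now) {
    if (this.pendingSince !== null && now - this.pendingSince >= this.timeoutMs) {
      this.setResponsive(false);
    }
  }

  // Only notify when the value really changes, like a property notification.
  setResponsive(value) {
    if (value === this.responsive) return;
    this.responsive = value;
    this.onChange(value);
  }
}

const changes = [];
const monitor = new ResponsivenessMonitor(3000, (value) => changes.push(value));
monitor.messageSent(0);
monitor.tick(1000);      // reply not overdue yet, nothing happens
monitor.tick(3500);      // reply overdue, flagged as unresponsive
monitor.replyReceived(); // reply finally arrives, responsive again
console.log(changes);
```

The callback fires only on changes, once when the reply becomes overdue and once when it arrives, which is the same shape of notification an application gets from the is-web-process-responsive property.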

With these changes, the browser can now connect to the change notifications of the is-web-process-responsive property. If the web process becomes unresponsive, the browser can show a dialog informing the user of the problem and asking whether to wait or kill the process. If the user chooses to kill the process, the browser can use webkit_web_view_terminate_web_process() to kill the problematic process and then reload (or load a new page) to go back to a functional state.
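The flow just described could be sketched like this. The decision logic is plain JS; the method names on `webView` follow the C API with the `webkit_web_view_` prefix dropped, which is how I’d expect GObject-introspection bindings such as GJS to expose them (an assumption, so check your bindings). `askUser` and `makeFakeWebView` are hypothetical helpers that exist only so the sketch runs on its own.

```javascript
// React to a change in the responsiveness of the web process.
// `webView` must offer the three methods used below; `askUser` is a
// hypothetical callback that returns 'wait' or 'kill'.
function onResponsivenessChanged(webView, askUser) {
  if (webView.get_is_web_process_responsive()) return 'responsive';
  if (askUser() !== 'kill') return 'waiting';

  // Kill the hung process; the reload spawns a fresh one.
  webView.terminate_web_process();
  webView.reload();
  return 'terminated';
}

// Stand-in for a real web view that just records the calls it receives.
function makeFakeWebView(responsive) {
  const calls = [];
  return {
    calls,
    get_is_web_process_responsive: () => responsive,
    terminate_web_process: () => calls.push('terminate'),
    reload: () => calls.push('reload'),
  };
}

const hung = makeFakeWebView(false);
console.log(onResponsivenessChanged(hung, () => 'kill'), hung.calls);
```

In a real application the function would be called from a handler connected to the property notification, presumably the `notify::is-web-process-responsive` signal, and `askUser` would show the dialog. The application can also listen to web-process-terminated to confirm that the reason was WEBKIT_WEB_PROCESS_TERMINATED_BY_API.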

These changes, among other wonderful features we’re developing at Igalia, will be available in the upcoming 2.34 release of WebKitGTK and WPE. I hope they are useful for you 🙂

Happy coding!