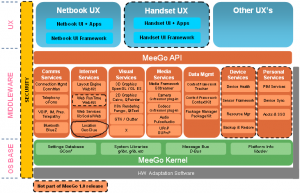

As you probably know, GeoClue is part of the Meego architecture as the Geolocation component. However, current plans are using the QtMobility API for UI applications and defining GeoClue as one of the available backends.

The QtMobility software implements a set of APIs to ease the development of UI software focused on mobile devices. It provides some interesting features and tools for a great variety of mobile oriented development areas:

- Contacts

- Bearer (Network Management)

- Location

- Messaging

- Multimedia

- Sensors

- Service Framework

- System Information

All those software pieces are a kind of abstraction to expose easy and comprehensive API’s to be used in the UI application developments. In regard to Geolocation, lets describe in detail the Location component.

It was recently announced the first public implementation of a GeoClue based backend for the QtMobility Location API. The starting point to implement the GeoClue backend, as described in the QtMobility documentation, is the QGeoPositionInfoSource abstract class. The implementation of this abstract class using GeoClue seems not too hard, however, the current GeoClue architecture has some limitations to fulfill the QtMobility specifications:

- The QtGeoPositionInfo class, defined for storing the Geolocation data retrieved by the selected backend (GeoClue in this case) manages together global location, direction and velocity.

- The GeoClue API has separated methods and classes for location, address and velocity. Independent signals are emitted whenever such parameters are changed.

- The GeoClue Velocity interface is not implemented in the GeoClue Master provider.

- Even though is not too hard to implement the abstract methods of the QGeoPositionInfoSource class, the start/stop updating methods are not very efficient in regard to battery and memory consumption. There is not easy or direct way to remove one provider when is not used.

As part of the Igalia’s plans on Meego, I’ve been working in the implementation of such GeoClue based backend for the Meego QtMobility framework. Now that part of my work has been already done, it’s time to share efforts and contribute to the public repository with some patches and performance reports I’ve got during the last months. Some work is still needed before releasing my work, but I hope I will be able to send something in the following weeks, so stay tunned.

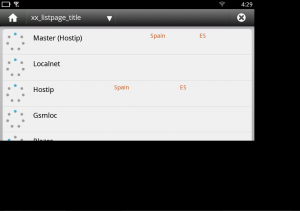

Even though the code is not ready for being public, I could show a snapshots of the test application I implemented for the Meego Handset platform using the Meego Touch framework:

The purpose of this application would be monitoring the DBus communication between the different location providers, creating some performance tests and evaluating the impact on a Mobile platform.