Structure of Chrome iOS (w/ Blink)

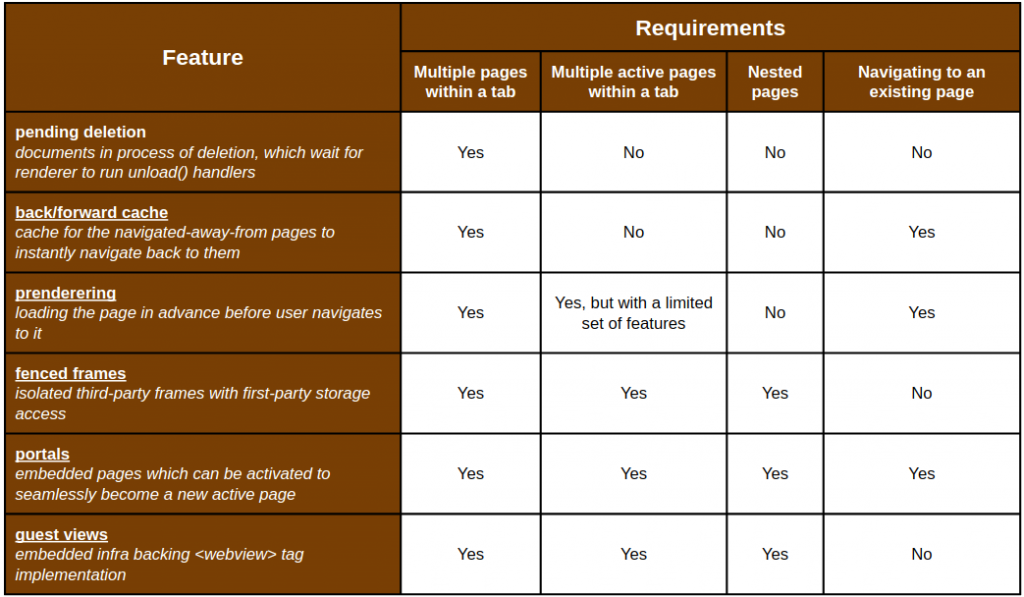

First, let’s look at the overall structure of Chrome iOS, which this simple diagram illustrates. The UI components are placed in the //ios/chrome directory, which functions similarly to the //chrome layer in other implementations. The //ios/web layer provides APIs for initialization, content navigation, presenting UI callbacks, saving or restoring navigation sessions and browser data, and more. The //ios/web/public directory defines the public APIs, while various other directories within //ios/web implement them. The implementations of these public APIs invoke WebKit APIs to provide the necessary functionality.

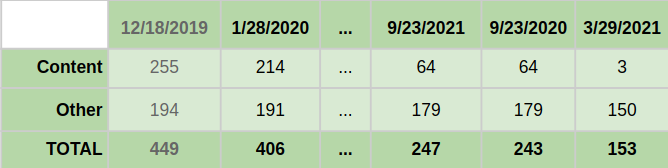

For Chrome iOS based on Blink, the developers decided last year to reuse the existing iOS Chrome UI implementation. As a result, much of the main development happened in the //ios/web/content directory (the green rectangle in the diagram), which was created to implement the public APIs using Blink. As the diagram below shows, they added the content directory to //ios/web and implemented the public APIs using Blink’s content APIs. Notably, they introduced ContentWebState in //ios/web/content/web_state as a prototype implementation of WebState that replaces the existing class. However, as shown in the diagram, the directory currently includes only five components: web_state, navigation, UI, init, and js_messaging. More components therefore still need to be implemented for Chrome iOS on Blink.
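To make the layering concrete, here is a minimal C++ sketch of the idea: the UI layer talks only to a public interface, and the engine behind it can be swapped. Everything in it is a simplified, hypothetical stand-in (the tiny `WebState` interface, `WebKitBackedWebState`, `BlinkBackedWebState`, `CreateWebState`, and `OpenPage` are illustrative names, not the real Chromium classes or signatures), and the real WebState API is far larger.

```cpp
#include <memory>
#include <string>

// Hypothetical, heavily simplified stand-in for the public API that
// //ios/web/public exposes. Not the real Chromium interface.
class WebState {
 public:
  virtual ~WebState() = default;
  virtual void LoadUrl(const std::string& url) = 0;
  virtual std::string GetLastCommittedUrl() const = 0;
  virtual std::string EngineName() const = 0;
};

// Stand-in for the existing implementation that calls into WebKit.
class WebKitBackedWebState : public WebState {
 public:
  void LoadUrl(const std::string& url) override { url_ = url; }
  std::string GetLastCommittedUrl() const override { return url_; }
  std::string EngineName() const override { return "WebKit"; }

 private:
  std::string url_;
};

// Stand-in for a ContentWebState-like class that would call Blink's
// content APIs instead (assumption: same public surface, other engine).
class BlinkBackedWebState : public WebState {
 public:
  void LoadUrl(const std::string& url) override { url_ = url; }
  std::string GetLastCommittedUrl() const override { return url_; }
  std::string EngineName() const override { return "Blink"; }

 private:
  std::string url_;
};

enum class Engine { kWebKit, kBlink };

// Hypothetical factory: picks the backend behind the shared interface.
std::unique_ptr<WebState> CreateWebState(Engine engine) {
  if (engine == Engine::kBlink) {
    return std::make_unique<BlinkBackedWebState>();
  }
  return std::make_unique<WebKitBackedWebState>();
}

// UI-layer code (the //ios/chrome role in this sketch) depends only on
// WebState, so it does not change when the engine does.
std::string OpenPage(WebState& web_state, const std::string& url) {
  web_state.LoadUrl(url);
  return web_state.EngineName() + ": " + web_state.GetLastCommittedUrl();
}
```

Calling `OpenPage(*CreateWebState(Engine::kBlink), url)` and the same call with `Engine::kWebKit` exercise identical UI-side code, which is the property that makes reusing the existing iOS Chrome UI possible.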

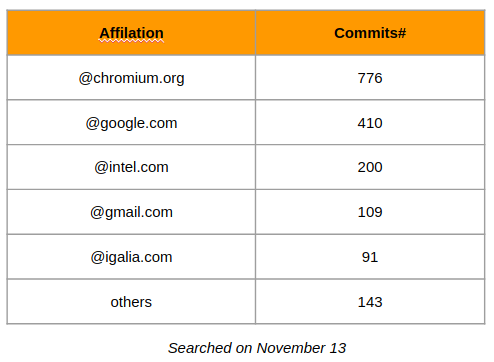

Igalia Contributions

Igalia has been contributing to this project since the community made it public. We’ve worked mainly on UI-related components, e.g. the file and color choosers, the context menu, the select list popup, and integration with the Chrome iOS UI. The pictures below are screen captures of the chooser implementations that we contributed. We also helped with multimedia features such as video screen capture, hardware encoding/decoding, and audio recording.

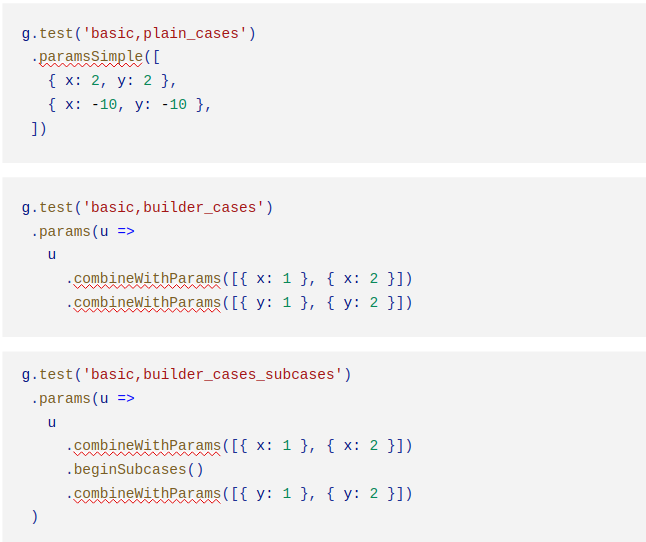

We’ve worked on several kinds of tests (e.g. unit tests, browser tests, and web tests) and filtered out unsupported and failing tests. Specifically, we implemented the infrastructure to run the web tests on the simulator. For reference, at the beginning of this year the test coverage of the web tests on iOS Blink was about 72.2%, and the pass ratio among the working tests was 91.83%. Since then, we’ve been maintaining the bot for Chrome iOS on Blink, because keeping the build and the tests running is crucial to bringing up Blink for Chrome iOS.

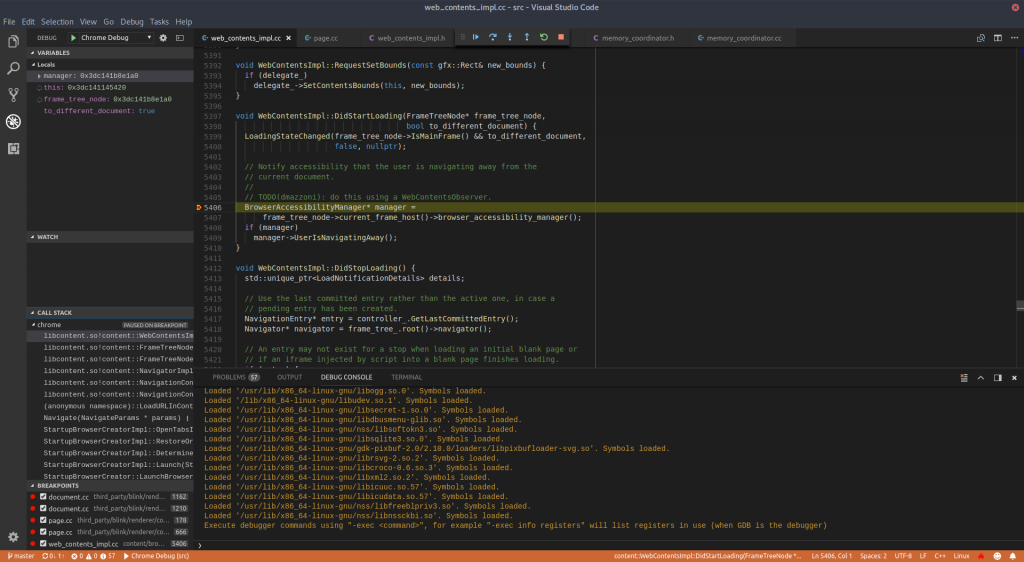

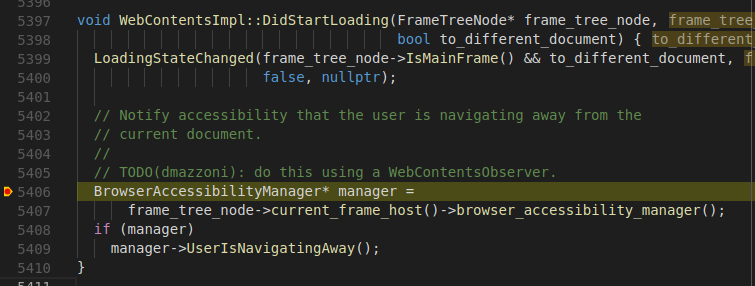

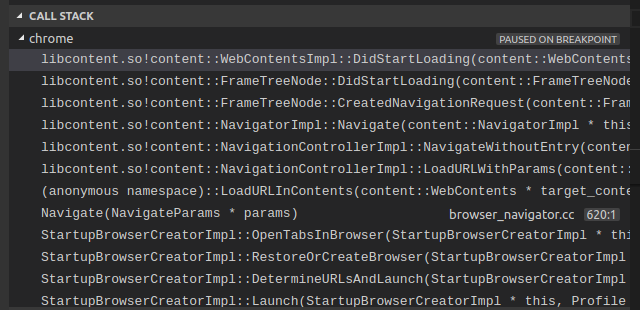

Besides, we added support for remote debugging with DevTools on Blink for iOS. Developers can now run DevTools remotely on a host machine (e.g. a Mac) and inspect Chrome or Content Shell during development.

Moreover, we’ve worked on graphics, specifically compositing and rendering. For instance, we added support for Metal on ANGLE and fixed bugs in the graphics layers.

You can find the detailed contributions patch list here.

The major changes for H1 2024

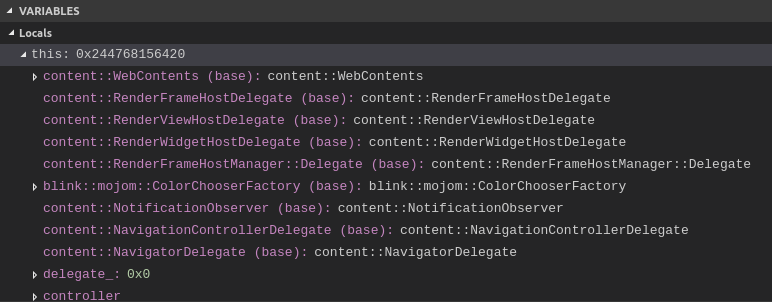

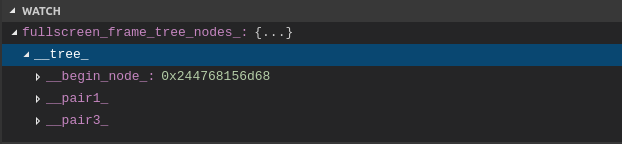

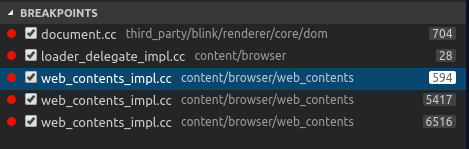

Now, let’s review the major changes during the first half of this year. Firstly, the minimum iOS SDK version was bumped to 17.4, which supports the BrowserEngineKit library, and the browser tests and unit tests began to run on the ios-blink bot with the 17.4 SDK.

As noted earlier, ContentWebState was introduced as a prototype implementation of WebState so that the existing iOS Chrome UI could be reused. During H1 2024, new methods were added to it and previously empty methods were implemented, for example GetVirtualKeyboardHeight, OnVisibilityChanged, DidFinishLoad, and DidFailLoad.
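As a rough illustration of what filling in a previously empty method can mean, the sketch below shows a class that receives engine-side callbacks and forwards load results to UI-side observers. It borrows only the method names mentioned above (`DidFinishLoad`, `DidFailLoad`, `OnVisibilityChanged`). The signatures, the `LoadObserver` interface, `SketchContentWebState`, and `RecordingObserver` are assumptions made for this example and do not mirror the real Chromium code.

```cpp
#include <string>
#include <vector>

// Hypothetical observer interface standing in for the UI-side listeners.
class LoadObserver {
 public:
  virtual ~LoadObserver() = default;
  virtual void PageLoaded(const std::string& url, bool success) = 0;
};

// Simplified stand-in for a ContentWebState-like class. Signatures are
// assumptions for illustration only.
class SketchContentWebState {
 public:
  void AddObserver(LoadObserver* observer) { observers_.push_back(observer); }

  // Engine-side callbacks: an empty body would silently drop the event,
  // so the "implemented" versions translate it for the observers.
  void DidFinishLoad(const std::string& url) { Notify(url, true); }
  void DidFailLoad(const std::string& url) { Notify(url, false); }

  // Tracks whether the page is currently shown.
  void OnVisibilityChanged(bool visible) { visible_ = visible; }
  bool IsVisible() const { return visible_; }

 private:
  void Notify(const std::string& url, bool success) {
    for (LoadObserver* observer : observers_) {
      observer->PageLoaded(url, success);
    }
  }

  std::vector<LoadObserver*> observers_;  // not owned
  bool visible_ = false;
};

// Test helper that records what it was told.
class RecordingObserver : public LoadObserver {
 public:
  void PageLoaded(const std::string& url, bool success) override {
    log.push_back((success ? "ok " : "fail ") + url);
  }
  std::vector<std::string> log;
};
```

Registering a `RecordingObserver` and then calling `DidFinishLoad` or `DidFailLoad` appends one entry per call to its `log`. With empty method bodies the UI would never learn that a load had finished or failed.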

The BrowserEngineKit APIs, which Apple announced to support third-party browser engines on iOS and iPadOS, have been applied to JIT, GPU, network, and content process creation.

As mentioned above, Igalia implemented a color chooser, a file chooser, and a context menu during the period.

Lastly, the package size was reduced by removing duplicated resources.

Remaining Tasks

We’ve briefly looked at the current status of the project, but many functionalities still need to be supported. For example, UI features such as print preview, download, text selection, request desktop site, zoom text, translate, find in page, and touch events are either not yet implemented or not functioning correctly. Moreover, there are numerous failing or skipped tests among the unit tests, browser tests, and web tests. Enabling these tests and getting them to pass should also be a key focus moving forward.

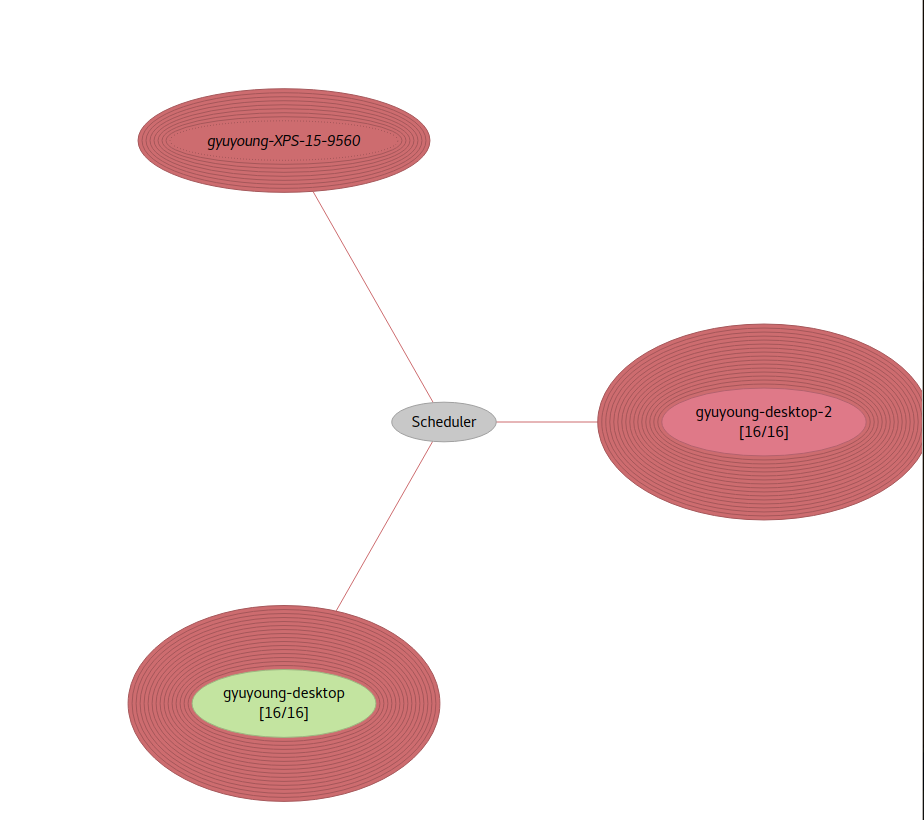

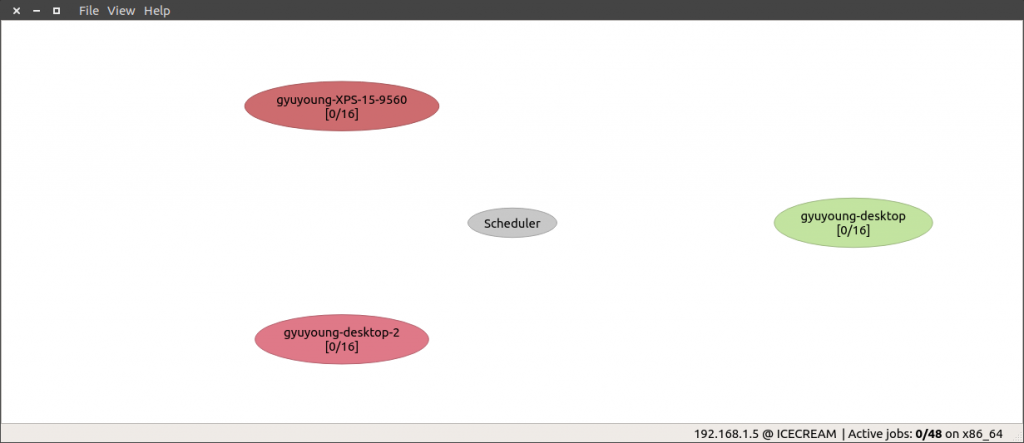

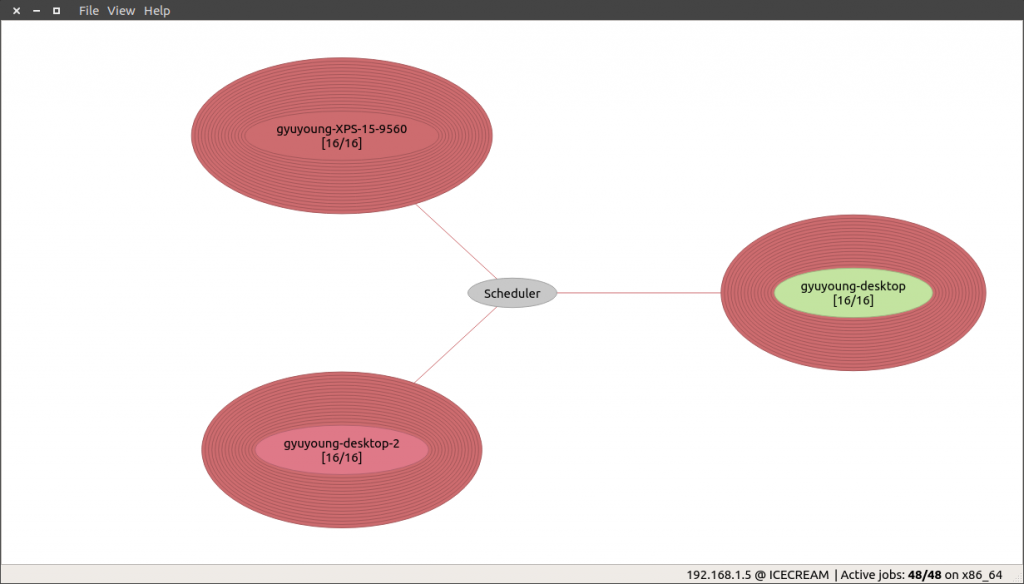

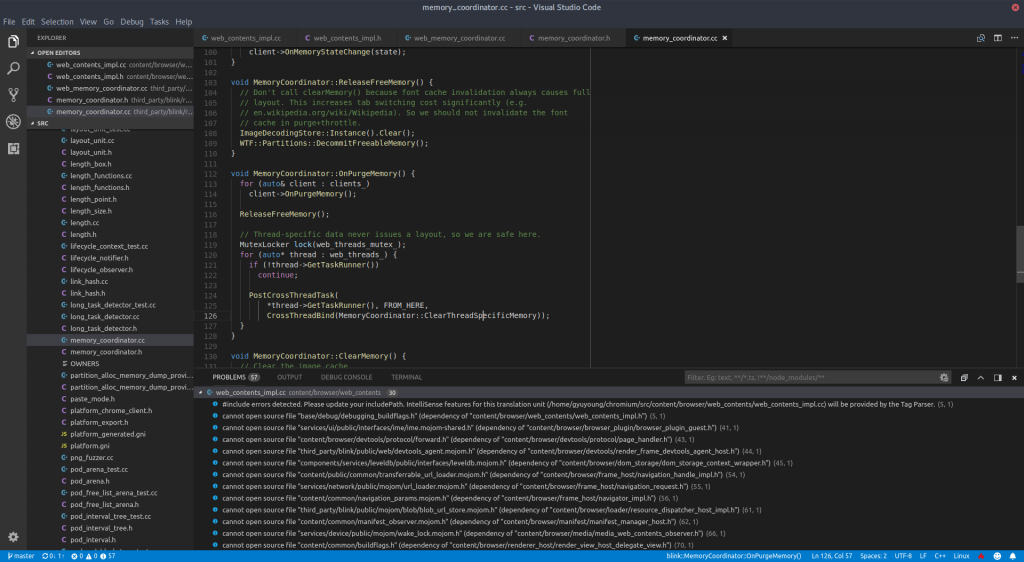

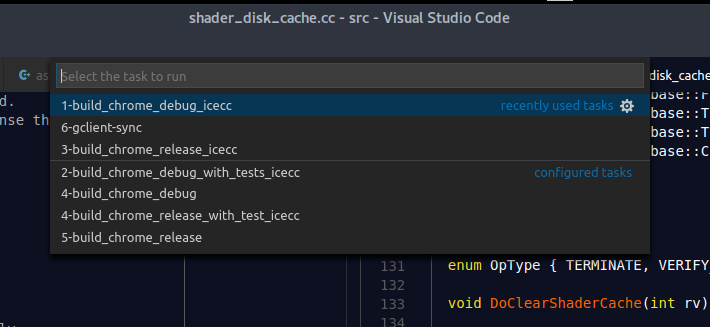

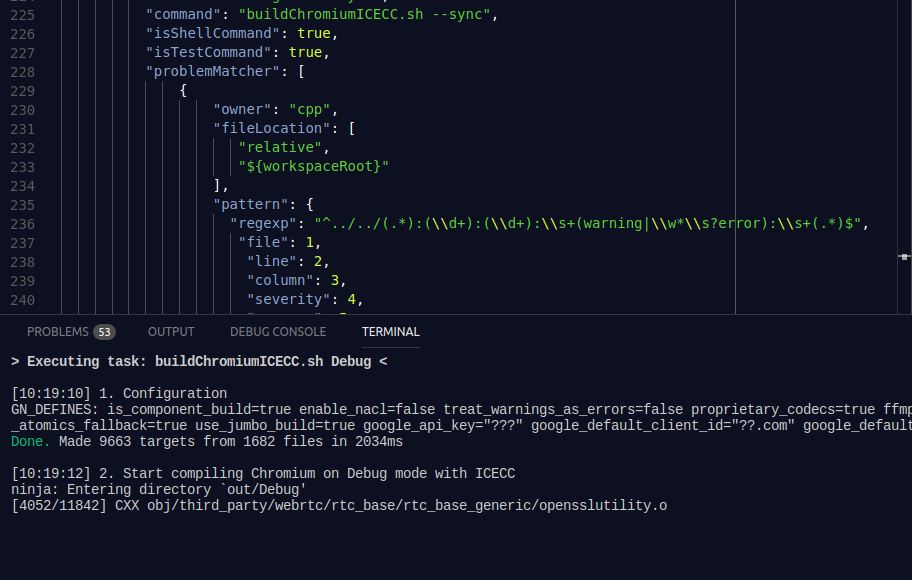

On the ICECC monitor, you can see that VS Code builds Chromium by using ICECC.
