You probably know Grilo. It’s a GLib-based framework created to make it easier for application developers to search for media. A couple of weeks ago I looked at its code a bit, and I really liked its plugin-based design and how easy it was to use. And of course, I wanted to try it out 🙂

Some of my colleagues had already created examples with Grilo, like Iago, who added it to Totem, or José, who created a Clutter-based player. I wanted to do something different, and as I live between the Qt and the GNOME worlds, I decided to develop a Qt-based Grilo application that uses Phonon to play the media found by Grilo. If you are interested in the full source, you can clone my public repository at Igalia (http://git.igalia.com/user/magomez/qtgrilo.git).

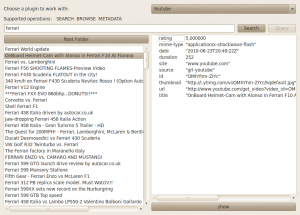

So, step one: create the UI. I got a bit of inspiration from the grilo-test-ui program available with the Grilo sources, and this was the result:

You can choose the plugin to use from the combo box at the top. When a plugin is selected, the operations it supports are listed. In the image, the YouTube plugin supports searching, browsing and metadata operations.

The list at the bottom left shows the available media, which can be the result of a search, query or browse operation. Double-clicking a list item browses it if it’s a container, or shows its metadata if it’s a media file. If the selected media has a URL field, pressing the Show button plays it.

Step two: connect Grilo and the UI. This is really simple. First, add each plugin’s configuration to the plugin registry (for example, the YouTube one):

```cpp
config = grl_config_new ("grl-youtube", NULL);
grl_config_set_api_key (config, YOUTUBE_KEY);
grl_plugin_registry_add_config (registry, config);
```

Then ask the registry for the available sources and add them to the plugin combo box so the user can select one:

```cpp
GrlMediaPlugin **sources;

sources = grl_plugin_registry_get_sources(registry, FALSE);

int i = 0;
while (sources[i]) {
    pluginCombo->addItem(grl_metadata_source_get_name(GRL_METADATA_SOURCE(sources[i])),
                         qVariantFromValue(sources[i]));
    i++;
}

g_free(sources);
```
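The loop relies on grl_plugin_registry_get_sources() returning a NULL-terminated array, and the code frees only the array itself with g_free(). Stripped of Grilo and Qt, the walk looks like the plain C++ sketch below; `forEachNonNull` and `FakeSource` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Calls fn(element) for each entry of a NULL-terminated array of pointers,
// stopping at the first null entry, and returns how many entries it visited.
template <typename T, typename Fn>
std::size_t forEachNonNull(T *const *array, Fn fn) {
    if (array == nullptr) {
        return 0;  // treat a missing array as empty
    }
    std::size_t count = 0;
    for (T *const *it = array; *it != nullptr; ++it) {
        fn(*it);
        ++count;
    }
    return count;
}

// Hypothetical stand-in for a plugin object: it only carries a name.
struct FakeSource {
    std::string name;
};
```

For example, with `FakeSource *sources[] = {&a, &b, nullptr};` the helper visits `a` and `b` and returns 2, just as the `while` loop above stops at the terminating NULL.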

Be careful here: to store pointers to non-Qt types in a QVariant, you first need to declare those types with Q_DECLARE_METATYPE so that Qt knows about them. In this code, I declared the two types I stored in QVariants:

```cpp
Q_DECLARE_METATYPE(GrlMediaPlugin*)
Q_DECLARE_METATYPE(GrlMedia*)
```

And that’s it.

Then, when the user selects a source, ask for its supported operations. If browsing is supported, start a browse operation to fill the browser list with the contents of the root container:

```cpp
GrlSupportedOps ops;
QString opsString;  // supported operations, shown in operationsLabel

ops = grl_metadata_source_supported_operations(GRL_METADATA_SOURCE(currentPlugin));

if (ops & GRL_OP_SEARCH) {
    opsString += " SEARCH";
    searchButton->setEnabled(true);
} else {
    searchButton->setEnabled(false);
}

if (ops & GRL_OP_QUERY) {
    opsString += " QUERY";
    queryButton->setEnabled(true);
} else {
    queryButton->setEnabled(false);
}

if (ops & GRL_OP_BROWSE) {
    opsString += " BROWSE";
    browseButton->setEnabled(true);
    browse();  // fill the browser list with the root container's contents
} else {
    browseButton->setEnabled(false);
}

if (ops & GRL_OP_METADATA) {
    opsString += " METADATA";
}

operationsLabel->setText(opsString);
```
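Each GRL_OP_* value is a separate bit, so the code above tests them independently with `&`. The sketch below isolates that logic from Qt and Grilo; the `SupportedOps` values and the `OpsUiState`/`describeOps` names are hypothetical, and the real flag values come from the Grilo headers.

```cpp
#include <cassert>
#include <string>

// Hypothetical bit flags illustrating the idea behind GrlSupportedOps;
// the real GRL_OP_* values are defined by Grilo and may differ.
enum SupportedOps : unsigned {
    OP_NONE     = 0,
    OP_METADATA = 1u << 0,
    OP_BROWSE   = 1u << 1,
    OP_SEARCH   = 1u << 2,
    OP_QUERY    = 1u << 3,
};

// What the UI would show and enable for a given set of operations.
struct OpsUiState {
    std::string label;   // text for the operations label
    bool search = false; // search button enabled?
    bool query = false;  // query button enabled?
    bool browse = false; // browse button enabled?
};

// Tests each bit on its own, in the same order as the Qt code.
OpsUiState describeOps(unsigned ops) {
    OpsUiState state;
    if (ops & OP_SEARCH)   { state.label += " SEARCH"; state.search = true; }
    if (ops & OP_QUERY)    { state.label += " QUERY";  state.query = true; }
    if (ops & OP_BROWSE)   { state.label += " BROWSE"; state.browse = true; }
    if (ops & OP_METADATA) { state.label += " METADATA"; }
    return state;
}
```

With the search, browse and metadata bits set, as for the YouTube plugin in the screenshot, the label comes out as `" SEARCH BROWSE METADATA"` and only the search and browse buttons are enabled.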

Step three: implement the browse, search and query operations. Again, this is a simple process once you understand how Grilo works, and the three operations are implemented in much the same way. Basically, you call grl_media_source_search(), grl_media_source_query() or grl_media_source_browse(). Besides some flags and parameters for the operation, you pass the string to search for or query (or the container to browse) and a callback function. This callback is called whenever a result arrives, and inside it you must decide whether to launch a new search/query/browse to get the next chunk of results. In addition, I add each result to the browser list so the user can interact with it.
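The chunking logic is essentially pagination: request BROWSE_CHUNK_SIZE results from an offset, and while chunks keep coming back full and the offset stays below BROWSE_MAX_COUNT, request the next one. Here is a synchronous plain C++ sketch of that loop; `fetchChunk` and `fetchAll` are hypothetical names, `fetchChunk` stands in for a Grilo operation, and the real code is asynchronous and makes the same decision inside the result callback.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <vector>

// Hypothetical stand-in for one search/query/browse call: returns at most
// `count` results starting at `offset`.
using FetchChunk =
    std::function<std::vector<std::string>(std::size_t offset, std::size_t count)>;

// Keeps requesting chunks while each one comes back full and the offset
// stays below maxCount, mirroring the relaunch condition in the post.
std::vector<std::string> fetchAll(const FetchChunk &fetchChunk,
                                  std::size_t chunkSize, std::size_t maxCount) {
    std::vector<std::string> all;
    if (chunkSize == 0) {
        return all;  // nothing sensible to request
    }
    std::size_t offset = 0;
    for (;;) {
        std::vector<std::string> chunk = fetchChunk(offset, chunkSize);
        all.insert(all.end(), chunk.begin(), chunk.end());
        offset += chunk.size();
        // A short chunk means the source has no more results.
        if (chunk.size() < chunkSize || offset >= maxCount) {
            break;
        }
    }
    return all;
}
```

Treating a short chunk as the end of the results means one extra (empty) request is made whenever the total is an exact multiple of the chunk size; the search code in this post behaves the same way.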

As I explained in my last post, a static class method can be used as a GObject signal callback, and the same trick works for Grilo’s result callbacks. When the callback is called, it receives the class instance as the user_data parameter, so from inside the static method I can call the instance method I need. As an example, these are the three functions that implement the search operation: search() starts the operation, searchFinishedCB() is the result callback, and searchFinished() is the instance method that adds the result to the browser and relaunches the search if needed.

```cpp
void TestWindow::search()
{
    cancelCurrentOperation();
    clearBrowser();

    // A new search starts paging from the beginning
    operationOffset = 0;
    operationResults = 0;

    // Keep a copy of the search text (UTF-8, as Grilo expects);
    // it is reused when the search is relaunched
    string = g_strdup(searchEdit->text().toUtf8().constData());

    currentOpId = grl_media_source_search(GRL_MEDIA_SOURCE(currentPlugin),
                                          string,
                                          grl_metadata_key_list_new(GRL_METADATA_KEY_ID,
                                                                    GRL_METADATA_KEY_TITLE,
                                                                    GRL_METADATA_KEY_CHILDCOUNT,
                                                                    NULL),
                                          0,
                                          BROWSE_CHUNK_SIZE,
                                          (GrlMetadataResolutionFlags)BROWSE_FLAGS,
                                          searchFinishedCB,
                                          this);
}

// Static result callback: Grilo calls it whenever a result arrives
void TestWindow::searchFinishedCB(GrlMediaSource *source,
                                  guint search_id,
                                  GrlMedia *media,
                                  guint remaining,
                                  gpointer user_data,
                                  const GError *error)
{
    if (!error && media) {
        // user_data is the TestWindow passed to grl_media_source_search()
        TestWindow *win = static_cast<TestWindow*>(user_data);
        win->searchFinished(search_id, media, remaining);
    }
}

void TestWindow::searchFinished(guint search_id,
                                GrlMedia *media,
                                guint remaining)
{
    QString name = QString::fromUtf8(grl_media_get_title(media));
    QStandardItem *item = new QStandardItem();

    // Containers are shown in bold, followed by their child count
    if (GRL_IS_MEDIA_BOX(media)) {
        QFont font;
        font.setBold(true);
        item->setFont(font);

        gint children = grl_media_box_get_childcount(GRL_MEDIA_BOX(media));
        if (children == GRL_METADATA_KEY_CHILDCOUNT_UNKNOWN) {
            name += QString(" (?)");
        } else {
            name += QString(" (%1)").arg(children);
        }
    }

    item->setText(name);
    item->setData(qVariantFromValue(media));
    item->setEditable(false);
    browseModel->appendRow(item);

    operationResults++;

    // Last result of this chunk: decide whether to request the next one
    if (remaining == 0) {
        operationOffset += operationResults;
        if (operationResults >= BROWSE_CHUNK_SIZE &&
            operationOffset < BROWSE_MAX_COUNT) {
            operationResults = 0;
            /* relaunch search */
            currentOpId =
                grl_media_source_search(GRL_MEDIA_SOURCE(currentPlugin),
                                        string,
                                        grl_metadata_key_list_new(GRL_METADATA_KEY_ID,
                                                                  GRL_METADATA_KEY_TITLE,
                                                                  GRL_METADATA_KEY_CHILDCOUNT,
                                                                  NULL),
                                        operationOffset,
                                        BROWSE_CHUNK_SIZE,
                                        (GrlMetadataResolutionFlags)BROWSE_FLAGS,
                                        searchFinishedCB,
                                        this);
        }
    }
}
```
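searchFinishedCB() works because a static member function has no implicit `this`, so its address can be passed wherever a plain function pointer is expected, while the instance travels separately through user_data. The self-contained sketch below shows the same trampoline pattern without Grilo; `ResultCallback`, `fakeSearch` and `ResultCollector` are hypothetical names. Strictly speaking, C++ does not guarantee that a static member function has C language linkage, but mainstream compilers accept this idiom.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical C-style callback type in the spirit of Grilo's result
// callbacks: a plain function pointer plus an opaque user_data pointer.
typedef void (*ResultCallback)(const char *result, unsigned remaining,
                               void *user_data);

// Hypothetical C-style "library" call that reports each result in turn.
void fakeSearch(const std::vector<std::string> &results,
                ResultCallback callback, void *user_data) {
    unsigned remaining = static_cast<unsigned>(results.size());
    for (const std::string &r : results) {
        --remaining;  // the last result is reported with remaining == 0
        callback(r.c_str(), remaining, user_data);
    }
}

class ResultCollector {
public:
    void start(const std::vector<std::string> &results) {
        // Pass `this` as user_data so the static trampoline can find us again.
        fakeSearch(results, &ResultCollector::resultCB, this);
    }
    const std::vector<std::string> &items() const { return items_; }
    bool finished() const { return finished_; }

private:
    // Static member: no `this`, so its address fits a plain function pointer.
    static void resultCB(const char *result, unsigned remaining, void *user_data) {
        static_cast<ResultCollector *>(user_data)->onResult(result, remaining);
    }
    // Ordinary member function that does the real work on the instance.
    void onResult(const char *result, unsigned remaining) {
        items_.push_back(result);
        if (remaining == 0) {
            finished_ = true;
        }
    }

    std::vector<std::string> items_;
    bool finished_ = false;
};
```

Calling `collector.start({"a", "b", "c"})` routes all three results through `resultCB` into `onResult`, and the collector marks itself finished when `remaining` reaches 0.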

After implementing the search, query and browse operations, I implemented the metadata operation as well, so when the user selects an element in the browser, its metadata is retrieved and shown. Its implementation is similar to the browse/query/search operations, but unlike them it doesn’t need to be relaunched.

The next and final step was playing the media. I started with video and audio, which was really easy with Phonon:

```cpp
void TestWindow::playVideo()
{
    // The video window deletes itself, and its Phonon children, when closed,
    // which also stops playback
    Phonon::VideoWidget *videoWidget = new Phonon::VideoWidget();
    videoWidget->setAttribute(Qt::WA_DeleteOnClose);

    Phonon::MediaObject *mediaObject = new Phonon::MediaObject(videoWidget);
    mediaObject->setCurrentSource(Phonon::MediaSource(QUrl(QString::fromUtf8(grl_media_get_url(currentMedia)))));

    Phonon::AudioOutput *audioOutput = new Phonon::AudioOutput(Phonon::VideoCategory, videoWidget);
    Phonon::createPath(mediaObject, audioOutput);
    Phonon::createPath(mediaObject, videoWidget);

    videoWidget->show();
    mediaObject->play();
}

void TestWindow::playAudio()
{
    // A modal dialog shows the title; the Phonon objects are its children,
    // so they are destroyed (stopping playback) when the dialog goes away
    QMessageBox msgBox;
    msgBox.setText(QString("Playing %1").arg(QString::fromUtf8(grl_media_get_title(currentMedia))));

    Phonon::MediaObject *mediaObject = new Phonon::MediaObject(&msgBox);
    mediaObject->setCurrentSource(Phonon::MediaSource(QUrl(QString::fromUtf8(grl_media_get_url(currentMedia)))));

    Phonon::AudioOutput *audioOutput = new Phonon::AudioOutput(Phonon::MusicCategory, &msgBox);
    Phonon::createPath(mediaObject, audioOutput);

    mediaObject->play();
    msgBox.exec();
}
```

Understanding how Phonon works is quite easy if you have learned GStreamer first, as the concepts are almost the same (although Phonon is far easier to use). Basically, you create a MediaObject and tell it where to get the data from. Then you create an AudioOutput for the audio and a VideoWidget for the video, connect them to the MediaObject with createPath(), and start playback. I was a bit short of time to implement a more full-featured player, but I wanted to provide a way to stop playback once started, so it stops when the output window (for a video) or the dialog showing the title (for an audio file) is closed.

And finally, the image files. Opening local files is trivial, but remote ones need to be downloaded first. To do so, you use a QNetworkAccessManager, the Qt class in charge of network access. Just call its get() method to make a request, and it will emit its finished() signal when the reply has arrived, as you can see in the code:

```cpp
netManager = new QNetworkAccessManager(this);
connect(netManager, SIGNAL(finished(QNetworkReply*)),
        this, SLOT(netRequestFinished(QNetworkReply*)));

...

void TestWindow::playImage()
{
    QUrl url(QString::fromUtf8(grl_media_get_url(currentMedia)));

    if (url.scheme() == "file") {
        // Local image: load it directly from disk
        QScrollArea *area = new QScrollArea();
        area->setAttribute(Qt::WA_DeleteOnClose);
        QLabel *label = new QLabel();
        label->setPixmap(QPixmap(url.toLocalFile()));
        area->setWidget(label);
        area->show();
    } else {
        // Remote image: download it; netRequestFinished() runs when done
        netManager->get(QNetworkRequest(url));
    }
}

...

void TestWindow::netRequestFinished(QNetworkReply *reply)
{
    // Nothing to show if the download failed
    if (reply->error() != QNetworkReply::NoError) {
        reply->deleteLater();
        return;
    }

    QScrollArea *area = new QScrollArea();
    area->setAttribute(Qt::WA_DeleteOnClose);
    QLabel *label = new QLabel();
    QPixmap pix;
    pix.loadFromData(reply->readAll());
    label->setPixmap(pix);
    area->setWidget(label);
    area->show();

    // Qt requires deleteLater() here: the reply must not be deleted in this slot
    reply->deleteLater();
}
```
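playImage() chooses between loading from disk and downloading purely from the URL scheme. The standard C++ sketch below makes that decision explicit; `urlScheme`, `classifyImageUrl` and `ImageSource` are hypothetical names, the parsing follows the RFC 3986 scheme syntax, and unlike the Qt code (which relies on QUrl::scheme() and sends everything that isn't `file` to the network) it also rejects strings that have no scheme at all.

```cpp
#include <cassert>
#include <cctype>
#include <string>

// How the viewer would obtain an image, based on its URL.
enum class ImageSource { LocalFile, Download, Invalid };

// Returns the lower-cased scheme (the part before the first ':'), or an
// empty string if there is none. RFC 3986 scheme syntax:
// ALPHA *( ALPHA / DIGIT / "+" / "-" / "." )
std::string urlScheme(const std::string &url) {
    std::string::size_type colon = url.find(':');
    if (colon == std::string::npos || colon == 0) {
        return "";
    }
    std::string scheme;
    for (std::string::size_type i = 0; i < colon; ++i) {
        unsigned char c = static_cast<unsigned char>(url[i]);
        bool ok = std::isalpha(c) ||
                  (i > 0 && (std::isdigit(c) || c == '+' || c == '-' || c == '.'));
        if (!ok) {
            return "";
        }
        scheme += static_cast<char>(std::tolower(c));
    }
    return scheme;
}

// Mirrors playImage(): "file" URLs are opened directly, others are fetched.
ImageSource classifyImageUrl(const std::string &url) {
    std::string scheme = urlScheme(url);
    if (scheme.empty()) {
        return ImageSource::Invalid;
    }
    return scheme == "file" ? ImageSource::LocalFile : ImageSource::Download;
}
```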

And that’s all… well, almost all. For some reason I haven’t found yet, opening YouTube videos doesn’t work, even though I’ve checked the URLs and the videos play fine when they are stored on the computer. It might be a bug in Phonon, but I haven’t tracked it down yet…

As you can see, using Grilo is really easy… even if you decide to mix it with Qt! 🙂