GStreamer VA-API 1.14: what's new?

As you may already know, there is a new release of GStreamer, 1.14. In this blog post we will talk about the new features and improvements of the GStreamer VA-API module, though you can find a more comprehensive list of changes in the release notes. Most of the topics covered in this post are already mentioned in the release notes, but here they are explained in a bit more detail.

DMABuf usage #

We have improved DMABuf usage, mostly downstream.

In the case of upstream, we just got rid of a nasty hack which detected when to instantiate and use a buffer pool in the sink pad with a dma-buf based allocator. This functionality had already been broken for a while, and that code was the wrong way to enable it. The sharing of a dma-buf based buffer pool with upstream is going to be re-enabled after the fix for bug 792034 is merged.

For downstream, we have added the handling of the memory:DMABuf caps feature. The purpose of this caps feature is to negotiate a media type when the buffers cannot be mapped into user space, because of digital rights or platform restrictions.

For example, intel-vaapi-driver currently doesn’t allow the mapping of the dma-buf descriptors it produces. But, as we cannot know in advance whether a back-end produces map-able dma-buf descriptors or not, gstreamer-vaapi creates a dummy buffer when the allocator is instantiated and tries to map it. If the mapping fails, the memory:DMABuf caps feature is negotiated; otherwise, normal video caps are used.
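The probe-and-fallback decision can be sketched in a few lines. This is only a conceptual model in plain Python, not the actual gstreamer-vaapi code: `choose_src_caps` and the `try_map` callback are hypothetical names, and NV12 is just an example format. Only the caps string syntax, with the feature in parentheses after the media type, follows GStreamer's convention.

```python
from typing import Callable

# Caps feature named in this post; everything else here is illustrative.
DMABUF_FEATURE = "memory:DMABuf"


def choose_src_caps(try_map: Callable[[], bool], fmt: str = "NV12") -> str:
    """Pick a caps string depending on whether a probe buffer can be mapped.

    `try_map` is a hypothetical callback standing in for "create a dummy
    buffer and try to map it"; it returns True when the mapping succeeds.
    """
    try:
        mappable = try_map()
    except OSError:
        # Treat a failing map call the same as an unmappable buffer.
        mappable = False

    if mappable:
        # Buffers can be read from user space: plain video caps.
        return f"video/x-raw, format={fmt}"
    # Buffers are opaque to user space: advertise the caps feature instead.
    return f"video/x-raw({DMABUF_FEATURE}), format={fmt}"


if __name__ == "__main__":
    print(choose_src_caps(lambda: True))
    print(choose_src_caps(lambda: False))
```

The point of the sketch is that the decision is taken once, at allocator creation time, and then drives which caps are offered downstream.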

VA-API usage #

First of all, GStreamer VA-API now supports libva-2.0, which means VA-API 1.0. We had to guard both some deprecated symbols and the new ones. Nowadays most distributions have upgraded to libva-2.0.

We have improved the initialization of the VA display internal structure (GstVaapiDisplay). Previously, when an X-based display was instantiated, it immediately tried to grab the screen resolution. Obviously, this broke usage on headless systems. Now we delay the screen resolution check until the VA display truly requires that information.

New APIs were added to VA, particularly for log handling. Now it is possible to redirect the log messages into a callback. We use it to redirect VA-API messages into the GStreamer log mechanisms, uncluttering the console’s output.

Also, we have blacklisted, in autoconf and meson, libva version 0.99.0, because that version is used internally by the closed-source version of Intel MediaSDK, which is incompatible with official libva. By the way, there is a new open-source version of MediaSDK, but we will talk about it in a future blog post.

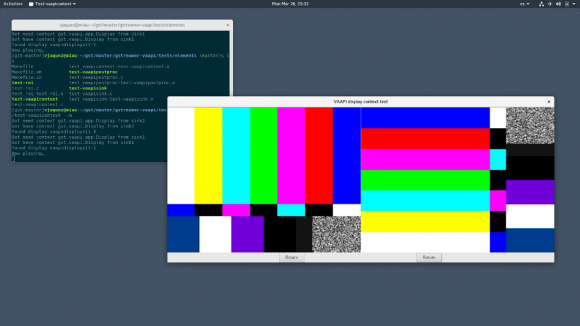

Application VA Display sharing #

Normally, the GstVaapiDisplay object is shared among the pipeline elements through the GstContext mechanism. But this class is defined internally and has not been exposed to users since release 1.6. This posed a problem when an application wanted to create its own VA display and share it with an embedded pipeline. The solution is a new application context message: gst.vaapi.app.Display, defined as a GstStructure with two fields: va-display, with the application’s vaDisplay, and x11-display, with the application’s native X11 display. In the future, a native Wayland handle will be processed too. Please note that this context message is only processed by vaapisink.

One precondition for this solution was the removal of the VA display cache mechanism, a long-standing request from users, which, of course, we did.

Interoperability with appsink and similar #

A hardware-accelerated driver, such as the Intel one, may have custom offsets and strides for specific memory regions. We use GstVideoMeta to set these custom values. The problem comes when downstream does not handle this meta, as is the case with appsink. The user then expects the “normal” values for those variables, but with GStreamer VA-API and a hardware-based driver the frame is shown corrupted when the user displays it.

In order to fix this, we have to make a memory copy from our custom VA-API images to allocated system memory. Of course there is a big CPU penalty, but it is better than delivering wrong video frames. If the user wants better performance, they should look for a different approach.
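To make the cost of that copy concrete, here is a minimal sketch in plain Python of what repacking a single plane involves. It is an illustration, not the gstreamer-vaapi implementation: `copy_plane` and its parameters are made-up names, and the offset and stride simply play the role of the values that GstVideoMeta would carry.

```python
def copy_plane(src: bytes, offset: int, stride: int,
               width_bytes: int, height: int) -> bytes:
    """Repack one plane with a custom offset/stride into a tight buffer.

    The result has exactly `width_bytes` per row, which is the layout a
    consumer ignoring the video meta would assume.
    """
    if stride < width_bytes:
        raise ValueError("stride must be at least the row width")
    if height > 0 and offset + (height - 1) * stride + width_bytes > len(src):
        raise ValueError("source buffer is too small for this layout")

    out = bytearray(width_bytes * height)
    for row in range(height):
        start = offset + row * stride
        # Copy only the visible bytes of the row, skipping the padding.
        out[row * width_bytes:(row + 1) * width_bytes] = \
            src[start:start + width_bytes]
    return bytes(out)


if __name__ == "__main__":
    # Two rows of 4 visible bytes, stride 6, plane starting at byte 2.
    padded = b"\xff\xff" + b"ABCD" + b"\x00\x00" + b"EFGH" + b"\x00\x00"
    print(copy_plane(padded, offset=2, stride=6, width_bytes=4, height=2))
```

Every row of every plane of every frame goes through a loop like this, which is where the CPU penalty comes from.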

Resurrection of GstGLTextureUploadMeta for EGL renders #

I know, GstGLTextureUploadMeta must die, right? I am convinced of it. But the Clutter video sink uses it, and it has a vast number of users, so we still have to support it.

In the previous release we removed the support for EGL/Wayland at the last minute because we found a terrible bug just before the release. GLX support has always been there.

Thanks to Daniel van Vugt’s efforts, we resurrected the support for that meta in EGL. Still, I expect the Clutter sink to be replaced with glimagesink someday soon.

vaapisink demoted in Wayland #

vaapisink was demoted to marginal rank on Wayland because COGL cannot display YUV surfaces.

This means that, by default, vaapisink won’t be auto-plugged when playing in Wayland.

The reason is that Mutter (aka GNOME) cannot display the frames processed by vaapisink in Wayland. Nonetheless, please note that in Weston it works just fine.

Decoders #

We have slightly improved upstream renegotiation: if the new stream is compatible with the previous one, there is no need to reset the internal parser, except when the codec-data changes.

low-latency property in H.264 #

A new property has been added, only to the H.264 decoder: low-latency. It is meant for live streams that do not conform to the H.264 specification (sadly there are many in the wild) and need the spec implementation to be tweaked. This property forces the frames in the decoded picture buffer to be pushed as soon as possible.

base-only property in H.264 #

This is the result of the Google Summer of Code 2017, by Orestis Floros. When this property is enabled, all the MVC (Multiview Video Coding) or SVC (Scalable Video Coding) frames are dropped. This is useful if you want to reduce the processing time or if your VA-API driver does not support those kinds of streams.

Encoders #

In this release we have put a lot of effort into the encoders.

Processing Regions of Interest #

It is possible, for certain back-ends and profiles (for example, H.264 and H.265 encoders with the Intel driver), to specify a set of regions of interest per frame, with a delta-qp per region. This means that we ask for more quality in those regions.

In order to process regions of interest, upstream must add a list of GstVideoRegionOfInterestMeta to the video frame. The encoder then traverses this list and requests the regions if the VA-API profile, in the driver, supports them.

The common use-case for this feature is when you want higher quality in regions of the picture with faces or text messages, for example.
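As a rough mental model of what a delta-qp per region means, consider the sketch below. It is plain Python and purely conceptual: the `Roi` class and `effective_qp` function are hypothetical, the "first matching region wins" rule is an assumption (overlap handling is up to the driver), and the only hard fact used is that H.264 quantization parameters range from 0 to 51, with lower values meaning higher quality.

```python
from dataclasses import dataclass
from typing import List

# H.264 quantization parameter range; lower QP means higher quality.
QP_MIN, QP_MAX = 0, 51


@dataclass
class Roi:
    """A rectangular region of interest with its QP offset (illustrative)."""
    x: int
    y: int
    w: int
    h: int
    delta_qp: int


def effective_qp(base_qp: int, px: int, py: int, rois: List[Roi]) -> int:
    """Return the QP applied at pixel (px, py).

    Assumption: the first region containing the pixel wins. Real drivers
    may resolve overlapping regions differently.
    """
    for roi in rois:
        inside_x = roi.x <= px < roi.x + roi.w
        inside_y = roi.y <= py < roi.y + roi.h
        if inside_x and inside_y:
            return max(QP_MIN, min(QP_MAX, base_qp + roi.delta_qp))
    return base_qp


if __name__ == "__main__":
    face = Roi(x=0, y=0, w=16, h=16, delta_qp=-6)
    print(effective_qp(26, 8, 8, [face]))   # inside the region
    print(effective_qp(26, 32, 8, [face]))  # outside the region
```

A negative delta-qp lowers the quantization parameter inside the region, which is how "more quality" is requested there.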

New encoding properties #

- quality-level: For all the available encoders. This is a number from 1 to 8, where a lower number means higher quality (and slower processing).

- aud: This is for the H.264 encoder only and it is available for certain drivers and platforms. When it is enabled, an AU delimiter is inserted for each encoded frame. This is useful for network streaming, and more particularly for Apple clients.

- mbbrc: For H.264 only. Controls (auto/on/off) the macro-block bit-rate.

- temporal-levels: For H.264 only. It specifies the number of temporal levels to include in the hierarchical frame prediction.

- prediction-type: For H.264 only. It selects the reference picture selection mode. The frames are encoded as different layers. A frame in a particular layer will use pictures in lower or same layer as references. This means the decoder can drop frames in an upper layer but still decode lower layer frames.

  - hierarchical-p: P frames, except in top layer, are reference frames. Base layer frames are I or B.

  - hierarchical-b: B frames, except in top most layer, are reference frames. All the base layer frames are I or P.

- refs: Added for H.265 (it was already supported for H.264). It specifies the number of reference pictures.

- qp-ip and qp-ib: For H.264 and H.265 encoders. They handle the QP (quantization parameter) difference between the I and P frames, and the I and B frames, respectively.
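The layering behind temporal-levels and prediction-type is easier to picture with a small example. The sketch below, in plain Python, assigns frames to temporal layers using a dyadic pattern, which is a common arrangement in scalable video coding. Whether gstreamer-vaapi or a given driver uses exactly this assignment is an assumption; `temporal_layer` and `decodable_frames` are hypothetical helpers for illustration only.

```python
from typing import List


def temporal_layer(frame_index: int, levels: int) -> int:
    """Return the temporal layer of a frame in a dyadic hierarchy.

    Layer 0 is the base layer. With `levels` layers the pattern repeats
    every 2 ** (levels - 1) frames.
    """
    if levels < 1:
        raise ValueError("levels must be >= 1")
    period = 1 << (levels - 1)
    pos = frame_index % period
    if pos == 0:
        return 0
    # Count trailing zero bits: the more there are, the lower the layer.
    trailing_zeros = (pos & -pos).bit_length() - 1
    return levels - 1 - trailing_zeros


def decodable_frames(num_frames: int, levels: int, max_layer: int) -> List[int]:
    """Indices of the frames kept when layers above `max_layer` are dropped."""
    return [i for i in range(num_frames)
            if temporal_layer(i, levels) <= max_layer]


if __name__ == "__main__":
    print([temporal_layer(i, 3) for i in range(8)])
    print(decodable_frames(8, 3, max_layer=1))
```

Because a frame only references pictures in the same or lower layers, dropping the top layer halves the frame rate in this model while the remaining frames stay decodable.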

Set media profile via downstream caps #

H.264 and H.265 encoders now can configure the desired media profile through the downstream caps.

Contributors #

Many thanks to all the contributors and bug reporters.

1 Daniel van Vugt

46 Hyunjun Ko

1 Jan Schmidt

3 Julien Isorce

1 Matt Staples

2 Matteo Valdina

2 Matthew Waters

1 Michael Tretter

4 Nicolas Dufresne

9 Orestis Floros

1 Philippe Normand

4 Sebastian Dröge

24 Sreerenj Balachandran

1 Thibault Saunier

13 Tim-Philipp Müller

1 Tomas Rataj

2 U. Artie Eoff

1 VaL Doroshchuk

172 Víctor Manuel Jáquez Leal

3 XuGuangxin

2 Yi A Wang