Composited video support in WebKitGTK+

A couple of months ago we started working on adding support for composited video in WebKitGTK+. The objective is to play video in WebKitGTK+ through the hardware-accelerated path, so that we can play videos at high-definition resolutions (1080p).

How does WebKit paint? #

Basically, we can think of a browser as an application for retrieving, presenting and traversing information on the Web.

For the composited video support, we are interested in the presentation task of the browser, and more specifically in the graphical presentation.

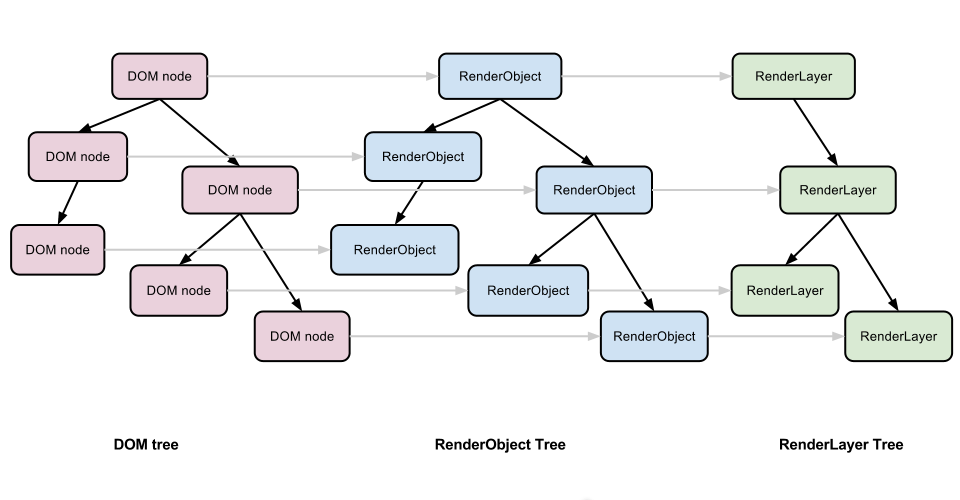

In WebKit, each HTML element on a web page is stored as a Node object, and these nodes form a tree called the DOM tree.

Then, each Node that produces visual output has a corresponding RenderObject, and they are stored in another tree, called the Render Tree.

Finally, each RenderObject is associated with a RenderLayer. These RenderLayers exist so that the elements of the page are composited in the correct order to properly display overlapping content, semi-transparent elements, etc.

It is worth mentioning that there is not a one-to-one correspondence between RenderObjects and RenderLayers, and that there is a RenderLayer tree as well.

WebKit fundamentally renders a web page by traversing the RenderLayer tree.
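To make the traversal idea concrete, here is a minimal sketch of painting by walking a layer tree. `SketchLayer`, `paintLayer` and `paintExamplePage` are hypothetical names invented for this illustration, not WebKit classes, and the ordering rule is an assumption: paint a layer first, then its children in ascending z-order. The real RenderLayer ordering rules are more involved.

```cpp
#include <algorithm>
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, heavily simplified stand-in for a RenderLayer.
struct SketchLayer {
    SketchLayer(std::string layerName, int z)
        : name(std::move(layerName))
        , zIndex(z)
    {
    }

    // Adds a child layer and returns a reference to it.
    SketchLayer& addChild(std::string childName, int z)
    {
        children.push_back(std::unique_ptr<SketchLayer>(new SketchLayer(std::move(childName), z)));
        return *children.back();
    }

    std::string name;
    int zIndex;
    std::vector<std::unique_ptr<SketchLayer>> children;
};

// Records the layer itself, then its children sorted by ascending z-index,
// so that layers recorded later end up on top of earlier ones.
void paintLayer(const SketchLayer& layer, std::vector<std::string>& paintOrder)
{
    paintOrder.push_back(layer.name);

    std::vector<const SketchLayer*> ordered;
    for (const auto& child : layer.children)
        ordered.push_back(child.get());
    std::stable_sort(ordered.begin(), ordered.end(),
        [](const SketchLayer* a, const SketchLayer* b) { return a->zIndex < b->zIndex; });

    for (const SketchLayer* child : ordered)
        paintLayer(*child, paintOrder);
}

// Builds a tiny page (an overlay above a video that has controls) and paints it.
std::vector<std::string> paintExamplePage()
{
    SketchLayer root("root", 0);
    root.addChild("overlay", 2);
    SketchLayer& video = root.addChild("video", 1);
    video.addChild("controls", 0);

    std::vector<std::string> paintOrder;
    paintLayer(root, paintOrder);
    assert(paintOrder.front() == "root"); // the root is always visited first
    return paintOrder;
}
```

In this sketch the overlay is painted last even though it was added first, because the traversal follows the z-order rather than the insertion order.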

What is the accelerated compositing? #

WebKit has two paths for rendering the contents of a web page: the software path and hardware accelerated path.

The software path is the traditional model, where all the work is done on the CPU. In this mode, RenderObjects paint themselves into the final bitmap, compositing a final layer which is presented to the user.

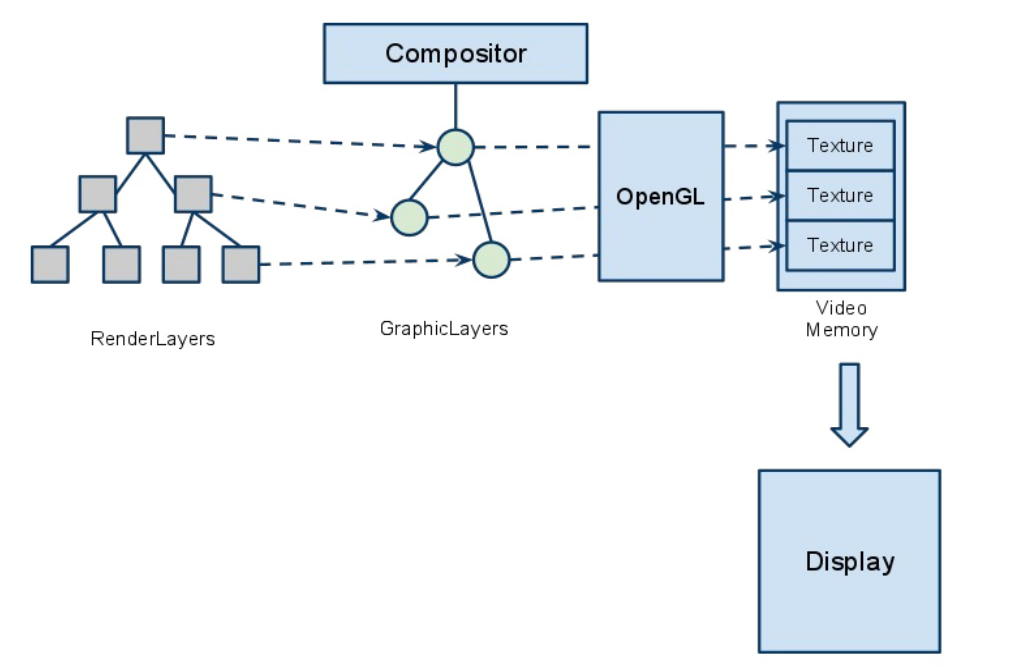

In the hardware accelerated path, some of the RenderLayers get their own backing surface into which they paint. Then, all the backing surfaces are composited onto the destination bitmap, and this task is the responsibility of the compositor.
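As a rough illustration of what "compositing backing surfaces onto a destination" means, the following sketch blends a stack of surfaces from bottom to top on the CPU. Everything here is an assumption made for the example: `SketchBackingSurface` and `compositeSurfaces` are hypothetical names, pixels are single gray values, and each surface has one layer-wide opacity. A real compositor does this work per pixel on the GPU.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical backing surface: one gray value per pixel plus a layer-wide opacity.
struct SketchBackingSurface {
    std::vector<float> pixels; // each value in the range 0.0 to 1.0
    float opacity;             // 0.0 is transparent, 1.0 is opaque
};

// Blends the surfaces in order (bottom first) onto a black destination:
// destination = source * opacity + destination * (1 - opacity)
std::vector<float> compositeSurfaces(const std::vector<SketchBackingSurface>& surfaces, std::size_t size)
{
    std::vector<float> destination(size, 0.0f);
    for (const SketchBackingSurface& surface : surfaces) {
        assert(surface.pixels.size() == size); // all surfaces cover the same area in this sketch
        for (std::size_t i = 0; i < size; ++i)
            destination[i] = surface.pixels[i] * surface.opacity + destination[i] * (1.0f - surface.opacity);
    }
    return destination;
}
```

For instance, an opaque white surface with a half-transparent black surface on top yields mid-gray pixels.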

With the introduction of compositing an additional conceptual tree is added: the GraphicsLayer tree, where each RenderLayer may have its own GraphicsLayer.

In the hardware accelerated path, the GPU is used for compositing some of the RenderLayer contents.

As Iago said, accelerated compositing involves offloading the compositing of the GraphicsLayers onto the GPU, which does the compositing very fast. This relieves the CPU of that burden and delivers a better and more responsive user experience.

Although there are other options, OpenGL is typically used to render computer graphics, interacting with the GPU to achieve hardware acceleration. WebKit provides a cross-platform implementation to render with OpenGL.

How does WebKit paint using OpenGL? #

Ideally, we could go from the GraphicsLayer tree directly to OpenGL, traversing it and drawing the texture-backed layers with a common WebKit implementation.

But an abstraction layer was needed, because different GPUs may behave differently and may offer different extensions, and because we still want to use the software path if hardware acceleration is not available.

This abstraction layer is known as the Texture Mapper, a light-weight scene-graph implementation specially attuned for efficient usage of the GPU.

It is a combination of a specialized accelerated drawing context (TextureMapper) and a scene-graph (TextureMapperLayer):

The TextureMapper is an abstract class that provides the necessary drawing primitives for the scene-graph. Its purpose is to abstract different implementations of the drawing primitives from the scene-graph.

One of the implementations is the TextureMapperGL, which provides a GPU-accelerated implementation of the drawing primitives, using shaders compatible with GL/ES 2.0.

There is a TextureMapperLayer which may represent a GraphicsLayer node in the GPU-renderable layer tree. The TextureMapperLayer tree is equivalent to the GraphicsLayer tree.
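The split between a drawing context and a scene-graph can be sketched as follows. This is not WebKit's actual interface: `SketchTextureMapper`, `SketchMapperGL`, `SketchMapperSoftware` and `SketchMapperLayer` are hypothetical names, and the "drawing" only records a command string. The point is that the layer tree paints itself through the abstract class and never needs to know which backend is behind it.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical drawing context: the only primitive is "draw this texture".
class SketchTextureMapper {
public:
    virtual ~SketchTextureMapper() = default;
    virtual void drawTexture(const std::string& textureName) = 0;
    const std::vector<std::string>& commands() const { return m_commands; }

protected:
    std::vector<std::string> m_commands; // recorded instead of really drawing
};

// Stand-in for a GPU-backed implementation.
class SketchMapperGL : public SketchTextureMapper {
public:
    void drawTexture(const std::string& textureName) override { m_commands.push_back("gl:" + textureName); }
};

// Stand-in for a software fallback implementation.
class SketchMapperSoftware : public SketchTextureMapper {
public:
    void drawTexture(const std::string& textureName) override { m_commands.push_back("sw:" + textureName); }
};

// Hypothetical scene-graph node: draws its own texture, then its children.
class SketchMapperLayer {
public:
    explicit SketchMapperLayer(std::string textureName)
        : m_textureName(std::move(textureName))
    {
        assert(!m_textureName.empty());
    }

    SketchMapperLayer& addChild(std::string textureName)
    {
        m_children.push_back(std::unique_ptr<SketchMapperLayer>(new SketchMapperLayer(std::move(textureName))));
        return *m_children.back();
    }

    void paint(SketchTextureMapper& mapper) const
    {
        mapper.drawTexture(m_textureName);
        for (const auto& child : m_children)
            child->paint(mapper);
    }

private:
    std::string m_textureName;
    std::vector<std::unique_ptr<SketchMapperLayer>> m_children;
};
```

Painting the same tree with `SketchMapperGL` or `SketchMapperSoftware` produces the same sequence of draw calls, which is the property that lets the scene-graph code stay identical across backends.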

How does WebKitGTK+ play a video? #

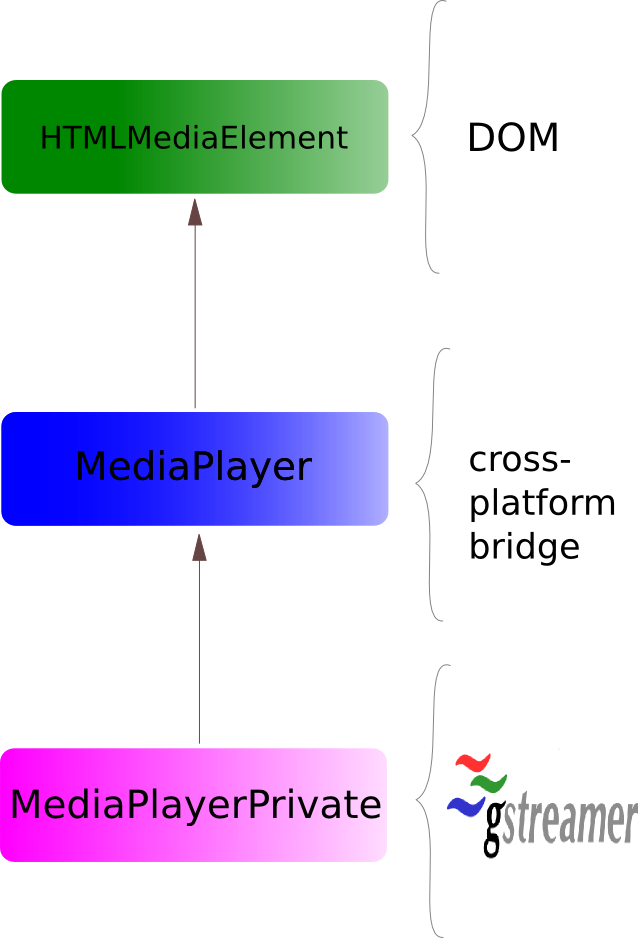

As we stated earlier, in WebKit each HTML element on a web page is stored as a Node in the DOM tree, and WebKit provides a Node class hierarchy for all the HTML elements. In the case of the video tag, there is a parent class called HTMLMediaElement, which aggregates a common, cross-platform media player, the MediaPlayer. The MediaPlayer is a decorator for a platform-specific media player known as MediaPlayerPrivate.

The diagram above summarizes these relationships.
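The relationship between the cross-platform player and its platform-specific backend can be sketched like this. The class names and methods below (`SketchMediaPlayer`, `SketchMediaPlayerPrivate`, `SketchGStreamerBackend`, `load`, `play`, `isPlaying`) are hypothetical and far smaller than the real WebKit classes; the sketch only shows the front-end forwarding every call to whichever backend it was given.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical platform-specific interface.
class SketchMediaPlayerPrivate {
public:
    virtual ~SketchMediaPlayerPrivate() = default;
    virtual void load(const std::string& url) = 0;
    virtual void play() = 0;
    virtual bool isPlaying() const = 0;
};

// Stand-in for a GStreamer-based backend; it only tracks state.
class SketchGStreamerBackend : public SketchMediaPlayerPrivate {
public:
    void load(const std::string& url) override { m_url = url; }
    void play() override { m_playing = !m_url.empty(); } // cannot play before loading
    bool isPlaying() const override { return m_playing; }

private:
    std::string m_url;
    bool m_playing = false;
};

// Hypothetical cross-platform front-end: forwards everything to the backend.
class SketchMediaPlayer {
public:
    explicit SketchMediaPlayer(std::unique_ptr<SketchMediaPlayerPrivate> backend)
        : m_private(std::move(backend))
    {
        assert(m_private);
    }

    void load(const std::string& url) { m_private->load(url); }
    void play() { m_private->play(); }
    bool isPlaying() const { return m_private->isPlaying(); }

private:
    std::unique_ptr<SketchMediaPlayerPrivate> m_private;
};
```

With this shape, the code that handles the video element only talks to the front-end, and each port can plug in its own backend.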

In the GTK+ port the audio and video decoding is done with GStreamer. In the case of video, a special GStreamer video sink injects the decoded buffers into the WebKit process. You can think about it as a special kind of GstAppSink, and it is part of the WebKitGTK+ code-base.

And we come back to the two paths for content rendering in WebKit:

In the software path the decoded video buffers are copied into a Cairo surface.

But in the hardware accelerated path, the decoded video buffers must be uploaded into an OpenGL texture. When a new video buffer is available to be shown, a message is sent to the GraphicsLayer asking for a redraw.
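The difference between the two paths when a frame arrives can be sketched as below. `SketchVideoPainter` and its members are hypothetical names for this illustration. The assumptions are that the software path copies the pixels into a surface right away, while the accelerated path only keeps a reference to the frame for a later texture upload and counts a redraw request for the layer.

```cpp
#include <cassert>
#include <memory>
#include <utility>
#include <vector>

using SketchFrame = std::vector<unsigned char>;

// Hypothetical receiver of decoded frames coming from the video sink.
class SketchVideoPainter {
public:
    explicit SketchVideoPainter(bool accelerated)
        : m_accelerated(accelerated)
    {
    }

    void onFrameDecoded(std::shared_ptr<const SketchFrame> frame)
    {
        assert(frame);
        if (m_accelerated) {
            // Accelerated path: keep the frame for the texture upload and ask for a redraw.
            m_pendingFrame = std::move(frame);
            ++m_redrawRequests;
        } else {
            // Software path: copy the pixels into the surface that gets painted.
            m_softwareSurface = *frame;
        }
    }

    const SketchFrame& softwareSurface() const { return m_softwareSurface; }
    const std::shared_ptr<const SketchFrame>& pendingFrame() const { return m_pendingFrame; }
    int redrawRequests() const { return m_redrawRequests; }

private:
    bool m_accelerated;
    SketchFrame m_softwareSurface;
    std::shared_ptr<const SketchFrame> m_pendingFrame;
    int m_redrawRequests = 0;
};
```

Note that the accelerated branch in this sketch never touches the pixel data itself, which is where the upload mechanism discussed in the next section comes in.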

Uploading video buffers into GL textures #

When we are dealing with big enough buffers, such as the high definition video buffers, copying buffers is a performance killer. That is why zero-copy techniques are mandatory.

Moreover, when we are working in a multi-processor environment, such as one with both a CPU and a GPU, moving buffers between the processors' contexts is also very expensive.

For these reasons, the video decoding and the OpenGL texture handling should happen only in the GPU, without context switching and without copying memory chunks.

The simplest approach would be for the decoder to deliver an EGLImage, so we could bind the handle to the texture. As far as I know, the gst-omx video decoder in the Raspberry Pi works this way.

GStreamer added a new API, which will be available in version 1.2, to upload video buffers into a texture efficiently: GstVideoGLTextureUploadMeta. This API is exposed through the buffer's metadata, and ought to be implemented by any downstream element that deals with the decoded video frames, most commonly the video decoder.

For example, in gstreamer-vaapi there are a couple of patches (which are still a work in progress) in bugzilla, enabling this API. At the low level, calling gst_video_gl_texture_upload_meta_upload() will call vaCopySurfaceGLX(), which will do an efficient copy of the VA-API surface into a texture using a GLX extension.
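The idea of an upload function travelling with the buffer can be modelled without any GStreamer code. The sketch below is only a model and does not use the real API: `SketchUploadMeta`, `SketchBuffer`, `SketchUploadPath` and `uploadToTexture` are hypothetical names. It assumes the producer of the frame attaches a callback that knows how to put its own frame into a texture, and that the consumer falls back to a plain CPU copy when no such callback is present.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical metadata attached to a buffer by the element that produced it.
struct SketchUploadMeta {
    // Uploads the frame into the given texture; returns true on success.
    std::function<bool(unsigned textureId)> upload;
};

// Hypothetical video buffer.
struct SketchBuffer {
    std::vector<unsigned char> pixels;
    const SketchUploadMeta* uploadMeta = nullptr; // null when the producer offers no upload function
};

enum class SketchUploadPath { ProducerUpload, CpuCopyFallback };

// Prefers the producer-provided upload; otherwise copies the pixels into a
// CPU staging area (the slow path that the metadata is meant to avoid).
SketchUploadPath uploadToTexture(const SketchBuffer& buffer, unsigned textureId, std::vector<unsigned char>& cpuStaging)
{
    if (buffer.uploadMeta && buffer.uploadMeta->upload && buffer.uploadMeta->upload(textureId))
        return SketchUploadPath::ProducerUpload;

    cpuStaging = buffer.pixels;
    return SketchUploadPath::CpuCopyFallback;
}
```

The consumer never learns how the producer performs the upload, so a decoder is free to use whatever efficient mechanism its platform offers.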

Demo #

This is an old demo, recorded when all the pieces started to fit together, so it does not reflect the current performance. Still, it shows what has been achieved:

Future work #

So far, all these bits are already integrated in WebKitGTK+ and GStreamer. Nevertheless there are some open issues.

- gstreamer-vaapi et al.: GStreamer 1.2 is not released yet, and its new API might change. Also, the port of gstreamer-vaapi to GStreamer 1.2 is still a work in progress, and the available patches may have rough edges. Many other projects, such as clutter-gst, need to be updated to use this new API and to provide more feedback to the community. It is also important to have more GStreamer elements implementing these new APIs, such as the texture upload and the caps features.

- Tearing: The composited video task unveiled a major problem in WebKitGTK+: it does not handle the vertical blank interval at all, causing tearing artifacts that are clearly observable in high-resolution videos with high motion. WebKitGTK+ composites the scene off-screen, using an X Composite redirected window, and then displays it in an X Damage callback, but currently GTK+ does not take care of the vertical blank interval, which causes this tearing artifact in heavy compositions. At Igalia, we are currently researching a way to fix this issue.

- Performance: There is always room for performance improvement, and we are always aiming in that direction: improving the frame rate, the CPU, GPU and memory usage, et cetera. So, stay tuned, or even better, come and help us.