Introduction to damage propagation in WPE and GTK WebKit ports

Damage propagation is an optional WPE/GTK WebKit feature that, when enabled, reduces the browser’s GPU utilization at the expense of increased CPU and memory utilization. It is especially useful on low- and mid-end embedded devices, where GPUs are usually not very powerful and thus become a performance bottleneck in many applications.

Basic definitions #

The only two terms that require explanation to understand the feature on a surface level are the damage and its propagation.

The damage #

In computer graphics, the term damage is usually used in the context of repeatable rendering and essentially means “the region of a rendered scene that changed and requires repainting”.

In the context of WebKit, the above definition can be made more specific, as WebKit’s rendering engine renders web content to frames (passed further to the platform) in response to changes within a web page. Thus WebKit’s damage refers, more specifically, to “the region of the web page view that changed since the previous frame and requires repainting”.

On the implementation level, the damage is almost always a collection of rectangles that cover the changed region. This is exactly the case for WPE and GTK WebKit ports.
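To make the "collection of rectangles" idea concrete, here is a minimal Python sketch. It is not WebKit's C++ code: the `Rect` and `Damage` classes and their methods are hypothetical names chosen for illustration only.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle: top-left corner plus size, in pixels."""
    x: int
    y: int
    width: int
    height: int

    def is_empty(self) -> bool:
        return self.width <= 0 or self.height <= 0

    def unite(self, other: "Rect") -> "Rect":
        """Smallest rectangle containing both self and other."""
        left = min(self.x, other.x)
        top = min(self.y, other.y)
        right = max(self.x + self.width, other.x + other.width)
        bottom = max(self.y + self.height, other.y + other.height)
        return Rect(left, top, right - left, bottom - top)


@dataclass
class Damage:
    """Hypothetical container: the changed region as a list of rectangles."""
    rects: list = field(default_factory=list)

    def add(self, rect: Rect) -> None:
        # Empty rectangles carry no information, so they are skipped.
        if not rect.is_empty():
            self.rects.append(rect)

    def bounds(self):
        """Bounding box of all rectangles, or None when nothing changed."""
        if not self.rects:
            return None
        result = self.rects[0]
        for rect in self.rects[1:]:
            result = result.unite(rect)
        return result


damage = Damage()
damage.add(Rect(10, 10, 100, 50))
damage.add(Rect(200, 120, 40, 40))
damage.add(Rect(0, 0, 0, 0))  # ignored: empty
print(len(damage.rects), damage.bounds())
```

Keeping the individual rectangles (rather than only their bounding box) preserves the information that the area between two distant changes did not change.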

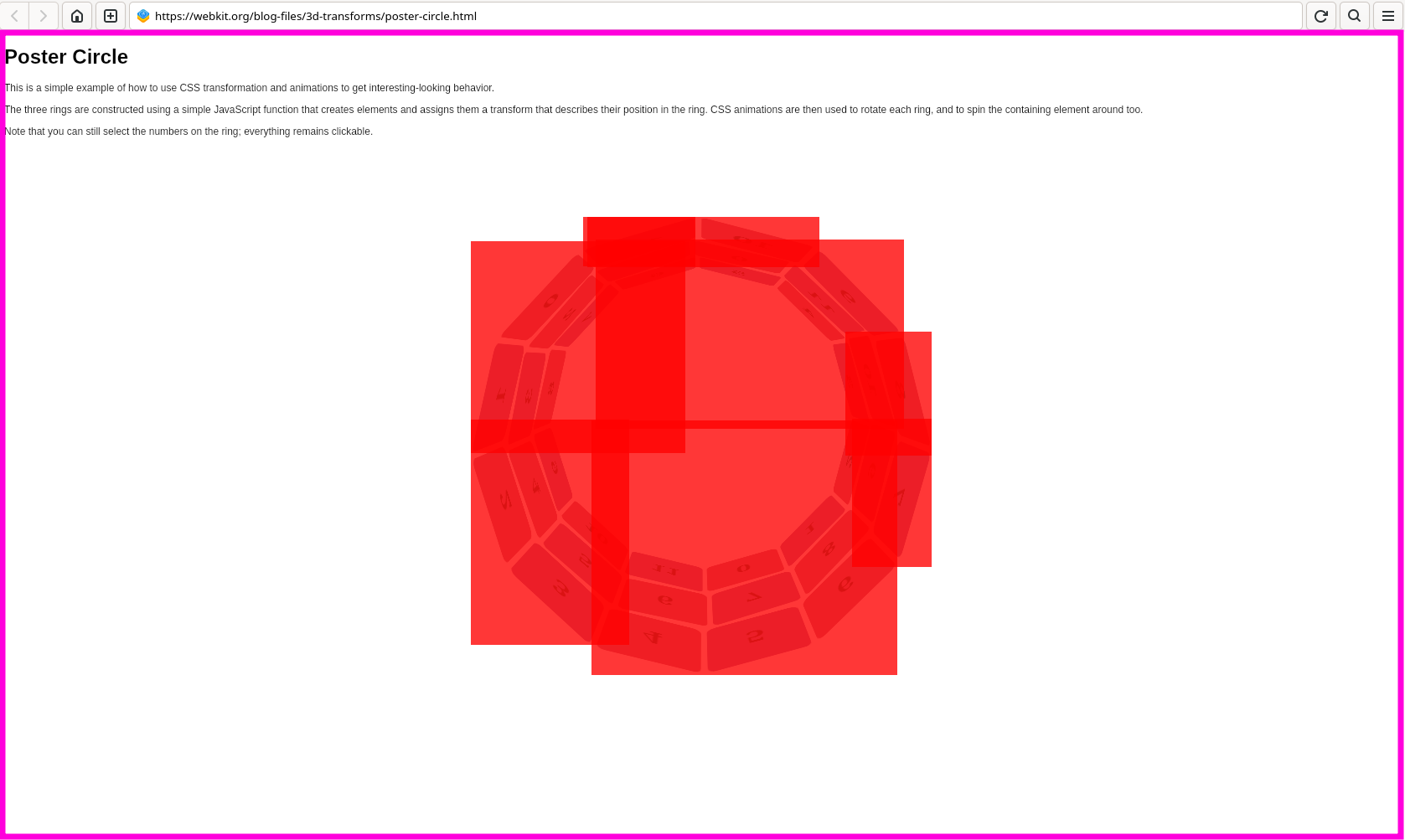

To better understand what the above means, it’s recommended to carefully examine the accompanying screenshot of GTK MiniBrowser, as it depicts the rendering of the poster circle demo with the damage visualizer activated:

- the web page view: marked with a rectangle stroked in magenta,

- the damage: marked with red rectangles,

- the browser elements: everything that lies above the magenta rectangle.

In practice, the image shows that during the rendering of that particular frame, the area highlighted in red (the damage) changed and needs to be repainted. Thus, as expected, only the moving parts of the demo require repainting. It’s also easy to see how small a fraction of the web page view requires repainting, which gives an idea of the gains from the reduced amount of painting.

The propagation #

Normally, the job of the rendering engine is to paint the contents of a web page view to a frame (or buffer, in more general terms) and provide that rendering result to the platform on every scene rendering iteration, which usually happens 60 times per second. Without the damage propagation feature, the whole frame (the whole web page view) is always marked as changed. Therefore, the platform has to perform a full update of its pixels 60 times per second.

While the above approach is good enough in most use cases, it can be optimized for embedded devices with less powerful GPUs. The basic idea is to produce the frame along with the damage information, i.e. a hint for the platform on what changed within the produced frame. With the damage provided (usually as an array of rectangles), the platform can optimize many of its operations, as it can effectively perform just a partial update of its internal memory. In practice, this usually means that fewer pixels require updating on the screen.
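The partial update can be sketched in a few lines of Python. This is only an illustration of the idea under simplifying assumptions: the "screen" and the "frame" are plain lists of rows, and `partial_update` is a hypothetical helper, not an API of any platform.

```python
def partial_update(screen, frame, damage_rects):
    """Copy only the damaged pixels from frame into screen.

    screen and frame are equally sized lists of rows; each damage rect is
    an (x, y, width, height) tuple in frame coordinates.
    Returns the number of pixels written.
    """
    height = len(frame)
    width = len(frame[0]) if height else 0
    written = 0
    for x, y, w, h in damage_rects:
        # Clamp the rectangle to the frame so a hint that overshoots is safe.
        x0, y0 = max(x, 0), max(y, 0)
        x1, y1 = min(x + w, width), min(y + h, height)
        for row in range(y0, y1):
            if x1 > x0:
                screen[row][x0:x1] = frame[row][x0:x1]
                written += x1 - x0
    return written


# An 8x4 "screen" showing the previous frame (all zeros) ...
screen = [[0] * 8 for _ in range(4)]
# ... and a new frame in which only a 2x2 block changed.
frame = [[0] * 8 for _ in range(4)]
for row in (1, 2):
    frame[row][3:5] = [7, 7]

written = partial_update(screen, frame, [(3, 1, 2, 2)])
print(written, "of", 8 * 4, "pixels updated")
```

Without the damage hint, all 32 pixels would have to be copied to bring the screen in sync with the new frame.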

For the above optimization to work, the damage has to be calculated by the rendering engine for each frame and then propagated along with the produced frame up to its final destination. Thus the damage propagation can be summarized as continuous damage calculation and propagation throughout the web engine.

Damage propagation pipeline #

Once the general idea has been highlighted, it’s possible to examine the damage propagation in more detail. Before reading further, however, it’s highly recommended for the reader to go carefully through the famous “WPE Graphics architecture” article that gives a good overview of the WebKit graphics pipeline in general and which introduces the basic terminology used in that context.

Pipeline overview #

The information on the visual changes within the web page view has to travel a very long way before it reaches the final destination. As it traverses the thread and process boundaries in an orderly manner, it can be summarized as forming a pipeline within the broader graphics pipeline. The image below presents an overview of this damage propagation pipeline:

Pipeline details #

This pipeline starts with the changes to the web page view visual state (RenderTree) being triggered by one of many possible sources. Such sources may include:

- user interactions, e.g. moving the mouse cursor around (and hence hovering elements), typing text using the keyboard, etc.,

- Web API usage, e.g. the web page changing DOM, CSS, etc.,

- multimedia, e.g. the media player in a playing state,

- and many others.

Once the changes are induced for certain RenderObjects, their visual impact is calculated and encoded as rectangles. These are called dirty rectangles, as they require repainting within the GraphicsLayer that the particular RenderObject maps to. At this point, the visual changes may simply be called layer damage, as the dirty rectangles are stored in the layer coordinate space and describe what changed within that particular layer since the last frame was rendered.

The next step in the pipeline is passing the layer damage of each GraphicsLayer (GraphicsLayerCoordinated) to WebKit’s compositor. This is done along with any other layer updates and is mostly handled by the CoordinatedPlatformLayer. The “coordinated” prefix of that name is not without meaning: as threaded accelerated compositing is usually used nowadays, passing the layer damage to WebKit’s compositor must be coordinated between the main thread and the compositor thread.

When the layer damage of each layer is passed to WebKit’s compositor, it’s stored in the TextureMapperLayer that corresponds to the given layer’s CoordinatedPlatformLayer. With that, and with all other layer-level updates, WebKit’s compositor can start computing the frame damage, i.e. the final damage that is passed to the very end of the pipeline.

The first step to building frame damage is to process the layer updates. Layer updates describe changes of various layer properties such as size, position, transform, opacity, background color, etc. Many of those updates have a visual impact on the final frame, therefore a portion of frame damage must be inferred from those changes. For example, a layer’s transform change that effectively changes the layer position means that the layer visually disappears from one place and appears in the other. Thus the frame damage has to account for both the layer’s old and new position.
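The transform example above can be sketched as follows. This is a simplified Python illustration that assumes the transform is a pure translation; the function names are hypothetical and do not come from WebKit.

```python
def layer_frame_rect(position, size, translation):
    """Rectangle (x, y, width, height) a layer occupies in the frame."""
    x, y = position
    width, height = size
    tx, ty = translation
    return (x + tx, y + ty, width, height)


def damage_for_transform_change(position, size, old_translation, new_translation):
    """Frame damage inferred from a change of a layer's translation.

    The layer disappears from its old place and appears in the new one,
    so both rectangles have to be repainted.
    """
    old_rect = layer_frame_rect(position, size, old_translation)
    new_rect = layer_frame_rect(position, size, new_translation)
    if old_rect == new_rect:
        return []  # no visual change, hence no damage
    return [old_rect, new_rect]


rects = damage_for_transform_change((10, 20), (100, 50), (0, 0), (30, 0))
print(rects)
```

A real compositor also has to handle rotations, scaling, opacity and other properties, which this sketch deliberately leaves out.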

Once the layer updates are processed, WebKit’s compositor has a full set of information to take the layer damage of each layer into account. Thus in the second step, WebKit’s compositor traverses the tree formed out of TextureMapperLayer objects and collects their layer damages. Once the layer damage of a certain layer is collected, it’s transformed from the layer coordinate space into a global coordinate space so that it can be added to the frame damage directly.
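The second step can be illustrated with a small Python sketch. It assumes, for simplicity, that each layer is only translated relative to its parent; the `Layer` class and `collect_frame_damage` function are hypothetical stand-ins, not the TextureMapperLayer API.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    """Hypothetical layer: an offset relative to its parent, its own damage
    (rects in layer coordinates) and child layers."""
    offset: tuple
    damage: list = field(default_factory=list)
    children: list = field(default_factory=list)


def collect_frame_damage(layer, parent_origin=(0, 0)):
    """Walk the layer tree and return all layer damage in frame coordinates."""
    # Origin of this layer in the global (frame) coordinate space.
    origin = (parent_origin[0] + layer.offset[0],
              parent_origin[1] + layer.offset[1])
    # Move each dirty rect from layer space into frame space.
    frame_damage = [(x + origin[0], y + origin[1], w, h)
                    for x, y, w, h in layer.damage]
    for child in layer.children:
        frame_damage.extend(collect_frame_damage(child, origin))
    return frame_damage


root = Layer(offset=(0, 0), damage=[(0, 0, 10, 10)], children=[
    Layer(offset=(100, 50), damage=[(5, 5, 20, 20)], children=[
        Layer(offset=(10, 10), damage=[(0, 0, 4, 4)]),
    ]),
])
print(collect_frame_damage(root))
```

With arbitrary transforms, each dirty rectangle would instead be mapped through the accumulated transformation matrix and replaced by the bounding box of the result.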

After those two steps, the frame damage is ready. At this point, it can be used for a couple of extra use cases:

- for WebKit’s compositor itself to perform some extra optimizations — as will be explained in the WebKit’s compositor optimizations section,

- for layout tests.

Eventually, regardless of those extra uses, WebKit’s compositor composes the frame and sends it (or rather a handle to it) to the UI process along with the frame damage using the IPC mechanism.

In the UI process, there are basically two options for the frame damage: it can be either consumed or ignored, depending on the platform-facing implementation. At the moment of writing:

- GTK port will consume the damage (see (…)/gtk/AcceleratedBackingStoreDMABuf.cpp)

- WPE port will consume the damage only if the new WPE platform API is used along with the following platforms:

  - Wayland (see (…)/WPEPlatform/wpe/wayland/WPEViewWayland.cpp)

  - DRM (see (…)/WPEPlatform/wpe/drm/WPEViewDRM.cpp)

Once the frame damage is consumed, it means that it reached the platform and thus the pipeline ends for that frame.

Current status of the implementation #

At the moment of writing, the damage propagation feature is run-time-disabled by default (PropagateDamagingInformation feature flag) and compile-time enabled by default for GTK and WPE (with new platform API) ports.

Overall, the feature works pretty well in the majority of real-world scenarios. However, there are still some uncovered code paths that lead to visual glitches. Therefore it’s fair to say the feature is still a work in progress.

The work, however, is pretty advanced. Moreover, the feature is set to a testable state and thus it’s active throughout all the layout test runs on CI.

Not only is the feature exercised by every layout test that tests any kind of rendering, but it also has quite a lot of dedicated layout tests, not to mention the unit tests covering the Damage class.

In terms of functionalities, when the feature is enabled it:

- activates the damage propagation pipeline and hence propagates the damage up to the platform,

- activates additional WebKit-compositor-level optimizations.

Damage propagation #

When the feature is enabled, the main goal is to activate the damage propagation pipeline so that eventually the damage can be provided to the platform. In reality, however, a substantial part of the pipeline is always active, regardless of whether the feature is enabled or even compiled in. This part of the pipeline ends before the damage reaches CoordinatedPlatformLayer and is always active because it has long been used for layer-level optimizations. More specifically, this part of the pipeline existed long before the damage propagation feature and used layer damage to optimize the painting of layers to intermediate surfaces.

Because of the above, when the feature is enabled, only the part of the pipeline that starts with CoordinatedPlatformLayer is activated. It is, however, still a significant portion of the pipeline and therefore it implies additional CPU/memory costs.

WebKit’s compositor optimizations #

When the feature is activated and the damage flows through the WebKit’s compositor, it creates a unique opportunity for the compositor to utilize that information and reduce the amount of painting/compositing it has to perform. At the moment of writing, the GTK/WPE WebKit’s compositor is using the damage to optimize the following:

- to apply global glScissor to define the smallest possible clipping rect for all the painting it does, thus reducing the amount of painting,

- to reduce the amount of painting when compositing the tiles of the layers using tiled backing stores.
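The two optimizations listed above can be illustrated with a rough Python sketch. How WebKit's compositor actually computes these is not covered here, so the sketch makes assumptions: the clipping rect is taken to be the bounding box of the damage, tiles are square and laid out on a regular grid, and both function names are hypothetical. The only OpenGL fact relied upon is that glScissor takes a box whose origin is the lower-left corner of the window.

```python
def scissor_box(damage_rects, frame_height):
    """Bounding box of the damage as (x, y, width, height) with a
    bottom-left origin, the convention glScissor uses.

    Damage rects are (x, y, width, height) with a top-left origin.
    Returns None when there is no damage.
    """
    if not damage_rects:
        return None
    left = min(x for x, _, _, _ in damage_rects)
    top = min(y for _, y, _, _ in damage_rects)
    right = max(x + w for x, _, w, _ in damage_rects)
    bottom = max(y + h for _, y, _, h in damage_rects)
    # Flip the vertical axis: top-left origin -> bottom-left origin.
    return (left, frame_height - bottom, right - left, bottom - top)


def damaged_tiles(damage_rects, tile_size, columns, rows):
    """Set of (column, row) tiles that intersect at least one damage rect."""
    tiles = set()
    for x, y, w, h in damage_rects:
        if w <= 0 or h <= 0:
            continue
        first_col = max(x // tile_size, 0)
        last_col = min((x + w - 1) // tile_size, columns - 1)
        first_row = max(y // tile_size, 0)
        last_row = min((y + h - 1) // tile_size, rows - 1)
        for row in range(first_row, last_row + 1):
            for col in range(first_col, last_col + 1):
                tiles.add((col, row))
    return tiles


damage = [(10, 10, 100, 50), (200, 120, 40, 40)]
print(scissor_box(damage, frame_height=600))
print(sorted(damaged_tiles(damage, tile_size=128, columns=8, rows=5)))
```

In this example only 3 out of 40 tiles intersect the damage, so the remaining tiles would not need to be composited again.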

Detailed descriptions of the above optimizations are well beyond the scope of this article and thus will be provided in one of the next articles on the subject of damage propagation.

Trying it out #

As mentioned in the above sections, the feature only works in the GTK and the new-platform-API-powered WPE ports. This means that:

- In the case of GTK, one can use MiniBrowser or any up-to-date GTK-WebKit-derived browser to test the feature.

- In the case of WPE with the new WPE platform API, the cog browser cannot be used as it uses the old API. Therefore, one has to use MiniBrowser with the --use-wpe-platform-api argument to activate the new WPE platform API.

Moreover, as the feature is run-time-disabled by default, it’s necessary to activate it. In the case of MiniBrowser, the switch is --features=+PropagateDamagingInformation.

Building & running the GTK MiniBrowser #

For quick testing, it’s highly recommended to use the latest revision of WebKit@main with the wkdev SDK container and the GTK port. Assuming one has set up the container, the commands to build and run GTK’s MiniBrowser are as follows:

    # building:
    ./Tools/Scripts/build-webkit --gtk --release

    # running with visualizer
    WEBKIT_SHOW_DAMAGE=1 \
    Tools/Scripts/run-minibrowser \
      --gtk --release --features=+PropagateDamagingInformation \
      'https://webkit.org/blog-files/3d-transforms/poster-circle.html'

    # running without visualizer
    Tools/Scripts/run-minibrowser \
      --gtk --release --features=+PropagateDamagingInformation \
      'https://webkit.org/blog-files/3d-transforms/poster-circle.html'

Building & running the WPE MiniBrowser #

Alternatively, a WPE port can be used. Assuming some Wayland display is available, the commands to build and run the MiniBrowser are the following:

    # building:
    ./Tools/Scripts/build-webkit --wpe --release

    # running with visualizer
    WEBKIT_SHOW_DAMAGE=1 \
    Tools/Scripts/run-minibrowser \
      --wpe --release --use-wpe-platform-api --features=+PropagateDamagingInformation \
      'https://webkit.org/blog-files/3d-transforms/poster-circle.html'

    # running without visualizer
    Tools/Scripts/run-minibrowser \
      --wpe --release --use-wpe-platform-api --features=+PropagateDamagingInformation \
      'https://webkit.org/blog-files/3d-transforms/poster-circle.html'

Trying various URLs #

While any URL can be used to test the feature, below is a short list of recommendations to check:

- https://igalia.com — great for testing regular web page interactions and scrolling,

- https://webkit.org/blog-files/3d-transforms/poster-circle.html — great to see CSS transformations and animations handling,

- https://scony.github.io/web-examples/canvas-2d/drawing-noise-in-moving-rect.html — great to see how damage works with canvas using CanvasRenderingContext2D.

Please note that at the moment accelerated canvas is not supported and hence ,-CanvasUsesAcceleratedDrawing must be added to the --features=(...) list.

It’s also worth mentioning that the WEBKIT_SHOW_DAMAGE=1 environment variable disables the damage-driven optimizations of the GTK/WPE WebKit’s compositor, and therefore some glitches that are seen without the envvar may not be seen when it is set. The URL to this presentation is a great example to explore various glitches that are yet to be fixed. To trigger them, it’s enough to navigate around the presentation using the top/right/down/left arrows.

Coming up next #

This article was meant to scratch the surface of the broad topic of damage propagation. While it focused mostly on introducing basic terminology and describing the damage propagation pipeline in more detail, it only briefly mentioned or completely skipped the following aspects of the feature:

- the problem of storing the damage information efficiently,

- the damage-driven optimizations of the GTK/WPE WebKit’s compositor,

- the most common use cases for the feature,

- the benchmark results on desktop-class and embedded devices.

Therefore, in the next articles, the above topics will be examined to a larger extent.

References #

- The new WPE platform API is still not released and thus it’s not yet officially announced. Some information on it, however, is provided by this presentation prepared for a WebKit contributors meeting.

- The platform that WebKit renders to depends on the WebKit port:

  - in the case of the GTK port, the platform is GTK, so the rendering is done to a GtkWidget,

  - in the case of the WPE port with the new WPE platform API, the platform is one of the following:

    - Wayland: in that case rendering is done to the system’s compositor,

    - DRM: in that case rendering is done directly to the screen,

    - headless: in that case rendering is usually done into a memory buffer.