The thrilling story about the H.239 support in Ekiga

The development of Ekiga 4.0 has begun, and three months ago I started a project aimed at supporting H.239 in Ekiga. H.239 is an ITU recommendation titled “Role management and additional media channels for H.300-series terminals”. Its purpose is to define procedures for using more than one video channel, and for labelling those channels with a role.

A traditional video-conference has an audio channel, a video channel and an optional data channel. The video channel typically carries the camera image of the participants. The H.239 recommendation defines rules and messages for establishing an additional video channel, often to transmit presentation slides, while still transmitting the video of the presenter.

For presentations in multi-point conferencing, H.239 defines token procedures to guarantee that only one endpoint in the conference sends the additional video channel which is then distributed to all conference participants.
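To make the token idea concrete, here is a minimal sketch in Python of a single-owner token. It is only an illustration of the "one sender at a time" guarantee: the `PresentationToken` class, its methods and the endpoint names are hypothetical, and the message exchange defined by the recommendation is richer than this.

```python
from typing import Optional


class PresentationToken:
    """Hypothetical arbiter for a single presentation token (not the H.239 wire protocol)."""

    def __init__(self) -> None:
        # Endpoint currently allowed to send the additional video channel.
        self.owner: Optional[str] = None

    def request(self, endpoint: str) -> bool:
        """Grant the token if it is free or already held by this endpoint."""
        if self.owner is None or self.owner == endpoint:
            self.owner = endpoint
            return True
        return False

    def release(self, endpoint: str) -> bool:
        """Free the token; only the current owner may release it."""
        if self.owner == endpoint:
            self.owner = None
            return True
        return False


token = PresentationToken()
print(token.request("alice"))  # alice asks first and gets the token
print(token.request("bob"))    # bob is refused while alice holds it
token.release("alice")
print(token.request("bob"))    # the token is free again, so bob gets it
```

In this simplified model a request is simply refused while another endpoint holds the token; whoever holds it is the only one allowed to send the slides channel.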

Ekiga depends on Opal, a library that implements, among other things, the protocols used to send voice over IP networks (VoIP). According to the documentation, Opal had already supported H.239 for a while, so we assumed that enabling it in Ekiga would be straightforward.

After submitting a few patches to Opal and its base library, PTlib, I set up a jhbuild environment to automate the building of Ekiga and its dependencies. Soon I realized that the task was not going to be as simple as we had initially assumed: the H.323 support in Ekiga is not as mature as the SIP support.

I have to mention that, throughout the development process, I tested my rough code against this H.239 application sample, a small MS Windows program that streams two video channels (the main role and the slides role). The good news is that it works fine in Wine.

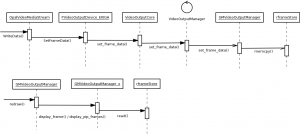

Well, the truth is that activating the client support for H.239 in Ekiga was easy. The real problem began with the video output mechanism. Ekiga has a highly complex design for drawing video frames. Too complex, in my opinion. One of the first things I did to understand how video frames are displayed was to sketch a sequence diagram, from the moment a decoded frame is delivered by Opal to the moment the frame is displayed on the screen. And here is the result:

As I said, it is too complex for me, but the second stream had to be displayed. Fearfully, I started to refactor some code inside Ekiga, adding more parameters to handle another video stream. In the end I just pressed ahead and submitted a bunch of patches that finally display a second stream.

The current outcome is shown in the following screencast: Screencast Ekiga H.239 (OGV / 7.8M)
