OpenGL is an API for rendering 2D and 3D vector graphics, now managed by the non-profit technology consortium Khronos Group. It is a multi-platform API found on devices of many form factors (from desktop computers to embedded devices) and on many operating systems (GNU/Linux, Microsoft Windows, Mac OS X, etc.).

As Khronos only defines the OpenGL API, implementors are free to write the OpenGL implementation they wish. For example, on GNU/Linux systems, NVIDIA provides its own proprietary libraries while other manufacturers like Intel use Mesa, one of the most popular open source OpenGL implementations.

Because of this implementation freedom, we need a way to check that implementations follow the OpenGL specifications. Khronos provides its own OpenGL conformance test suite, but your company needs to become a Khronos Adopter member to have access to it. However, there is an unofficial open source alternative: piglit.

Piglit

Piglit is an open-source OpenGL implementation conformance test suite created by Nicolai Hähnle in 2007. Since then, the number of tests covering different OpenGL versions and extensions has kept growing: today a complete piglit run executes more than 35,000 tests.

Piglit is one of the tools widely used in Mesa to check that commits adding new functionality or modifying the source code don't break OpenGL conformance. If you are thinking of contributing to Mesa, this is definitely one of the tools you want to master.

How to compile piglit

Before compiling piglit, you need to have the following dependencies installed on your system. Some of them are packaged in modern GNU/Linux distributions (such as Python, numpy, make...), while others you might need to compile yourself (waffle).

- Python 2.7.x

- Python mako module

- numpy

- cmake

- GL, glu and glut libraries and development packages (i.e. headers)

- X11 libraries and development packages (i.e. headers)

- waffle
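A quick way to see which command-line tools from the list above are missing is to probe the PATH before starting the build. This is only a minimal sketch: the function name `missing_tools` and the exact tool names passed to it are assumptions, since package and binary names vary between distributions.

```shell
#!/bin/sh
# Print each command from the argument list that is not found in PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Tool names below are assumptions; adjust them to your distribution.
missing_tools git cmake make python

# Python modules can be probed in a similar way, for example:
#   python -c 'import mako, numpy'
```

An empty output means every listed tool was found. Libraries and headers (GL, glu, glut, X11) are not covered by this check; cmake reports them when they are missing.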

Waffle is not available in the Debian/Ubuntu repositories, so you need to compile it manually and, optionally, install it in the system:

$ git clone git://github.com/waffle-gl/waffle

$ cd waffle

$ cmake . -Dwaffle_has_glx=1

$ make

$ sudo make install

Piglit is a project hosted on Freedesktop. To download it, you need git installed on your system; then run the corresponding git clone command:

$ git clone git://anongit.freedesktop.org/git/piglit

Once it finishes cloning the repository, enter the new directory and compile it:

$ cd piglit

$ cmake .

$ make

More information is available in the documentation.

As a result, all the test binaries end up inside the bin/ directory and it's possible to run them standalone. However, there are scripts to run all of them in a row.

Your first piglit run

After you have downloaded the piglit source code from its git repository and compiled it, you are ready to run the test suite.

First of all, make sure that everything is set up correctly:

$ ./piglit run tests/sanity.tests results/sanity.results

The results will be inside the results/sanity.results directory. There is a way to process those results and show them in a human-readable form, which I will cover in the next section.

If it fails, most likely it is because libwaffle is not found in the path. If everything went fine, you can execute the piglit test suite against your graphics driver.
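If the sanity run fails because libwaffle cannot be found, one common workaround is to add the directory where waffle was installed to the dynamic linker's search path. The sketch below assumes /usr/local/lib, a typical default for `make install`; the function name `prepend_lib_dir` is only illustrative, and your build may have placed the library elsewhere (lib64 or a multiarch directory, for instance).

```shell
# Prepend a directory to LD_LIBRARY_PATH unless it is already listed.
prepend_lib_dir() {
    dir=$1
    case ":${LD_LIBRARY_PATH:-}:" in
        *":$dir:"*) ;;   # already present, nothing to do
        *) LD_LIBRARY_PATH="$dir${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}" ;;
    esac
    export LD_LIBRARY_PATH
}

# Assumption: waffle was installed under /usr/local/lib.
prepend_lib_dir /usr/local/lib
```

The setting only lasts for the current shell session. Running `sudo ldconfig` after installing waffle may make it unnecessary, depending on how your system's linker cache is configured.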

$ ./piglit run tests/all results/all-reference

Remember that it's going to take a while to finish, so grab a cup of coffee and enjoy it.

Analyze piglit output

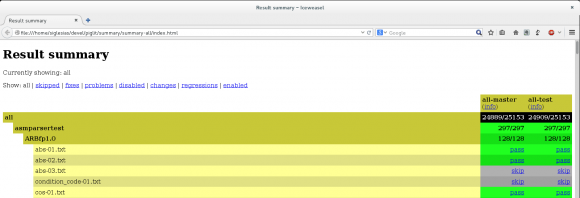

Piglit provides several tools to convert the JSON results into a more readable output: the CLI output tool (piglit-summary.py) and the HTML output tool (piglit-summary-html.py). I'm going to explain the latter first because its output is very easy to understand when you are starting to use this test suite.

You can run these scripts standalone, but the piglit binary calls each of them depending on its arguments. I am going to use this binary in all the examples because it's just one command to remember.

HTML output

In order to create an HTML output of a previously saved run, the following command is what you need:

$ ./piglit summary html --overwrite summary/summary-all results/all-reference

- You can append more results at the end if you would like to compare them. The first one is the reference for the others, for example when counting the number of regressions.

$ ./piglit summary html --overwrite summary/summary-all results/all-master results/all-test

- The --overwrite argument overwrites the contents of the summary destination directory if it already exists.
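Because the order of the results directories decides which run is the reference, it can help to wrap the comparison in a small function whose parameters are named. This is only a convenience sketch: `html_compare` and the `PIGLIT` variable are hypothetical names, and the function just builds the same `summary html` command shown above.

```shell
# Command used to invoke piglit; override it if piglit lives elsewhere.
PIGLIT=${PIGLIT:-./piglit}

# Build an HTML comparison of two saved runs.
# $1 = summary name, $2 = reference results, $3 = results under test.
html_compare() {
    name=$1
    reference=$2
    candidate=$3
    "$PIGLIT" summary html --overwrite "summary/$name" "$reference" "$candidate"
}

# Usage, with the directory names from the example above:
#   html_compare summary-all results/all-master results/all-test
```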

Finally open the HTML summary web page in a browser:

$ firefox summary/summary-all/index.html

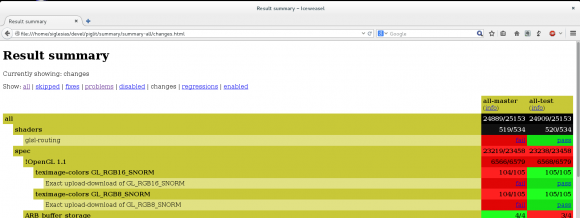

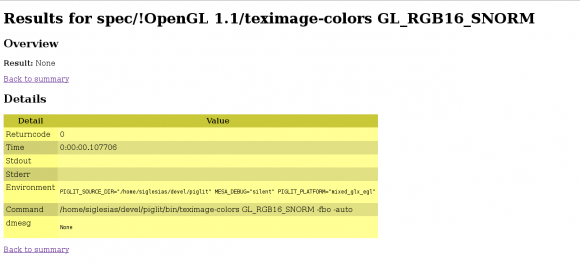

Each test has a background color depending on the result: red (failed), green (passed), orange (warning), grey (skipped) or black (crashed). If you click on its respective link in the right column, you will see the output of that test and how to run it standalone.

There are more pages:

- skipped.html: it lists all the skipped tests.

- fixes.html: it lists all the tests that failed before and are now fixed.

- problems.html: it lists all the failing tests.

- disabled.html: it lists the tests that were executed before but are now skipped.

- changes.html: when you compare two or more different piglit runs, this page shows all the changes between the new results and the reference (the first results/ argument):

  - Tests that were previously skipped and are now executed (whatever the result: fail, crash or pass).

  - Everything listed in regressions.html.

  - Any other change with respect to the reference: crashed tests, passed tests that were previously failing or skipped, etc.

- regressions.html: when you compare two or more different piglit runs, this page shows the previously passing tests that now fail.

- enabled.html: it lists all the executed tests.

I recommend that you explore which pages are available and what kind of information each one provides. There are more pages, such as info, which is linked from the first row of each results column on the right-hand side of the screen and gathers all the information about hardware, drivers, supported OpenGL version, etc.

Test details

As I said before, you can see what kind of error output (if any) a test has written, how long it took to execute and which arguments were given to the binary.

There is also a dmesg field which shows the kernel errors that appeared during each test execution. If these errors are graphics driver related, you can easily detect which test was guilty. To enable this output, you need to add the --dmesg argument to piglit run, but I will explain this and other parameters in the next post.

Text output

The usage of the CLI tool is very similar to that of the HTML one, except that its output appears in the terminal.

$ ./piglit summary console results/all-reference

As its output is not saved in any file, there is no argument for a destination directory and no overwrite argument either.

Like the HTML output tool, you can append several result files to compare them. The tool will output one line per test together with its result (pass, fail, crash, skip) and a summary with all the stats at the end.

As it prints the output in the console, you can take advantage of tools like grep to look for specific combinations of results:

$ ./piglit summary console results/all-reference | grep fail
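A plain `grep fail` also matches test names that contain the word "fail" and the counters in the summary. The following sketch is a stricter filter that only looks at the result column. It assumes the console format described in this post (one `name: result` line per test), and `tests_with_status` is a hypothetical helper name.

```shell
# Print the names of tests whose result equals $1.
# Reads single-run console output on stdin.
tests_with_status() {
    awk -v want="$1" '
        # The result is the last field and the field before it ends with ":".
        NF >= 2 && $NF == want && $(NF-1) ~ /:$/ {
            sub(/: +[^ ]+ *$/, "")   # drop the trailing ": result"
            print
        }'
}

# Usage:
#   ./piglit summary console results/all-reference | tests_with_status fail
```

Summary lines such as `fail: 262` are ignored because their last field is a number, not a result name.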

This is an example of an output of this command:

$ ./piglit summary console results/all-reference

[...]

spec/glsl-1.50/compiler/interface-block-name-uses-gl-prefix.vert: pass

spec/EXT_framebuffer_object/fbo-clear-formats/GL_ALPHA16 (fbo incomplete): skip

spec/ARB_copy_image/arb_copy_image-targets GL_TEXTURE_CUBE_MAP_ARRAY 32 32 18 GL_TEXTURE_2D_ARRAY 32 16 15 11 12 5 5 1 2 14 15 9: pass

spec/glsl-1.30/execution/built-in-functions/fs-op-bitxor-uvec3-uint: pass

spec/ARB_depth_texture/depthstencil-render-miplevels 146 d=z16: pass

spec/glsl-1.10/execution/variable-indexing/fs-varying-mat2-col-row-rd: pass

summary:

pass: 25085

fail: 262

crash: 5

skip: 9746

timeout: 0

warn: 13

dmesg-warn: 0

dmesg-fail: 0

total: 35111
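The counters in the summary add up to the total (25085 + 262 + 5 + 9746 + 13 = 35111), so they can be turned into a single figure that is easier to track. The sketch below computes the share of executed tests that passed, where "executed" is taken as total minus skip. That definition and the name `pass_rate` are this example's own assumptions, not something piglit reports.

```shell
# Read console output on stdin and print the share of executed tests that passed.
# "Executed" means total minus skip here (an assumption of this sketch).
pass_rate() {
    awk '
        $1 == "pass:"  && NF == 2 { pass  = $2 }
        $1 == "skip:"  && NF == 2 { skip  = $2 }
        $1 == "total:" && NF == 2 { total = $2 }
        END {
            executed = total - skip
            if (executed > 0)
                printf "%.2f%% of %d executed tests passed\n", 100 * pass / executed, executed
        }'
}

# Usage:
#   ./piglit summary console results/all-reference | pass_rate
```

With the numbers above, it prints `98.90% of 25365 executed tests passed`.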

And this is the output when you compare two different piglit results:

$ ./piglit summary console results/all-reference results/all-test

[...]

spec/glsl-1.50/compiler/interface-block-name-uses-gl-prefix.vert: pass pass

spec/glsl-1.30/execution/built-in-functions/fs-op-bitxor-uvec3-uint: pass pass

summary:

pass: 25023

fail: 548

crash: 7

skip: 8264

timeout: 0

warn: 15

dmesg-warn: 0

dmesg-fail: 0

changes: 376

fixes: 2

regressions: 2

total: 33857
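When two runs are compared, each test line carries one result per run, so a specific transition can be picked out of the console output. The following is a sketch under the assumption that the lines look like the ones above (`name: reference-result new-result`); `tests_changed` is a hypothetical helper name.

```shell
# Print tests whose result went from $1 (reference run) to $2 (second run).
# Reads two-run console output on stdin.
tests_changed() {
    awk -v from="$1" -v to="$2" '
        # The last two fields are the results; the field before them ends with ":".
        NF >= 3 && $(NF-2) ~ /:$/ && $(NF-1) == from && $NF == to {
            sub(/: +[^ ]+ +[^ ]+ *$/, "")   # drop the trailing ": result result"
            print
        }'
}

# Usage: list tests that passed in the reference and fail in the new run.
#   ./piglit summary console results/all-reference results/all-test | tests_changed pass fail
```

Swapping the arguments (`tests_changed fail pass`) lists the tests that went from failing to passing.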

Output for Jenkins-CI

There is another script (piglit-summary-junit.py) that produces results in a format that Jenkins-CI understands, which is very useful when you have this continuous integration suite running somewhere. As I have not played with it yet, I leave it as an exercise for readers.

Conclusions

Piglit is an open-source OpenGL implementation conformance test suite widely used in projects like Mesa.

In this post I explained how to compile piglit, run it and convert the result files to a readable output. This is very useful when you are testing your latest Mesa patch before submitting it to the development mailing list, or when you are looking for regressions in the latest stable version of your graphics device driver.

The next post will cover how to run specific tests in piglit and explain some arguments that are very useful in specific cases. Stay tuned!