Storing and viewing local test results in ResultDB

This post was originally meant to be the final part of my series about Swarming, but it got quite long, so I decided to split it into two. This one introduces ResultDB and shows how to upload results from local test runs; the next one will explain how to store results from Swarming runs in ResultDB.

ResultDB and filtering results

To quote from the ResultDB docs, it “is a […] service for storing and retrieving test results.” It gives you a nice graphical view of the test results, and also has an API which is used for “nearly all pass-fail decisions on the builders”. We won’t use the API, though.

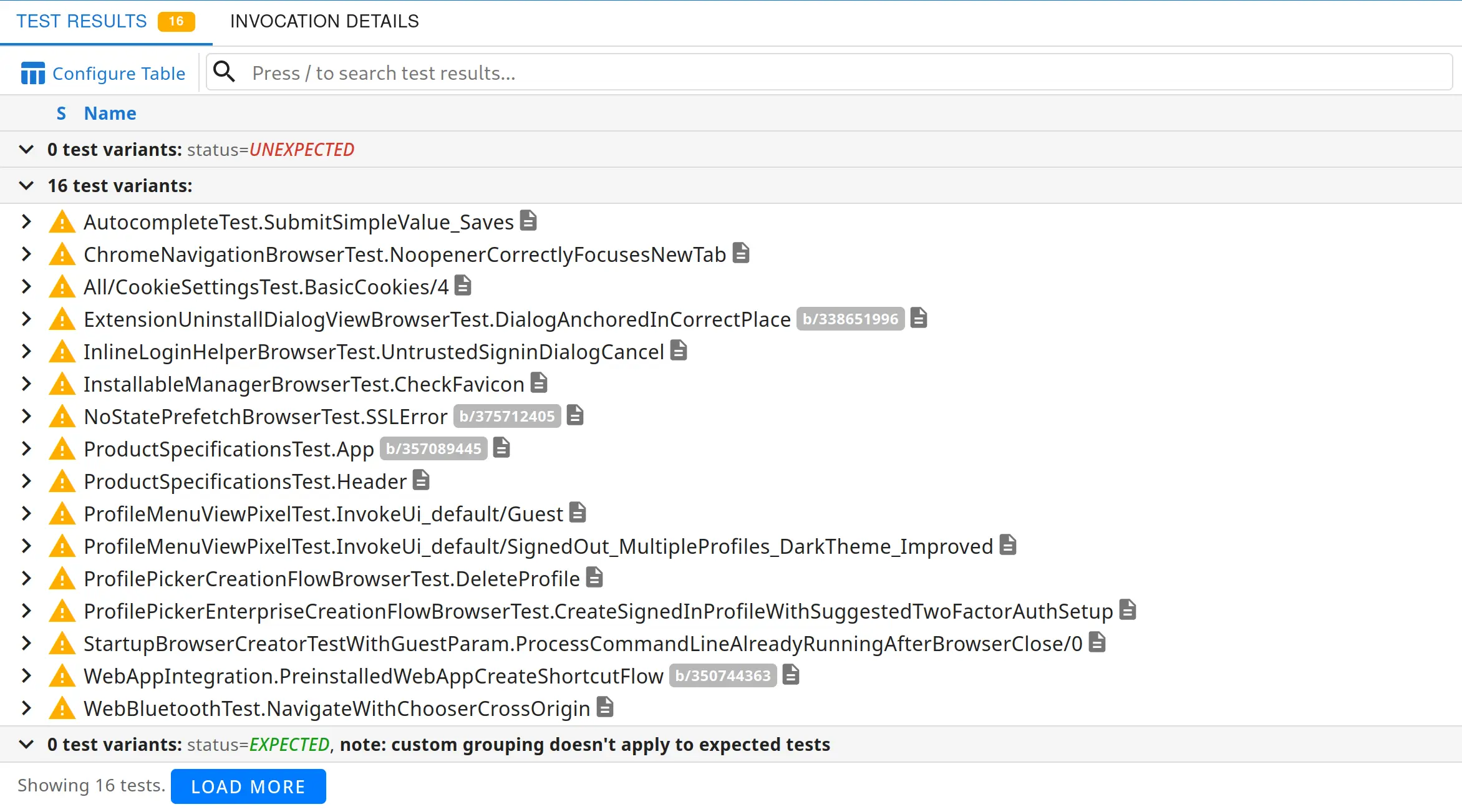

If you’ve looked at the test results of a CQ dry run before, you’ve already used ResultDB:

Graphical overview of a CQ dry run’s test results in ResultDB

One reason why ResultDB is so useful is that it makes test results easily searchable. You can filter by test name, test suite, step name, and even test duration. The search bar is at the top of the page, and you can also focus it by pressing /.

Once you focus the search bar, it tells you to “Press ↓ to see suggestions”. Doing so will show you the list of available filters. I recommend checking those out yourself, but I’ll summarize my favourites below with examples. In general, you can combine multiple filters by separating them with a space, and negate a filter by prefixing it with a -. Also note the blue “Load more” button at the bottom of the screenshot above: not all test results are loaded by default, and only the loaded results are searched.

The default search type when you just start typing is to filter by test ID or name. For GTest tests, the name consists of the test’s test suite name (in the GTest sense, not something like interactive_ui_tests), then the test fixture class if it exists, then the test case name, and finally the test variant index if it exists. For example, if you define a test case like so:

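TEST_P(AlignmentTest, VerifyCorrectPosition) {
  // ...
}

INSTANTIATE_TEST_SUITE_P(AlignmentTestCases, AlignmentTest, /* ... */);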

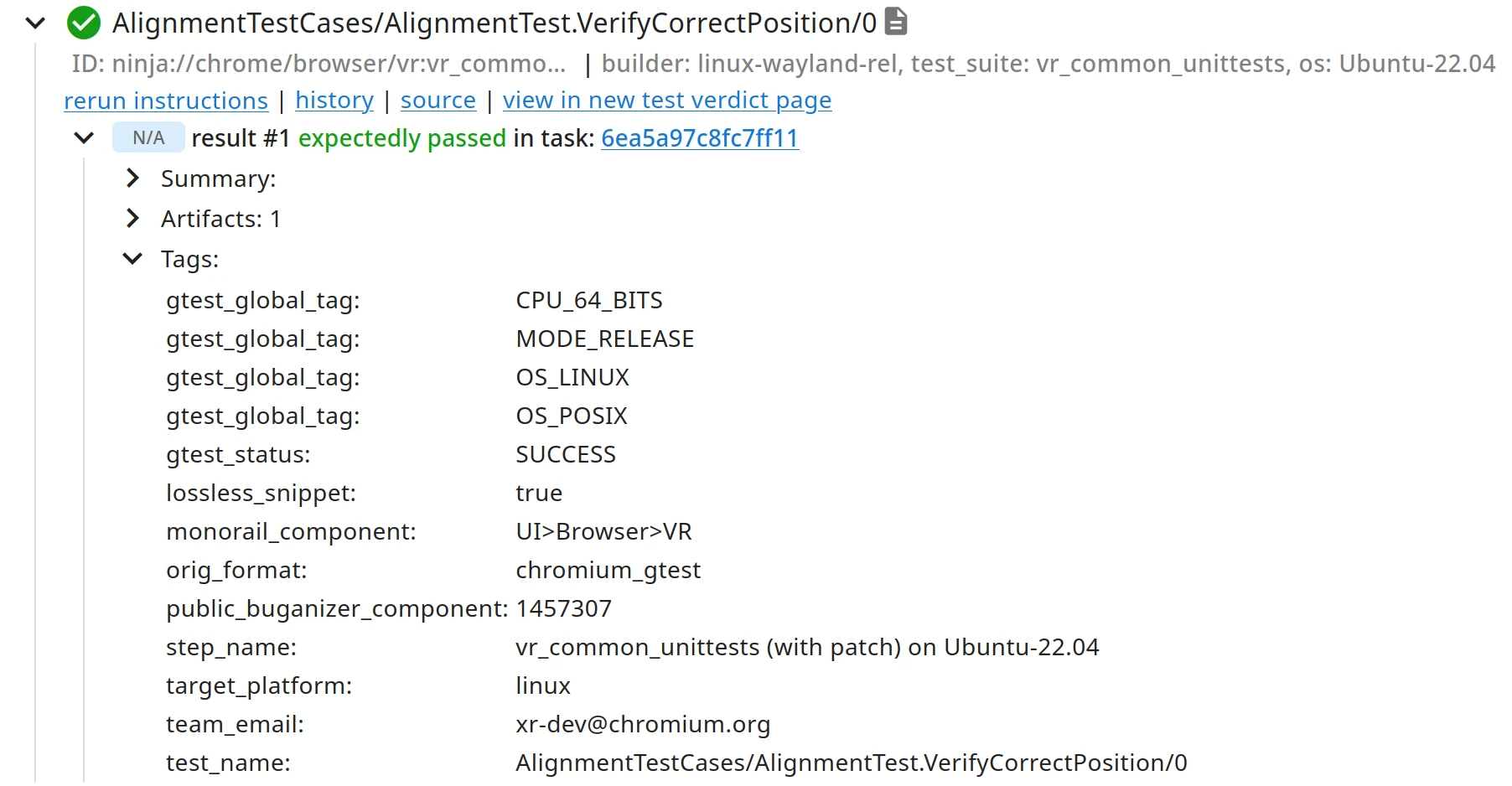

the name of its first variant will be AlignmentTestCases/AlignmentTest.VerifyCorrectPosition/0.

In almost all cases, a test’s ID consists of the prefix ninja: followed by the GN path of the

target the test is part of, and then the same components as in the name, although sometimes in

different order and with different separators. For the same test as above, the ID is

ninja://chrome/browser/vr:vr_common_unittests/AlignmentTest.VerifyCorrectPosition/AlignmentTestCases.0,

as it’s part of the test(vr_common_unittests) target in chrome/browser/vr/BUILD.gn.

If we expand the view for that test in ResultDB, we can see there’s more metadata, most of which is also searchable. Note that not all of the following might be available for results you uploaded yourself, though; test results from CI/CQ runs have some additional metadata like which build step a test belongs to.

Test metadata in ResultDB

In case you want to filter by test suite (in the “test binary name” sense), you can filter by “variant key-value pair”. In the screenshot above, these pairs are listed in gray right below the test name, after the ID. For example, if you’d like to only view the test results for all vr_common_unittests tests, search for v:test_suite=vr_common_unittests.

The tags that you can see above are also searchable. For me the most useful tag is the step name, which you can use to filter out test results from retries, e.g. -tag:step_name=interactive_ui_tests%20(retry%20shards%20with%20patch)%20on%20Ubuntu-22.04 (note the URL-encoded spaces) combined with v:test_suite=interactive_ui_tests will show you all interactive_ui_tests results excluding those from retries.
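As a small sketch of how such a query can be put together, the shell snippet below URL-encodes the spaces in a step name and combines the negated tag filter with the test-suite filter. The helper name encode_spaces is made up for illustration, and it only handles spaces, which is all the example above encodes; the resulting string is meant to be pasted into the search bar.

```shell
# Hypothetical helper: replace each space with %20, as in the example above.
encode_spaces() {
  printf '%s' "$1" | sed 's/ /%20/g'
}

step_name='interactive_ui_tests (retry shards with patch) on Ubuntu-22.04'
suite='interactive_ui_tests'

# Negate the step-name tag filter with a leading "-" and combine it with the
# test-suite variant filter by separating the two with a space.
query="-tag:step_name=$(encode_spaces "$step_name") v:test_suite=$suite"
printf '%s\n' "$query"
```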

Uploading results from local runs

Uploading to ResultDB happens via ResultSink, which “is a local HTTP server that proxies requests to ResultDB’s backend.” (source) It’s launched via rdb stream.

For most test types (including unittests, browser_tests, and interactive_ui_tests), the result_adapter tool can be used to parse the test results JSON file and send the data to ResultSink. If you’re interested in e.g. Blink’s web tests, you can check the “ResultSink integration/wrappers within Chromium” section of the docs for how that works.

For the rest of this post, we’ll use result_adapter.

To be able to upload the results from a test run, you’ll need to launch the tests with --test-launcher-summary-output set to a path to a JSON file to store the results, like so:
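Such a run could look roughly like the following sketch. The build directory out/Default is an assumption, test-results.json is the file name that shows up in the log output further down, and ozone_unittests is the suite used for the final screenshot in this post. The wrapper function run_tests_with_summary is a made-up name that just makes the binary and output path easy to swap.

```shell
# Hypothetical wrapper: run a test binary and have the test launcher write
# its JSON summary to the given path.
#   $1: path to the test binary (out/Default is an assumed build directory)
#   $2: path of the JSON file to create
run_tests_with_summary() {
  "$1" --test-launcher-summary-output="$2"
}

# Example call:
#   run_tests_with_summary out/Default/ozone_unittests test-results.json
```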

Now we can use rdb stream to upload the results stored in the JSON file:
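A sketch of that upload, pieced together from the flags described in this section and from the command line that rdb stream echoes in the log output further down. The spelling of the -realm flag and the wrapper function upload_results are assumptions, and the relative path to result_adapter assumes you’re in the root of a Chromium checkout.

```shell
# Hypothetical wrapper around the upload step.
#   $1: JSON file written via --test-launcher-summary-output
upload_results() {
  # -new creates a fresh invocation; chromium:try is the realm used in this
  # post (the -realm flag name is an assumption).
  # Everything after the first "--" is the command that rdb stream runs while
  # ResultSink is available; the trailing "echo" is the dummy command that
  # result_adapter wraps.
  rdb stream -new -realm chromium:try -- \
    tools/resultdb/result_adapter gtest -result-file "$1" -- echo
}

# Example call:
#   upload_results test-results.json
```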

rdb stream -new does two things: it starts the ResultSink server, and it creates a new ResultDB invocation, which is what ResultDB calls a set of test results. Every invocation must be in a realm, which in our case we’ll always set to chromium:try.

After its parameters, rdb stream takes another command that it will execute while providing the ResultSink server. In our case that’s result_adapter, which you’ll need to tell where to find the test results via -result-file as well as the results’ format, which usually is gtest.

result_adapter is also meant to wrap a command, usually the one that runs the tests and creates the result JSON file – but in our case that has already happened, so we just pass a dummy echo invocation to make result_adapter happy.

Running rdb stream -new might require you to run luci-auth login first. It should print an error message with the exact luci-auth invocation when you run it for the first time.

When logged in, running the above command should print something like this:

rdb-stream: created invocation - https://luci-milo.appspot.com/ui/inv/u-igalia-2024-12-11-17-14-00-e83b2fb432f5d378

[W2024-12-11T18:14:36.705083+01:00 151647 0 deadline.go:161] AdjustDeadline without Deadline in LUCI_CONTEXT. Assuming Deadline={grace_period: 30.00}

[I2024-12-11T18:14:36.706660+01:00 151647 0 sink.go:276] SinkServer: warm-up started

[I2024-12-11T18:14:36.706761+01:00 151647 0 sink.go:346] SinkServer: starting HTTP server...

[I2024-12-11T18:14:36.709869+01:00 151647 0 sink.go:281] SinkServer: warm-up ended

[I2024-12-11T18:14:36.710154+01:00 151647 0 cmd_stream.go:492] rdb-stream: starting the test command - ["tools/resultdb/result_adapter" "gtest" "-result-file" "test-results.json" "--" "echo"]

Warning: no '=' in invocation-link-artifacts pair: "", ignoring

[I2024-12-11T18:14:36.783053+01:00 151647 0 cmd_stream.go:488] rdb-stream: the test process terminated

[I2024-12-11T18:14:36.783112+01:00 151647 0 sink.go:371] SinkServer: shutdown started

[I2024-12-11T18:14:36.783155+01:00 151647 0 sink.go:349] SinkServer: HTTP server stopped with "http: Server closed"

[I2024-12-11T18:14:36.783177+01:00 151647 0 sink_server.go:96] SinkServer: draining TestResult channel started

[I2024-12-11T18:14:37.227940+01:00 151647 0 sink_server.go:98] SinkServer: draining TestResult channel ended

[I2024-12-11T18:14:37.227999+01:00 151647 0 sink_server.go:100] SinkServer: draining Artifact channel started

[I2024-12-11T18:14:37.832039+01:00 151647 0 sink_server.go:102] SinkServer: draining Artifact channel ended

[I2024-12-11T18:14:37.832149+01:00 151647 0 sink.go:374] SinkServer: shutdown completed successfully

[I2024-12-11T18:14:37.832286+01:00 151647 0 cmd_stream.go:420] rdb-stream: exiting with 0

[I2024-12-11T18:14:37.832581+01:00 151647 0 cmd_stream.go:445] Caught InterruptEvent

[I2024-12-11T18:14:37.832637+01:00 151647 0 terminate_unix.go:32] Sending syscall.SIGTERM to subprocess

[W2024-12-11T18:14:37.832663+01:00 151647 0 cmd_stream.go:451] Could not terminate subprocess (os: process already finished), cancelling its context

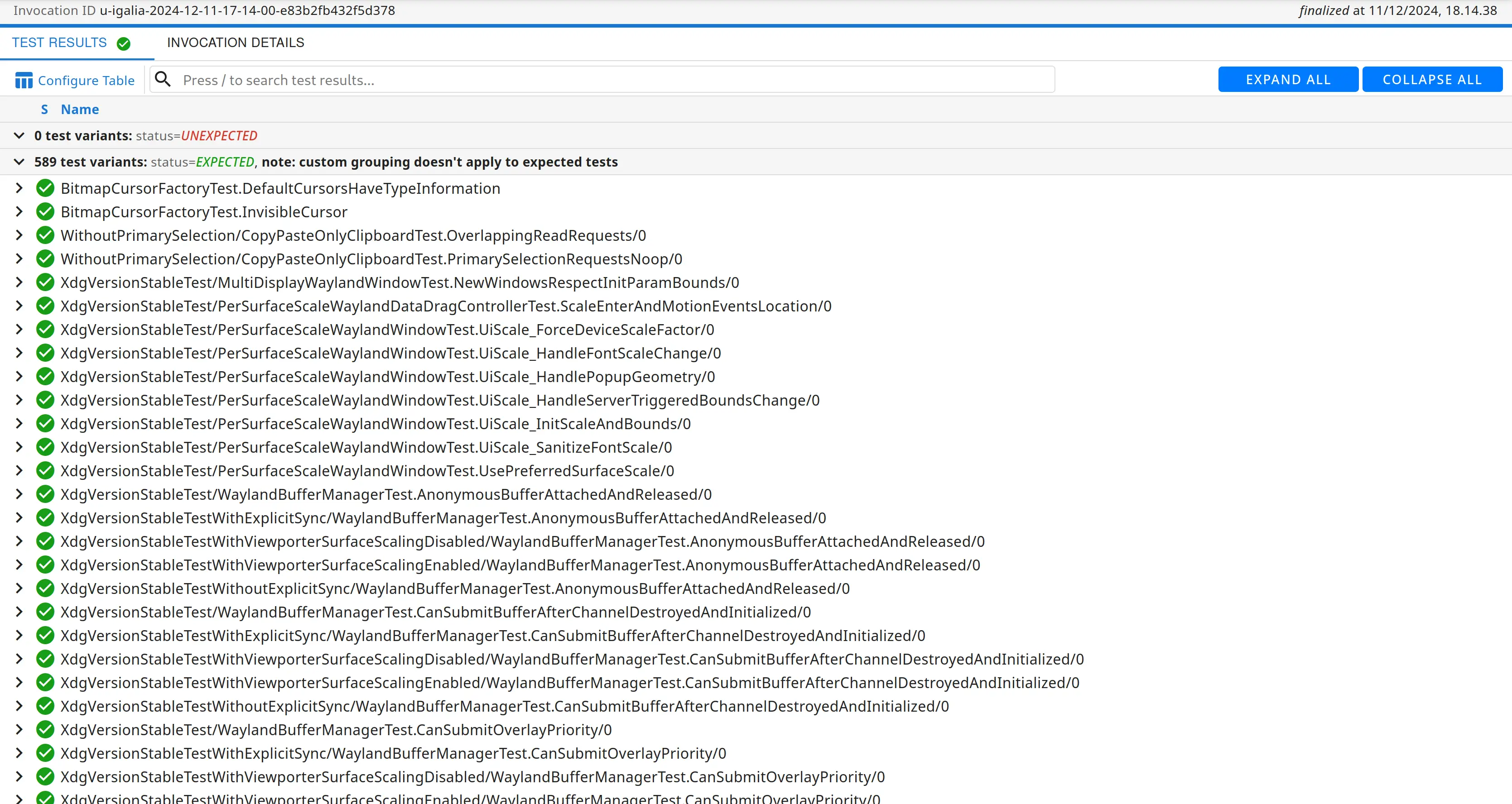

rdb-stream: finalized invocation - https://luci-milo.appspot.com/ui/inv/u-igalia-2024-12-11-17-14-00-e83b2fb432f5d378

Most of the output isn’t really useful, but what we’re interested in is the link to the invocation at the very end: this is where we can actually view the graphical version of the test results.

ResultDB displaying the results of our local ozone_unittests

run

We now have a nicely navigable view of our results, can use all of ResultDB’s filtering features, and have a link we can share with others to make our results available to them.
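If you’d like to grab that link in a script, one option is to filter saved output for the “finalized invocation” line. This is only a sketch: it assumes the output of rdb stream was captured to a file (whether those lines go to stdout or stderr isn’t confirmed here, so capturing both is the safer bet), and invocation_url is a hypothetical helper name.

```shell
# Hypothetical helper: print the invocation URL from the last
# "finalized invocation" line of saved rdb stream output.
#   $1: file containing the captured output
invocation_url() {
  grep 'rdb-stream: finalized invocation - ' "$1" | sed 's/.* - //' | tail -n 1
}

# Example call, assuming the output was saved to rdb-output.log:
#   invocation_url rdb-output.log
```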

That’s it for this post – stay tuned for the next one where we’ll integrate Swarming with ResultDB!