It’s amazing to think about how much computing goes into something as simple as a keystroke, something we just take for granted. Recently, I was fixing a bug related to accessibility key events, and to do so I first had to understand the complex trip these events take when they arrive at the browser, from the X server all the way to the accessibility system.

Let me start from the beginning. I’m working on the accessibility of the Chromium browser on Linux. The bug was #1042864: keystrokes happening in native dialogs, like open and save dialogs, were not reported to the screen reader. The issue also affects Electron-based software; one important example is Visual Studio Code.

A cake with many layers

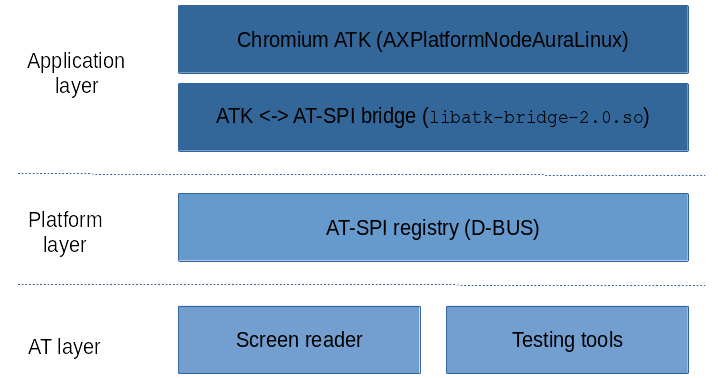

Linux accessibility is composed of many layers, and this fact stands out when working on a complex piece of software like Chromium which comes with its own UI toolkit.

Currently, the most straightforward way to build accessible applications for the Linux desktop is to implement the hooks provided by the ATK (Accessibility Toolkit) API. GTK+ widgets already do so, which means applications using them are accessible by default, but more complex software that implements custom widgets or its own toolkit needs to provide the code for the ATK entry points and emit the corresponding events. That’s the case of Chromium!

A screen reader, or any other assistive technology (AT), has to gather information and listen for events from any software running on the system, and transform them into something meaningful for its users, like speech or braille.

AT-SPI is the glue between these two ends: it runs at system level, observing what’s going on with applications and keeping a global registry of the accessible objects; it receives updates from applications, which translate local ATK objects into global AT-SPI objects; and it receives queries from ATs and notifies them when events they are interested in happen. It uses D-Bus for inter-process communication (IPC).

You can learn more about accessibility, in general and also in the web platform, in this great interview with my colleague Martin Robinson.

The trip of a keypress event

Let’s say we are building an AT in Python, making use of the pyatspi2 library. We want to listen for keypress events, so we call registerKeystrokeListener to register a callback function.
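As a rough illustration, the registration could look like the sketch below. The helper names (describe_key_event, on_key, main) are made up for this example, and the event fields used (event_string, hw_code, modifiers) are the ones I would expect on a pyatspi2 device event; treat the whole thing as an assumption-laden sketch rather than a reference.

```python
def describe_key_event(event):
    """Format a few fields of a device event for logging.

    The attribute names are assumed to match pyatspi2 device events.
    """
    return (
        f"{event.event_string!r} "
        f"hw_code={event.hw_code} modifiers={event.modifiers:#x}"
    )


def on_key(event):
    """Callback run for every key press we are notified about."""
    print(describe_key_event(event))
    # Returning False means we do not consume the keystroke, so it
    # should still reach the application that has the focus.
    return False


def main():
    # Imported here so the helpers above work without pyatspi2 installed.
    import pyatspi

    pyatspi.Registry.registerKeystrokeListener(
        on_key,
        kind=(pyatspi.KEY_PRESSED_EVENT,),
        mask=pyatspi.allModifiers(),
    )
    # Blocks and runs the main loop; needs a running accessibility bus.
    pyatspi.Registry.start()
```

Calling main() from a desktop session with accessibility enabled should print one line per key press; the callback itself can be tried with any object carrying those three attributes.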

Pyatspi2 actually wraps the AT-SPI function atspi_register_keystroke_listener, which eventually calls the remote method RegisterKeystrokeListener via D-Bus. The actual D-Bus remote calls happen in dbind.c.

We have jumped from our AT to the AT-SPI service, via IPC. The DeviceEventController interface provides the remote method mentioned above, and the actual code implementing it is in impl_register_keystroke_listener. Then, the listener is added to a list of listeners for key events, in spi_controller_register_device_listener; the listeners in this list will be notified when an event happens, in spi_controller_notify_keylisteners.

AT-SPI will sit there, waiting for the events from applications to arrive. They come over D-Bus, as they originate in different processes: the entry point for any D-Bus message in the AT-SPI core is handle_dec_method_from_idle. One of the operations, NotifyListenersSync, will run impl_notify_listeners_sync, which will later call the function spi_controller_notify_keylisteners we just mentioned and run all the registered listeners.
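To make the register-then-notify flow easier to picture, here is a toy, in-process model of it. It is not AT-SPI code: the class and method names are hypothetical, there is no D-Bus involved, and the real implementation also matches key sets and modifier masks, which this sketch leaves out.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class KeyEvent:
    """Minimal stand-in for a key event travelling through the registry."""
    event_string: str
    pressed: bool = True


@dataclass
class ToyDeviceEventController:
    """Hypothetical model of the listener list kept by the AT-SPI service."""
    listeners: List[Callable[[KeyEvent], bool]] = field(default_factory=list)

    def register_keystroke_listener(self, listener):
        # Plays the role of RegisterKeystrokeListener: remember the callback.
        self.listeners.append(listener)

    def notify_listeners_sync(self, event):
        # Plays the role of NotifyListenersSync: run every registered
        # listener and report whether any of them consumed the event.
        results = [bool(listener(event)) for listener in self.listeners]
        return any(results)
```

An AT would call register_keystroke_listener once, and an application (or its bridge) would call notify_listeners_sync for every keystroke; in the real system both calls cross process boundaries.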

Who will call the remote method NotifyListenersSync? Applications will have to do it, if they want to be accessible. They could implement this themselves, but they are likely using a wrapper library. In the case of GTK+, there is at-spi2-atk, which bridges ATK signals with the at-spi2-core D-Bus interfaces so applications don’t have to know them.

The bridge sports its own callback, named spi_atk_bridge_key_listener, and eventually calls the NotifyListenersSync method via D-Bus, at Accessibility_DeviceEventController_NotifyListenersSync. The callback was registered as an ATK key event listener in spi_atk_register_event_listeners: the function atk_add_key_event_listener, which is part of the ATK API, registers the key event listener, and it internally makes use of the AtkUtil struct as defined in atkutil.h.

AtkUtil functions are not implemented by the ATK library; instead, toolkits must provide them. GTK+ does it in _gtk_accessibility_override_atk_util; in the case of Chromium, the functions that populate the AtkUtil struct are defined in atk_util_auralinux_class_init. The particular function that registers the key event listener in Chromium is AtkUtilAuraLinuxAddKeyEventListener: the listener gets added to a list whose entries will later be called when an ATK key event is processed in Chromium, in HandleAtkKeyEvent.
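The pattern here, a library exposing an entry point whose behaviour is filled in by the toolkit, can be modelled in a few lines. Everything below is a hypothetical Python stand-in (ToyAtkUtil, ToyToolkit and their methods are invented for illustration); the real thing is a C struct of function pointers.

```python
class ToyAtkUtil:
    """Stand-in for the AtkUtil struct: slots that a toolkit must fill."""

    def __init__(self):
        self.add_key_event_listener = None
        self.remove_key_event_listener = None


def atk_add_key_event_listener(util, listener):
    """Library-side entry point: it only delegates to the toolkit's slot."""
    if util.add_key_event_listener is None:
        return 0  # no toolkit installed an implementation
    return util.add_key_event_listener(listener)


class ToyToolkit:
    """Stand-in for a toolkit (GTK+, Chromium) providing the functions."""

    def __init__(self):
        self._listeners = {}
        self._next_id = 1

    def install(self, util):
        # Comparable in spirit to populating AtkUtil at class init time.
        util.add_key_event_listener = self.add_key_event_listener
        util.remove_key_event_listener = self.remove_key_event_listener

    def add_key_event_listener(self, listener):
        listener_id = self._next_id
        self._next_id += 1
        self._listeners[listener_id] = listener
        return listener_id

    def remove_key_event_listener(self, listener_id):
        self._listeners.pop(listener_id, None)

    def handle_key_event(self, key):
        # Called by the toolkit when it processes a key event: run every
        # listener and report whether any of them consumed the key.
        results = [bool(cb(key)) for cb in list(self._listeners.values())]
        return any(results)
```

In this model the bridge would be the caller of atk_add_key_event_listener, and the toolkit would call handle_key_event from its own key handling code.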

Are we there yet?

There are more pieces of software involved in this issue: Chromium will receive key press events from the X server, involving another IPC connection and API layer, and there’s all the browser code managing them, where the solution was actually implemented. I will cover those parts, and the actual solution, in a future post (EDIT: it’s here!).

Meanwhile, I hope you enjoyed reading this, and happy hacking!
