Last month I attended the 20th edition of GORE (Spain’s Network Operator Group meeting), where I delivered an introductory talk about Snabb (Spanish). The slides of the talk are also available online (English).

Taking advantage of this presentation, I decided to write an introductory article about Snabb, something that would allow anyone to easily understand what Snabb is.

What is Snabb?

Snabb is a toolkit for developing network functions in user-space. This definition refers to two keywords that are worth clarifying: network functions and user-space.

What’s a network function?

A network function is any program that does something with network traffic. What kind of things can be done with traffic? For instance: reading packets, modifying their headers, creating new packets, discarding packets or forwarding them. Any network function is a combination of these basic operations. Here are some examples:

- Filtering function (e.g. firewalling): read incoming packets, compare them against a table of rules and execute an action (forward or drop).

- Traffic mapping (e.g. NAT): read incoming packets, modify their headers and forward them.

- Encapsulation (e.g. VPN): read incoming packets, create a new packet, embed the original packet into the new one and send it.
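The three examples above can be sketched in a few lines of plain Lua. This is only a toy illustration: packets are modelled as Lua tables, and the field names (`src_port`, `dst_port`, `payload`), the rule table and the helper functions are all hypothetical, not part of Snabb.

```lua
-- Toy packet: a Lua table with a few header fields (hypothetical layout).
local function make_packet(src_port, dst_port, payload)
   return { src_port = src_port, dst_port = dst_port, payload = payload }
end

-- Filtering: compare the packet against a table of rules, return an action.
local rules = {
   { dst_port = 22, action = "drop" },
   { dst_port = 80, action = "forward" },
}

local function filter(p)
   for _, rule in ipairs(rules) do
      if p.dst_port == rule.dst_port then return rule.action end
   end
   return "drop"   -- default policy when no rule matches
end

-- Traffic mapping: rewrite a header field and hand the packet on.
local function remap_port(p, new_port)
   p.dst_port = new_port
   return p
end

-- Encapsulation: build a new packet and embed the original one in it.
local function encapsulate(p, tunnel_port)
   return make_packet(tunnel_port, tunnel_port, p)
end

local p = make_packet(40000, 80, "GET /")
print(filter(p))                           --> forward
print(remap_port(p, 8080).dst_port)        --> 8080
print(encapsulate(p, 4789).payload == p)   --> true
```

Each function performs only the basic operations listed earlier (read, modify, create, drop or forward), which is all a network function ever does.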

What’s user-space networking?

In the last few years, there has been a new trend in writing network functions. This trend consists of writing the entire network function in user-space and not leaving any processing to the kernel.

Traditionally, when writing network functions we use the abstractions provided by the OS. The goal of any OS is to create abstractions over hardware that programs can use. This happens at many levels. For instance, when dealing with a hard drive we don’t need to think of heads, cylinders and sectors; we use a higher-level abstraction: the filesystem. Networking is another layer abstracted by the OS. As programmers, we don’t deal with the NIC directly. Instead, we work with sockets and have access to APIs to deal with the TCP/IP stack.

However, the addition of higher-level abstractions implicitly adds overhead to the processing of our network function. The first disadvantage is that the function is split between two lands, user-space and kernel-space, and switching between them has a cost. And even if we move as much logic as possible to the kernel, there are inherent costs caused by the kernel’s networking layer.

The need to skip the kernel and program network functions entirely in user-space was triggered by the continuous improvement of hardware. Today it is possible to buy a 10G NIC for less than 200 euros. Soon the idea of building high-performance network appliances out of commodity hardware seemed feasible. Someone could pick an Intel Xeon, fill the available PCI slots with 10G NICs and expect to have the equivalent of a very expensive Cisco or Juniper router for a fraction of its cost.

If we drive the hardware described above entirely with Linux, we won’t be able to squeeze all the performance out of it. Every packet hitting the NICs has to go through the kernel’s networking layer, and that has a cost caused by all the operations the kernel performs on packets before they’re available to our program. To understand how much of a problem this is, I need to introduce the concept of budget in a network function.

Know your network function budget

If we want to make the most of our hardware, we generally want to run our network function at line rate, that is, at the maximum speed of the NIC. How much time does that leave per packet? On a 10G NIC receiving packets with an average size of 550 bytes at full speed, a new packet arrives every 440ns (550 bytes are 4,400 bits, and 10 Gbit/s moves 10 bits per nanosecond). That’s all the time we have available to run our network function on a packet.
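The arithmetic behind that figure can be written as a small Lua function. This is a minimal sketch: the function name `budget_ns` is made up for illustration, and it ignores the per-frame overhead that Ethernet adds on the wire, just as the estimate above does.

```lua
-- Time budget per packet at line rate, in nanoseconds.
-- link_gbps: NIC speed in Gbit/s; frame_bytes: average packet size in bytes.
local function budget_ns(link_gbps, frame_bytes)
   local bits = frame_bytes * 8
   return bits / link_gbps   -- 1 Gbit/s moves exactly 1 bit per nanosecond
end

local budget = budget_ns(10, 550)
print(string.format("%.1f ns per packet", budget))   --> 440.0 ns per packet
print(string.format("%.2f Mpps", 1000 / budget))     --> 2.27 Mpps
```

The second line expresses the same budget as a packet rate: a 440ns budget means processing roughly 2.27 million packets per second.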

Usually a NIC works by placing incoming packets in a queue or buffer. This buffer is actually a ring buffer, which means there are two cursors pointing into it: the Rx cursor and the Tx cursor. When a new packet arrives, it is written at the Rx position and the Rx cursor is advanced. When a packet leaves the buffer, it is read at the Tx position and the Tx cursor is advanced after the read. Our network function fetches packets from the Tx cursor. If it is too slow processing packets, the Rx cursor will eventually overtake the Tx cursor. When that happens there is a packet drop (a packet was overwritten before it was consumed).
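The behaviour of the two cursors can be sketched in plain Lua. This is a simplified model rather than how any particular NIC or driver implements its queues: the `Ring` type, its field names and the explicit `count` and `drops` counters are assumptions made for illustration.

```lua
local Ring = {}
Ring.__index = Ring

function Ring.new(size)
   return setmetatable({ size = size, slots = {}, rx = 0, tx = 0,
                         count = 0, drops = 0 }, Ring)
end

-- Called when a packet arrives: write it at the Rx cursor.
function Ring:write(p)
   if self.count == self.size then
      -- Rx caught up with Tx: the oldest unread packet gets overwritten.
      self.tx = (self.tx + 1) % self.size
      self.count = self.count - 1
      self.drops = self.drops + 1
   end
   self.slots[self.rx] = p
   self.rx = (self.rx + 1) % self.size
   self.count = self.count + 1
end

-- Called by the network function: read the packet at the Tx cursor.
function Ring:read()
   if self.count == 0 then return nil end
   local p = self.slots[self.tx]
   self.tx = (self.tx + 1) % self.size
   self.count = self.count - 1
   return p
end

-- A slow consumer: five packets arrive in a four-slot ring before any
-- of them is read, so the first one is lost.
local ring = Ring.new(4)
for i = 1, 5 do ring:write("packet " .. i) end
print(ring.drops)    --> 1
print(ring:read())   --> packet 2
```

The only way to avoid drops in this model is for `read` to be called at least as often as `write`, which is exactly the per-packet budget constraint.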

Let’s go back to the 440ns number. How much time is that? Kernel hacker Jesper Brouer discusses this issue in his excellent talk “Network stack challenges at increasing speed” (I also recommend LWN’s summary of the talk: Improving Linux networking performance). Here’s the cost of some common operations (the exact cost varies with the hardware, but the order of magnitude is similar across different setups):

- Spinlock (Lock/Unlock): 16ns.

- L2 cache hit: 4.3ns.

- L3 cache hit: 7.9ns.

- Cache miss: 32ns.

Taking those numbers into account, 440ns doesn’t seem like a lot of time. System calls are also prohibitively expensive and should be minimized as much as possible.
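To put the budget in perspective, the following sketch computes how many times each of the operations listed above would fit into 440ns. The costs are the ones quoted in the list; the table and function names are made up for illustration.

```lua
local BUDGET_NS = 440   -- per-packet budget from the 550-byte example

-- Costs in nanoseconds, taken from the list above.
local cost_ns = {
   spinlock   = 16,
   l2_hit     = 4.3,
   l3_hit     = 7.9,
   cache_miss = 32,
}

-- How many times an operation fits into the budget, rounded down.
local function fits(op)
   return math.floor(BUDGET_NS / cost_ns[op])
end

for _, op in ipairs({ "spinlock", "l2_hit", "l3_hit", "cache_miss" }) do
   print(op, fits(op))
end
```

Thirteen cache misses, for instance, are enough to use up the whole budget for a packet.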

Another important thing to notice is that the smaller the packets, the smaller the budget. On a 10G NIC receiving packets of 64 bytes on average, the minimum Ethernet frame size, the same arithmetic gives a new packet roughly every 51ns (about 67ns if the 20 bytes of preamble and inter-frame gap that accompany every frame on the wire are counted). In this scenario two consecutive cache misses would eat practically the whole budget.

In conclusion, at these NIC speeds the overhead added by the kernel’s networking layer is not trivial: it is big enough to affect the execution of our network function. Since our budget shrinks, packets are more likely to be dropped at higher speeds or at smaller packet sizes, which limits the overall performance of our network card.

NOTE: This is a general picture of the issues with high-performance networking in the Linux kernel. The kernel hackers are not ignorant of these problems and have been working on ways to fix them in recent years. In that regard it is worth mentioning the addition of XDP (eXpress Data Path), a kernel abstraction for executing network functions as close to the hardware as possible. But that’s a subject for another post.

By-passing the kernel

User-space networking needs to by-pass the kernel’s networking layer so it can squeeze all the performance out of the underlying hardware. There are several strategies to do that: user-space drivers, PF_RING, Netmap, etc. (Cloudflare has an excellent article on kernel by-pass that discusses several of those strategies). Snabb chooses to handle the hardware directly, that is, to provide user-space drivers for the NICs it supports.

Snabb offers support mostly for Intel cards (although some Solarflare and Mellanox models are also supported). Implementing a driver, either in kernel-space or user-space, is not an easy task. It’s essential to have access to the vendor’s datasheet (generally a very large document) to know how to initialize the NIC, how to read packets from it, how to transfer data, etc. Intel provides such datasheets. In fact, a few years ago Intel started a project with a similar goal: DPDK. DPDK is an open-source project that implements drivers in user-space. Although it originally only provided drivers for Intel NICs, as adoption of the project increased other vendors started to add drivers for their hardware.

Inside Snabb

Snabb was started in 2012 by free software hacker Luke Gorrie. Snabb provides direct access to the high-performance NICs but in addition to that it also provides an environment for building and running network functions.

Snabb is composed of several elements:

- An Engine, that runs the network functions.

- Libraries, that ease the development of network functions.

- Apps, reusable software components that generally manipulate packets.

- Programs, ready-to-use standalone network functions.

A network function in Snabb is a combination of apps connected together by links. Snabb’s engine is in charge of feeding the app graph with packets and giving every app a chance to execute.

The engine processes the app graph in breaths. A breath consists of two steps:

- Inhale, which puts packets into the graph.

- Process, in which every app has a chance to receive packets and manipulate them.

During the inhale phase the pull method of an app gets executed. Apps that implement this method act as packet generators within the app graph. Packets are placed on the app’s links. Generally there’s only one app of this kind in every graph.

During the process phase the push method of an app gets executed. This gives every app a chance to read packets from its incoming links, do something with them and possibly place them on its outgoing links.
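The two phases can be illustrated with a toy engine written in plain Lua. This is a minimal sketch of the idea and not Snabb’s actual engine: links are modelled as simple FIFO queues, the apps (`Source`, `Filter`, `Sink`) and the `cycle` function are hypothetical, and apps are simply run in the order they are listed.

```lua
-- A link is modelled here as a plain FIFO queue.
local function new_link() return { first = 1, last = 0, items = {} } end
local function transmit(l, p) l.last = l.last + 1; l.items[l.last] = p end
local function empty(l) return l.first > l.last end
local function receive(l)
   local p = l.items[l.first]
   l.items[l.first] = nil
   l.first = l.first + 1
   return p
end

-- Source app: implements pull, so it injects packets during inhale.
local Source = { output = new_link(), next_id = 1 }
function Source:pull()
   for _ = 1, 3 do
      transmit(self.output, { id = self.next_id })
      self.next_id = self.next_id + 1
   end
end

-- Filter app: implements push, forwards only packets with an even id.
local Filter = { input = Source.output, output = new_link() }
function Filter:push()
   while not empty(self.input) do
      local p = receive(self.input)
      if p.id % 2 == 0 then transmit(self.output, p) end
   end
end

-- Sink app: implements push, records what reaches the end of the graph.
local Sink = { input = Filter.output, seen = {} }
function Sink:push()
   while not empty(self.input) do
      table.insert(self.seen, receive(self.input).id)
   end
end

local apps = { Source, Filter, Sink }   -- listed in graph order

-- One engine cycle: inhale (every pull), then process (every push).
local function cycle()
   for _, app in ipairs(apps) do
      if app.pull then app:pull() end
   end
   for _, app in ipairs(apps) do
      if app.push then app:push() end
   end
end

cycle()
cycle()
print(table.concat(Sink.seen, ","))   --> 2,4,6
```

Only `Source` implements pull, so it is the single packet generator of this graph; the other two apps only react to whatever arrives on their incoming link.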

Hands-on example

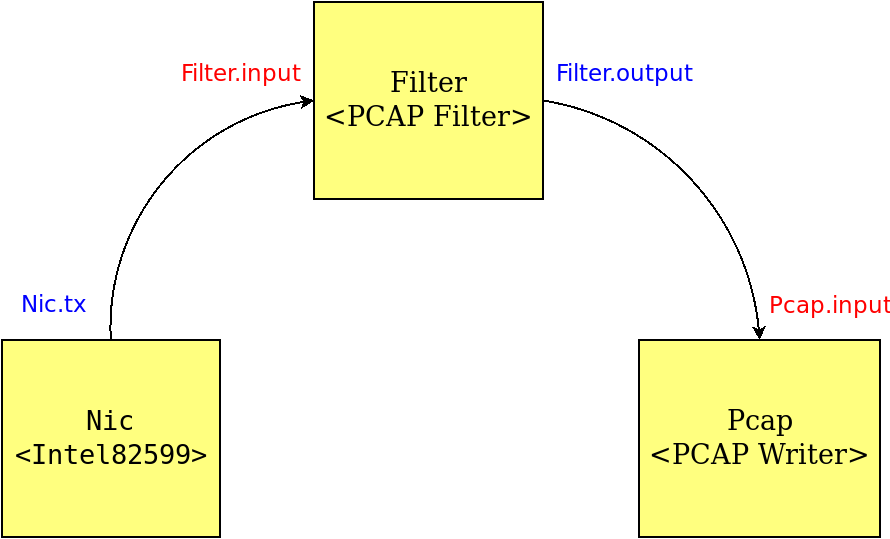

Let’s build a network function that captures packets from a 10G NIC filters them using a packet-filtering expression and writes down the filtered packets to a pcap file. Such network function would look like this:

In Snabb code the equivalent graph above could be coded like this:

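    -- Module paths as found in the Snabb source tree; check them against
    -- your Snabb version. `config` and `engine` are provided by Snabb.
    local Intel82599 = require("apps.intel.intel_app").Intel82599
    local PcapFilter = require("apps.packet_filter.pcap_filter").PcapFilter
    local Pcap = require("apps.pcap.pcap")

    function run()
       local c = config.new()

       -- App definition.
       config.app(c, "nic", Intel82599, {
          pci = "0000:04:00.0"
       })
       config.app(c, "filter", PcapFilter, { filter = "src port 80" })
       config.app(c, "pcap", Pcap.PcapWriter, "output.pcap")

       -- Link definition.
       config.link(c, "nic.tx -> filter.input")
       config.link(c, "filter.output -> pcap.input")

       engine.configure(c)
       engine.main({duration=10})
    end

A configuration is created describing the app graph of the network function. The configuration is passed to Snabb’s engine, which runs it for 10 seconds.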

When Snabb’s engine runs this network function, it first executes the pull method of each app that implements it to feed packets into the graph links (the inhale step). Then, during the process step, the push method of each app is executed so apps have a chance to fetch packets from their incoming links, do something with them and possibly place them on their outgoing links.

Here’s what a simplified version of the PcapFilter push method looks like:

    function PcapFilter:push ()
       -- Port names match the links defined in the graph above.
       local input, output = self.input.input, self.output.output
       while not link.empty(input) do
          local p = link.receive(input)
          if self.accept_fn(p.data, p.length) then
             link.transmit(output, p)   -- packet matches the filter: forward it
          else
             packet.free(p)             -- packet rejected: release it
          end
       end
    end

A packet in Snabb is a really simple data structure. Basically, it consists of a length field and a fixed-size array of bytes.

    struct packet {
      uint16_t length;
      unsigned char data[10*1024];
    };

A link is a ring-buffer of packets.

    struct link {
      struct packet *packets[1024];
      // the next element to be read
      int read;
      // the next element to be written
      int write;
    };

Every app has zero or more input links and zero or more output links. Links are created at runtime, when the graph is defined. In the example above, the nic app has one outgoing link (nic.tx); the filter app has one incoming link (filter.input) and one outgoing link (filter.output); and the pcap app has one incoming link (pcap.input).

It might be surprising that packets and links are defined in C code instead of Lua. Snabb runs on top of LuaJIT, an ultra-fast virtual machine for executing Lua programs. LuaJIT implements an FFI (Foreign Function Interface) to interact with C data types and to call C runtime functions or external libraries directly from Lua code. In Snabb most data structures are defined in C, which allows data to be laid out more compactly.

    local ffi = require("ffi")

    local ether_header_t = ffi.typeof [[
    /* All values in network byte order. */
    struct {
       uint8_t  dhost[6];
       uint8_t  shost[6];
       uint16_t type;
    } __attribute__((packed))
    ]]

Calling a C runtime function is really easy too.

    local ffi = require("ffi")

    -- Declare the C function so LuaJIT knows its signature.
    ffi.cdef[[
    void syslog(int priority, const char *format, ...);
    ]]

    ffi.C.syslog(2, "error:...");

Wrapping up and last thoughts

In this article I’ve covered the basics of Snabb. I showed how to use Snabb to build network functions and explained why Snabb is a very convenient toolkit for writing this type of program. Snabb runs very fast because it by-passes the kernel, which makes it very useful for high-performance networking. In addition, Snabb is written in Lua, a high-level language, which greatly lowers the entry barrier to writing network functions.

However, there are more things in Snabb that I left out of this article. Snabb comes with a set of programs ready to run. It also comes with a vast collection of apps and libraries that can help speed up the construction of new network functions.

You don’t need to own an Intel 10G card to start using Snabb today. Snabb can also be used over TAP interfaces. It won’t be highly performant, but it’s the best way to get started with Snabb.

In a future article I plan to cover a more elaborate example of a network function using TAP interfaces.

Please drop me a line if you have any feedback. Thanks!